Web scraping is an automated technique that lets individuals and businesses extract information from websites. It plays a role across many industries, from e-commerce to market research, giving companies insight into competitive pricing and customer sentiment. With web scraping tools and techniques, users can gather large datasets, streamline decision-making, and improve their strategies. Scraping ethically is essential, however, both to manage legal risk and to respect website owners’ terms of service. As the volume of data online continues to grow, mastering web scraping techniques becomes increasingly important for anyone looking to stay competitive in today’s data-driven landscape.

Also known as web harvesting or web data extraction, web scraping uses automated methods to gather information from online sources. It can improve productivity in areas such as research, marketing analysis, and sales strategy development. With specialized harvesting tools, users can track trends, monitor competitors, and analyze market data. Applying ethical standards throughout helps ensure compliance with the legal frameworks that govern data usage. With the right techniques, businesses can turn this data into insights that inform decisions and support growth.

Understanding Web Scraping Techniques

Web scraping techniques are the backbone of data extraction in many industries. One of the most common methods is to send HTTP requests that retrieve the HTML content of web pages. This is usually combined with a parsing library that interprets the HTML structure so the desired data can be extracted systematically. Tools like Beautiful Soup in Python are popular because they parse a page into a navigable tree, similar in spirit to the Document Object Model (DOM), and retrieve specific elements based on user-defined criteria.
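The request-then-parse flow described above can be sketched with the Python standard library alone. This is a minimal illustration, not a recommended production setup: it swaps in `html.parser` for Beautiful Soup so that it runs without extra installs, and the `product` class name, the sample HTML, and the `example-scraper/0.1` user agent are all assumptions made up for the example.

```python
from html.parser import HTMLParser
from urllib.request import Request, urlopen


def fetch_html(url, user_agent="example-scraper/0.1"):
    """Retrieve a page over HTTP and decode it to text (not called below)."""
    request = Request(url, headers={"User-Agent": user_agent})
    with urlopen(request, timeout=10) as response:
        charset = response.headers.get_content_charset() or "utf-8"
        return response.read().decode(charset, errors="replace")


class ProductLinkParser(HTMLParser):
    """Collect (href, text) for every <a> whose class list contains 'product'."""

    def __init__(self):
        super().__init__()
        self.links = []
        self._in_product = False
        self._href = None
        self._text_parts = []

    def handle_starttag(self, tag, attrs):
        attr_map = dict(attrs)
        classes = (attr_map.get("class") or "").split()
        if tag == "a" and "product" in classes:
            self._in_product = True
            self._href = attr_map.get("href")
            self._text_parts = []

    def handle_data(self, data):
        if self._in_product:
            self._text_parts.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._in_product:
            text = "".join(self._text_parts).strip()
            self.links.append((self._href, text))
            self._in_product = False


def extract_product_links(html):
    """Parse an HTML string and return the matching links in document order."""
    parser = ProductLinkParser()
    parser.feed(html)
    parser.close()
    return parser.links


# Hypothetical page content, used instead of a live request.
SAMPLE_HTML = """
<html><body>
  <a class="product" href="/items/1">Blue Mug</a>
  <a class="nav" href="/about">About</a>
  <a class="product featured" href="/items/2">Red Kettle</a>
</body></html>
"""

if __name__ == "__main__":
    for href, text in extract_product_links(SAMPLE_HTML):
        print(href, text)
```

In a real scraper, the string passed to `extract_product_links` would come from `fetch_html`, and a library such as Beautiful Soup would typically replace the hand-written parser class with a selector query.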

Another effective approach is employing APIs where available. Many sites offer APIs that provide structured data in a more accessible format compared to standard web pages. Using APIs can not only streamline the data extraction process but also significantly reduce the risk of encountering issues related to scraping, such as getting blocked or facing legal challenges. By understanding and applying various web scraping techniques, businesses can harness data in ways that support their digital strategies.
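To illustrate why an API is often simpler than parsing HTML, the sketch below builds a query URL and reads fields straight out of a JSON payload. The endpoint `https://api.example.com/v1/products`, its `category` and `page` parameters, and the shape of the payload are hypothetical; a real API defines its own, so its documentation should be the guide.

```python
import json
from urllib.parse import urlencode
from urllib.request import Request, urlopen

API_BASE = "https://api.example.com/v1/products"  # hypothetical endpoint


def build_api_url(base, **params):
    """Append query parameters to the base URL in a stable (sorted) order."""
    return f"{base}?{urlencode(sorted(params.items()))}"


def fetch_json(url):
    """Request a URL and decode the JSON body (not called below)."""
    request = Request(url, headers={"Accept": "application/json"})
    with urlopen(request, timeout=10) as response:
        return json.loads(response.read().decode("utf-8"))


def extract_prices(payload):
    """Map product name to price for a payload shaped like SAMPLE_PAYLOAD."""
    return {item["name"]: item["price"] for item in payload["items"]}


# Assumed response shape, standing in for a live API call.
SAMPLE_PAYLOAD = json.loads(
    '{"items": [{"name": "Blue Mug", "price": 7.5},'
    ' {"name": "Red Kettle", "price": 24.0}]}'
)

if __name__ == "__main__":
    print(build_api_url(API_BASE, category="kitchen", page=2))
    print(extract_prices(SAMPLE_PAYLOAD))
```

Because the data arrives already structured, there is no markup to navigate and no selector to break when the site's layout changes.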

Essential Web Scraping Tools

When it comes to web scraping, having the right tools makes a significant difference in efficiency and accuracy. Popular tools such as Scrapy, a Python framework, let users develop spiders that navigate websites, extract data, and store it in various formats. Scrapy stands out for its support for asynchronous network requests, which lets it scrape many pages concurrently. For those less versed in coding, browser extensions like Web Scraper (webscraper.io) provide an intuitive interface for capturing data without deep technical knowledge.
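The benefit of asynchronous requests can be shown with a small sketch. This is not Scrapy's API; it uses Python's built-in `asyncio` to illustrate the general idea of having several page fetches in flight at once while capping concurrency. The `fake_fetch` coroutine is a stand-in that sleeps instead of touching the network, and the URLs are placeholders.

```python
import asyncio


async def fake_fetch(url):
    """Stand-in for a network call: waits briefly, then returns fake HTML."""
    await asyncio.sleep(0.01)
    return f"<html>{url}</html>"


async def crawl(urls, fetch=fake_fetch, max_concurrency=5):
    """Fetch all URLs concurrently, with at most max_concurrency in flight."""
    semaphore = asyncio.Semaphore(max_concurrency)

    async def bounded_fetch(url):
        async with semaphore:
            return url, await fetch(url)

    pairs = await asyncio.gather(*(bounded_fetch(url) for url in urls))
    return dict(pairs)


def run_crawl(urls):
    """Synchronous entry point that drives the event loop."""
    return asyncio.run(crawl(urls))


if __name__ == "__main__":
    pages = run_crawl(["https://example.com/a", "https://example.com/b"])
    for url, body in pages.items():
        print(url, len(body))
```

While one request is waiting on the network, the event loop can make progress on the others, which is why total crawl time grows far more slowly than the number of pages. The concurrency cap keeps that speed from turning into excessive load on the target site.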

Moreover, tools like Selenium offer powerful browser automation capabilities, allowing for the simulation of user interactions with a website. This feature is especially useful for scraping dynamic websites that rely heavily on JavaScript to present their data. With the right combination of web scraping tools, users can proficiently extract vast amounts of data while addressing complexities such as session handling, CAPTCHA challenges, and data cleaning.

Ethical Web Scraping Practices

While web scraping gives many industries access to a wealth of information, it’s crucial to approach the practice with a firm understanding of ethical guidelines. Ethical web scraping means obtaining data legally and in compliance with a website’s terms of service. For instance, scraping publicly available data from e-commerce sites for competitive analysis is typically acceptable, provided it doesn’t put undue strain on the site’s server. Additionally, respecting robots.txt directives, which tell automated clients which paths the site owner does not want fetched, helps keep a scraper away from content it should not collect.
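Checking robots.txt before fetching a page can be automated with Python's standard `urllib.robotparser` module. The sketch below parses an inline sample file rather than downloading one; the rules, the `example-scraper` user agent, and the URLs are assumptions for illustration only.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; a real one lives at <site>/robots.txt.
SAMPLE_ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 2
"""


def build_robot_parser(robots_txt):
    """Create a parser from robots.txt text that has already been retrieved."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


def is_allowed(parser, url, user_agent="example-scraper"):
    """Return True if the rules permit this user agent to fetch the URL."""
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    rules = build_robot_parser(SAMPLE_ROBOTS_TXT)
    print(is_allowed(rules, "https://example.com/products"))
    print(is_allowed(rules, "https://example.com/private/data"))
    print(rules.crawl_delay("example-scraper"))
```

Against a live site, `RobotFileParser.set_url()` followed by `read()` downloads the file instead of parsing a string. Passing the check does not by itself settle whether scraping is permitted, since the site's terms of service still apply.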

It is also important to consider the privacy of individuals when scraping data, particularly from social media platforms or review sites where personal opinions are shared. Collecting data for business insights should be conducted transparently, fostering trust between businesses and their customers. Overall, adhering to ethical standards in web scraping not only safeguards legal interests but also enhances the reputation of organizations leveraging this powerful tool.

Practical Uses of Web Scraping Across Industries

The practical uses of web scraping span a wide array of industries, helping businesses leverage data for strategic advantages. In e-commerce, companies utilize web scraping to monitor competitor pricing, enabling them to adjust their own prices dynamically to stay competitive in a rapidly shifting market landscape. By analyzing historical pricing data, businesses gain insights into pricing trends, helping to forecast future customer behavior.
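As a rough illustration of dynamic price adjustment, the sketch below applies one simple, hypothetical rule: undercut the cheapest scraped competitor price by a fixed amount, but never drop below a floor. The rule itself, the function name, and all of the numbers are assumptions for the example, not a recommended pricing strategy. Prices are held as integer cents to avoid floating-point rounding.

```python
def suggest_price_cents(current_cents, competitor_cents, floor_cents, undercut_cents=1):
    """Suggest a price from scraped competitor prices.

    Keeps the current price when no competitor data is available; otherwise
    undercuts the lowest competitor, clamped so it never goes below the floor.
    """
    if not competitor_cents:
        return current_cents
    target = min(competitor_cents) - undercut_cents
    return max(target, floor_cents)


if __name__ == "__main__":
    # Made-up prices: ours is 20.00, competitors charge 19.50 and 21.00.
    print(suggest_price_cents(2000, [1950, 2100], floor_cents=1500))
```

A production system would usually add more inputs, such as stock levels, margins, and how fresh the scraped prices are, before changing a live price.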

Additionally, the market research sector benefits immensely from web scraping. By gathering customer reviews, social media sentiments, and even news articles, firms can analyze public opinion in real-time. This capability allows businesses to pivot their marketing strategies based on current trends and consumer feedback, leading to informed decision-making that aligns with market demands.

The Role of Data Extraction in Business Intelligence

Data extraction is critical in the realm of business intelligence, serving as the foundational step for generating meaningful insights. Through web scraping, organizations can compile vast datasets from various online sources, enabling them to analyze trends, customer preferences, and competitive landscapes. This data, once organized and interpreted, can lead to actionable insights that drive strategic initiatives, helping businesses stay ahead of the competition.

Moreover, implementing effective data extraction techniques enhances operational efficiency. By automating the data gathering process, companies reduce manual labor and the likelihood of human error, ensuring that decisions are based on reliable and up-to-date information. In the fast-paced digital environment, the ability to quickly extract and analyze data is vital for maintaining a responsive and agile business strategy.

Challenges and Risks of Web Scraping

Despite its many advantages, web scraping is not without its challenges and risks. One of the primary concerns is the legal implications surrounding data extraction. Websites often have specific terms of service that may restrict or prohibit scraping activities. Ignoring these guidelines can lead to legal action from site owners, potentially resulting in fines and blocked access to data.

Another challenge involves technical barriers such as CAPTCHAs and other anti-scraping technologies that many websites deploy. These measures are designed to deter automated scraping and can complicate the extraction process. Handling them requires continuous adaptation and tooling that can cope with such measures, and any workaround still needs to stay within the site’s terms of service and ethical standards.

Leveraging Web Scraping for Market Analysis

Web scraping has emerged as an invaluable tool for market analysis, allowing businesses to gather insights from a multitude of online sources. By scraping data from competitor websites, forums, and social media platforms, companies can develop a comprehensive understanding of market dynamics and consumer behavior. This information enables organizations to tailor their products, services, and marketing strategies to meet evolving customer needs.

Additionally, web scraping facilitates the collection of quantitative data, such as sales figures and product availability. Companies can use this data to identify market trends, assess demand, and pinpoint growth opportunities. By leveraging web scraping effectively, businesses can make data-driven decisions that enhance their competitive edge and foster sustainable growth in their target markets.

The Future of Web Scraping Technologies

As technology continues to evolve, the future of web scraping is set to become even more advanced and sophisticated. Innovations in artificial intelligence and machine learning are paving the way for smarter scraping tools that can learn from user activities and improve data extraction accuracy over time. These intelligent systems will not only streamline the scraping process but also enhance the quality of the insights generated from the collected data.

Furthermore, advancements in cloud-based services are likely to enable more scalable and efficient scraping operations. Businesses can access vast computing resources without the need for extensive on-premise infrastructure, allowing them to scrape larger datasets rapidly. The convergence of these technologies suggests a bright future for web scraping, with capabilities that will empower organizations to transform data into actionable intelligence with unprecedented speed and efficiency.

Web Scraping as a Competitive Advantage

In today’s data-driven landscape, effective web scraping can serve as a powerful competitive advantage. By gathering and analyzing large volumes of data from various online platforms, businesses can uncover valuable insights that inform their strategic decisions. For instance, by monitoring competitor pricing, brands can adjust their pricing strategies swiftly, staying one step ahead in a competitive marketplace.

Moreover, organizations that harness the power of web scraping can identify emerging trends and consumer preferences faster than those relying solely on traditional market research methods. This agility allows them to tailor their offerings and marketing campaigns to align with current market demands, ultimately enhancing their market position and solidifying customer loyalty.

Frequently Asked Questions

What is web scraping and how does it work?

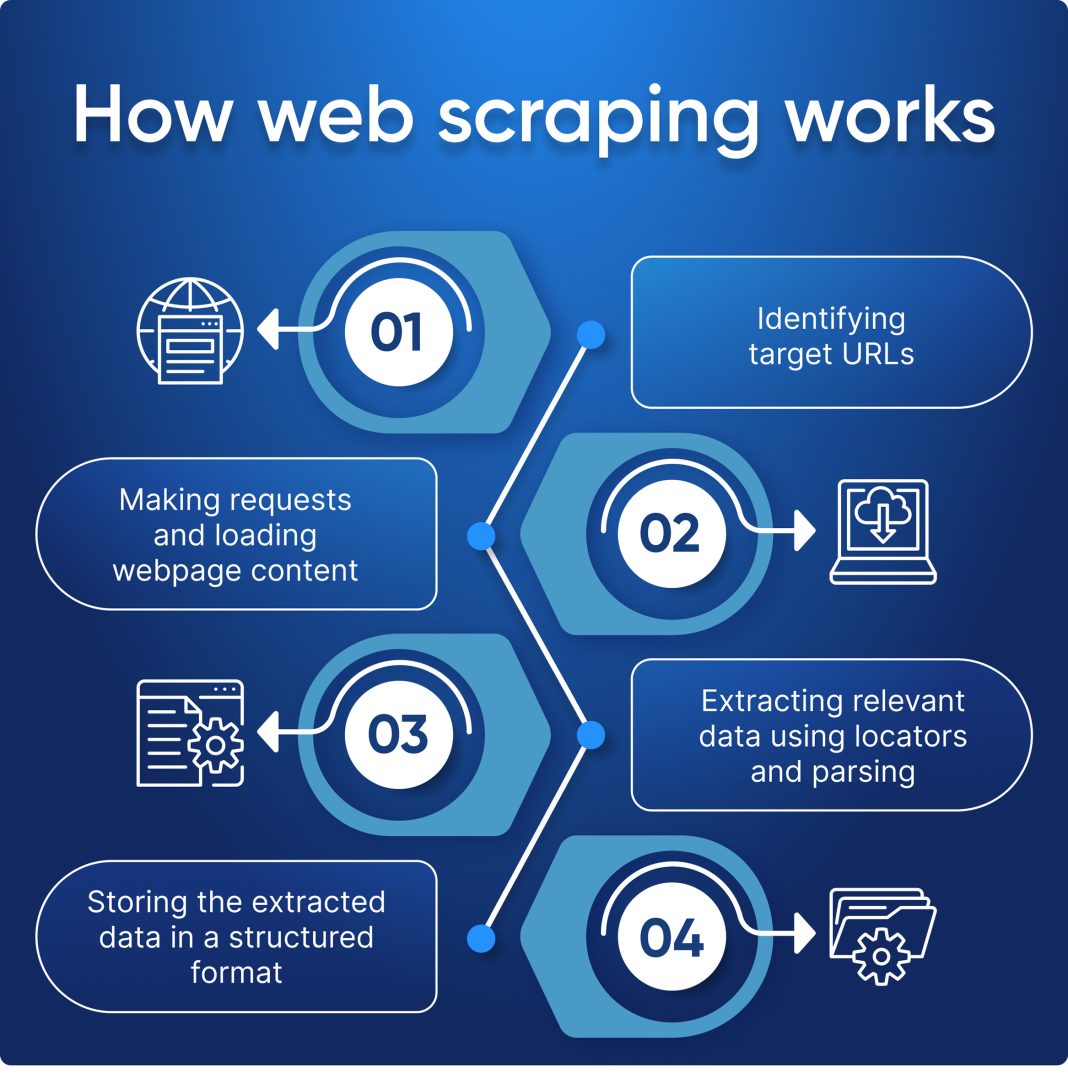

Web scraping is an automated technique used to extract information from websites. It works by sending requests to web servers, retrieving HTML content, and parsing it to collect relevant data. This data can be used for various applications such as data analysis, market research, and more.

What are the most common web scraping techniques?

Common web scraping techniques include HTML parsing, API consumption, and browser automation. HTML parsing involves extracting content from a webpage’s structure using tools like Beautiful Soup or Scrapy. API consumption allows extracting data from websites that provide APIs, while browser automation tools like Selenium simulate user actions to access dynamic content.

What tools can I use for web scraping?

There are various web scraping tools available, such as Beautiful Soup and Scrapy for Python, which are excellent for parsing HTML. Additionally, browser automation tools like Selenium help scrape dynamically loaded content. Other popular scraping tools include Octoparse, ParseHub, and Apify, each with unique features suited for different scraping needs.

What are the ethical considerations in web scraping?

Ethical web scraping involves respecting a website’s terms of service, being mindful of the robots.txt file, and avoiding excessive requests that could disrupt a website’s operation. Ethical scrapers ensure that the data extraction aligns with privacy laws and does not infringe on copyright or intellectual property rights.
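One way to avoid excessive requests is to enforce a minimum delay between consecutive fetches. The class below is a minimal sketch of that idea; the `Throttle` name and the two-second interval are illustrative assumptions, and the clock and sleep functions are injectable so the behavior can be checked without actually waiting.

```python
import time


class Throttle:
    """Enforce a minimum interval, in seconds, between consecutive requests."""

    def __init__(self, min_interval, clock=time.monotonic, sleep=time.sleep):
        self.min_interval = min_interval
        self._clock = clock
        self._sleep = sleep
        self._last_request = None

    def wait(self):
        """Pause if the previous request was too recent; return seconds slept."""
        waited = 0.0
        if self._last_request is not None:
            elapsed = self._clock() - self._last_request
            remaining = self.min_interval - elapsed
            if remaining > 0:
                self._sleep(remaining)
                waited = remaining
        # Record the time of this request after any pause.
        self._last_request = self._clock()
        return waited


if __name__ == "__main__":
    throttle = Throttle(min_interval=2.0)
    for path in ["/a", "/b", "/c"]:
        throttle.wait()  # call before each request
        print("fetching", path)
```

When a site publishes a crawl delay in robots.txt, that value is a natural choice for `min_interval`.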

How can businesses benefit from web scraping?

Businesses can utilize web scraping to gather competitive pricing information, monitor market trends, analyze customer sentiment through reviews, and track product availability. These insights can inform pricing strategies, enhance marketing efforts, and support data-driven decision-making.

Is web scraping legal?

The legality of web scraping often depends on how it’s performed and the target website’s terms of service. While scraping publicly available information is generally permissible, scraping with malicious intent or against a website’s policies can lead to legal repercussions. It’s important to review applicable laws and website terms before scraping.

What are some use cases for web scraping in different industries?

Web scraping has diverse use cases across various industries: in e-commerce, it helps compare prices and monitor competitors; in real estate, it extracts property listings; in finance, it gathers market data; and in academia, it aids in collecting research data. Each of these applications enables organizations to make informed decisions based on real-time data.

What are the challenges associated with web scraping?

Challenges in web scraping include dealing with anti-scraping measures implemented by websites, such as CAPTCHAs, IP blocking, and dynamic content loading. Additionally, maintaining compliance with legal regulations and managing the quality and reliability of extracted data can pose difficulties for web scraping efforts.

How can I start learning web scraping?

To start learning web scraping, consider exploring online tutorials and courses that focus on using Python with libraries like Beautiful Soup and Scrapy. Familiarizing yourself with HTML, CSS, and JavaScript is also beneficial, as understanding webpage structures will aid in effectively extracting data. Join web scraping communities to exchange knowledge and best practices.

What is the role of APIs in web scraping?

APIs play a significant role in web scraping by providing a structured way to access data directly from services and platforms. Many websites offer APIs that enable users to request specific data fields without the need for scraping the website’s HTML. Using APIs can enhance the efficiency and legality of data extraction efforts.

| Key Point | Details |
|---|---|
| Definition of Web Scraping | Automated technique for extracting data from websites. |
| Industries Utilizing Web Scraping | E-commerce, data analysis, market research. |
| Benefits of Web Scraping | Collect competitive pricing, analyze customer sentiment. |
| Methods and Tools | APIs, Python libraries (Beautiful Soup, Scrapy), Selenium. |
| Legal and Ethical Considerations | Can breach website terms of service. |

Summary

Web scraping is an essential technique that allows businesses to gather valuable data for strategic decision-making. By implementing effective web scraping methods, companies can unlock insights that contribute to enhanced planning and competitive advantages in various industries. However, it is crucial to navigate the legal and ethical landscape to ensure compliance with terms of service, thereby maintaining a responsible approach to data extraction.