Web scraping is a widely used way to extract data from websites, letting individuals and businesses collect information at a scale that manual copying cannot match. Combined with web crawling, it automates data collection from start to finish. With basic Python skills, anyone can use libraries such as Beautiful Soup to parse pages and pull out the fields they need. However, it’s crucial to scrape ethically: respect each website’s terms of service and protect user data privacy. As the technology evolves, AI is expected to change how online information is gathered and analyzed.

The same practice is also called data harvesting or online data extraction, and it supports work in many industries. Automated tools navigate web pages and catalog the relevant information for later use. Python is a common choice because its libraries keep data retrieval code short. Any such project should account for the ethical and legal implications of collecting data, and artificial intelligence may enable more sophisticated collection methods over time.

Understanding Web Scraping and Data Extraction

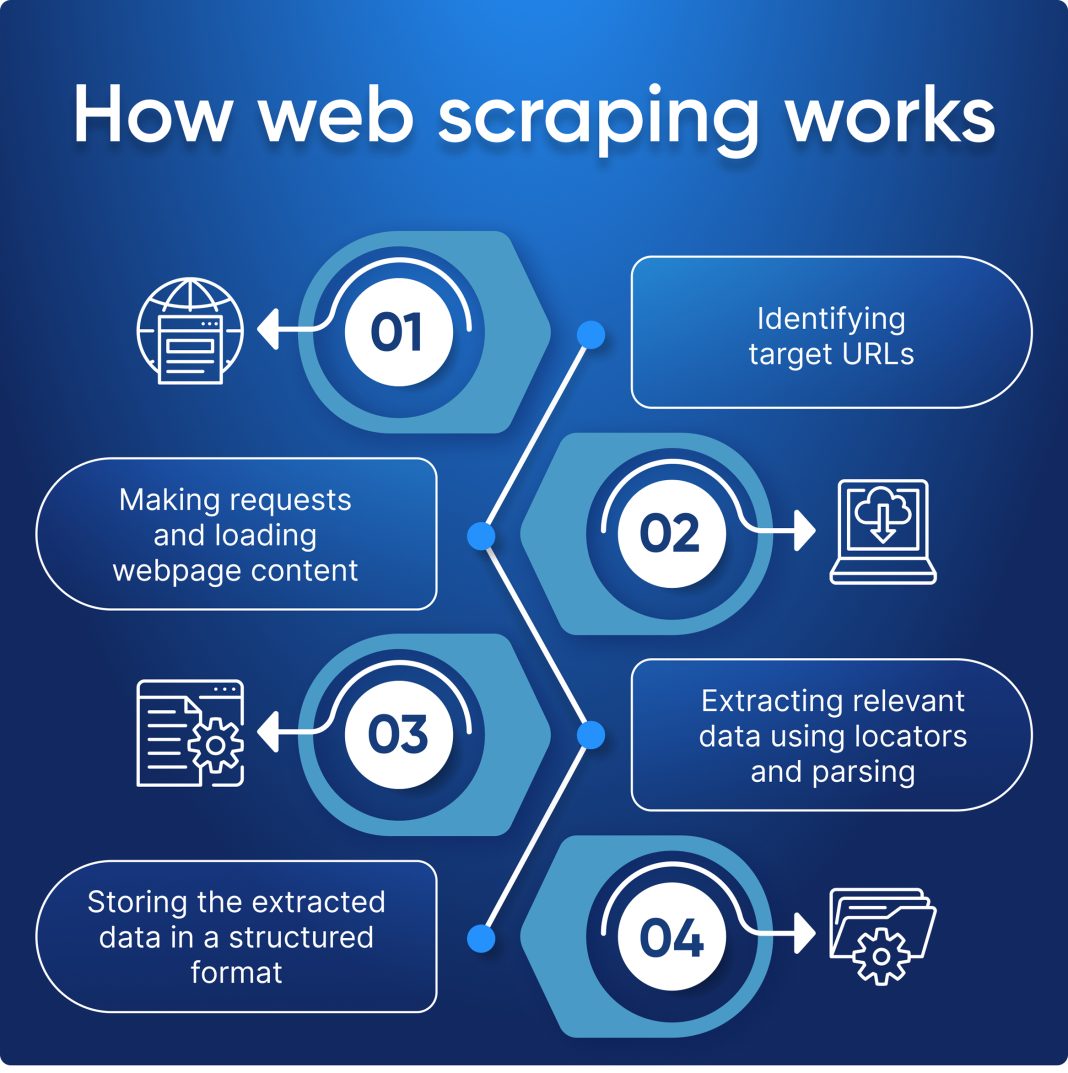

Web scraping is a vital technique used to collect and extract data from various websites. This process involves automated scripts that mimic human web browsing behavior to retrieve significant amounts of information efficiently. By using tools and programming languages, such as Python, developers can harness libraries like Beautiful Soup or Scrapy to simplify the data extraction process. These tools aid in parsing HTML and XML documents, allowing for seamless data organization and transformation.

Data extraction is closely linked to web crawling, wherein software bots systematically browse the web to index content for future reference. This technique not only aids businesses by aggregating data from multiple sources but also enhances their analytical capabilities. However, understanding the nuances of web scraping is essential to ensure compliance with legal regulations and respect for website owners’ rights.
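The crawling idea described above can be sketched with a breadth-first traversal. This is a minimal illustration, not a production crawler: the `PAGES` dictionary is a hypothetical in-memory stand-in for a website so that the example runs without network access, and `LinkCollector` and `crawl` are names invented for this sketch.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urljoin


class LinkCollector(HTMLParser):
    """Collects the href value of every <a> tag it sees."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


# Hypothetical in-memory "website" (assumption for the sketch).
PAGES = {
    "https://example.com/": '<a href="/a">A</a> <a href="/b">B</a>',
    "https://example.com/a": '<a href="/b">B</a>',
    "https://example.com/b": '<a href="/">Home</a>',
}


def crawl(start_url, fetch, max_pages=50):
    """Breadth-first crawl; returns URLs in the order they were visited."""
    seen = {start_url}          # URLs already queued, to avoid revisits
    queue = deque([start_url])  # frontier of URLs still to fetch
    visited = []
    while queue and len(visited) < max_pages:
        url = queue.popleft()
        html = fetch(url)
        if html is None:        # page missing: skip it
            continue
        visited.append(url)
        parser = LinkCollector()
        parser.feed(html)
        for href in parser.links:
            absolute = urljoin(url, href)  # resolve relative links
            if absolute not in seen:
                seen.add(absolute)
                queue.append(absolute)
    return visited


order = crawl("https://example.com/", PAGES.get)
```

In a real crawler, `fetch` would perform an HTTP request. Passing it in as a parameter keeps the traversal logic separate from the network code.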

The Ethical Considerations in Web Scraping

Web scraping raises several ethical considerations that practitioners must address. Ethical web scraping means adhering to each website’s terms of service, which may limit or prohibit automated data collection. Respecting these terms reduces the risk of infringing copyrights or violating user privacy, although it does not guarantee either. Web scrapers should also be transparent about their intentions and the nature of the data being collected.

Moreover, ethical practices can also contribute to data integrity and public trust in the scraping community. Engaging in responsible data extraction means not only being compliant with legal frameworks but also considering the potential impacts on the websites being scraped. This entails being mindful of the server load, requesting data at reasonable intervals, and avoiding actions that could disrupt site functionality.

Python Web Scraping: A Practical Approach

Python has emerged as one of the most popular languages for web scraping due to its versatility and ease of use. With libraries like Beautiful Soup and Scrapy, developers can build robust scraping solutions tailored to their specific needs. These libraries facilitate parsing HTML documents, navigating through DOM trees, and extracting relevant data while maintaining efficiency in code execution.

In practical applications, Python enables users to create scripts that can automate the process of data collection with minimal effort. Additionally, its community support and rich ecosystem of tools empower developers to enhance their web scraping abilities. From handling complex pages to circumventing anti-scraping measures, Python’s capabilities make it an essential tool for anyone interested in data-driven insights.
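As a rough illustration of parsing HTML and extracting records, the sketch below turns a small product list into Python dictionaries. To stay dependency-free it uses the standard library’s `html.parser` rather than Beautiful Soup or Scrapy, which offer the same idea through higher-level APIs. The HTML snippet, the class names `product`, `name`, and `price`, and the `ProductParser` class are all assumptions made up for this example.

```python
from html.parser import HTMLParser

# Hypothetical page fragment (assumption for the sketch).
HTML = """
<ul>
  <li class="product"><span class="name">Widget</span><span class="price">$9.99</span></li>
  <li class="product"><span class="name">Gadget</span><span class="price">$24.50</span></li>
</ul>
"""


class ProductParser(HTMLParser):
    """Builds one dict per <li class="product"> element."""

    def __init__(self):
        super().__init__()
        self.products = []
        self._field = None  # name of the field currently being read

    def handle_starttag(self, tag, attrs):
        css_class = dict(attrs).get("class")
        if tag == "li" and css_class == "product":
            self.products.append({})  # start a new record
        elif tag == "span" and css_class in ("name", "price") and self.products:
            self._field = css_class

    def handle_data(self, data):
        if self._field:
            record = self.products[-1]
            # Append in case the parser delivers the text in several chunks.
            record[self._field] = record.get(self._field, "") + data.strip()

    def handle_endtag(self, tag):
        if tag == "span":
            self._field = None


parser = ProductParser()
parser.feed(HTML)
products = parser.products
```

Each entry in `products` is a dictionary with `name` and `price` keys, ready to be cleaned or stored.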

Innovations in AI for Web Scraping

The integration of artificial intelligence (AI) into web scraping practices is revolutionizing the way data is collected and processed. AI algorithms can enhance the scraping process by intelligently identifying patterns, making predictions, and automating decision-making based on the scraped data. This allows organizations not only to gather data more efficiently but also to analyze it on a deeper level.

Furthermore, AI-driven tools are increasingly becoming adept at simulating human behavior, which can significantly improve the effectiveness of web scraping efforts. Such advancements lead to better handling of CAPTCHA systems, dynamic content loading, and even adaptive scraping techniques that can adjust based on website changes. The use of AI in web scraping thus opens new frontiers for data extraction, fostering innovative solutions that can process vast amounts of information seamlessly.

Best Practices for Effective Web Crawling

Following best practices for web crawling keeps scraping operations both effective and ethical. Start with the website’s robots.txt file, which states which parts of the site automated clients may access. Following these rules reduces the risk of blocks or legal issues and is a baseline for responsible scraping behavior.
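A robots.txt check can be sketched with Python’s standard `urllib.robotparser` module. The rules below are a made-up example parsed from a string so the snippet runs offline, and the user agent name `example-research-bot` is an assumption. Against a live site, one would typically call `set_url()` and `read()` instead of `parse()`.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content (assumption for the sketch).
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 5
"""

rules = RobotFileParser()
rules.parse(ROBOTS_TXT.splitlines())

USER_AGENT = "example-research-bot"  # hypothetical bot name

# True when the rules permit this user agent to fetch the URL.
allowed = rules.can_fetch(USER_AGENT, "https://example.com/articles/1")
blocked = rules.can_fetch(USER_AGENT, "https://example.com/private/report")

# Crawl-delay in seconds if the file declares one, otherwise None.
delay = rules.crawl_delay(USER_AGENT)
```

A scraper would skip any URL for which `can_fetch` returns `False` and, where a crawl delay is declared, wait at least that long between requests.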

Additionally, employing efficient request practices can greatly impact the success of web crawling activities. By spacing out requests and limiting the number of simultaneous connections, scrapers can reduce the risk of overwhelming servers and potentially causing service interruptions. Keeping track of data changes and employing techniques to handle changes in web structures are also essential components for long-term success in web scraping.
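One simple way to space out requests is a per-host throttle that enforces a minimum interval between consecutive requests to the same host. The `Throttle` class below is a hypothetical helper written for this sketch, not part of any library. The `clock` and `sleep` parameters exist only so the behavior can be tested without real waiting.

```python
import time


class Throttle:
    """Enforces a minimum delay between requests to the same host."""

    def __init__(self, min_interval, clock=time.monotonic, sleep=time.sleep):
        self.min_interval = min_interval  # seconds between requests per host
        self._clock = clock
        self._sleep = sleep
        self._last_request = {}           # host -> time of the last request

    def wait(self, host):
        """Blocks until the host may be contacted; returns seconds waited."""
        waited = 0.0
        last = self._last_request.get(host)
        if last is not None:
            remaining = self.min_interval - (self._clock() - last)
            if remaining > 0:
                self._sleep(remaining)
                waited = remaining
        self._last_request[host] = self._clock()
        return waited
```

A scraper would call `throttle.wait(host)` immediately before each request. Limiting simultaneous connections is a separate concern, usually handled by capping the number of worker threads or tasks.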

Challenges in Web Scraping and Data Handling

While web scraping offers numerous advantages, it is not without its challenges. Websites frequently implement anti-scraping measures like CAPTCHAs, IP blocking, and dynamic content rendering to thwart automated access. Navigating these barriers requires scrapers to develop adaptive techniques, such as rotating IP addresses or using headless browsers, which simulate user interactions more closely.

Moreover, managing and storing vast amounts of extracted data also presents challenges. Scrapers must choose appropriate databases and structures that efficiently handle the incoming data while remaining scalable. Implementing data cleaning and validation processes is crucial to ensure the accuracy of the gathered information, ultimately enhancing the reliability of analytics derived from the scraped content.
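The cleaning, validation, and storage steps might look like the following sketch, which uses an in-memory SQLite database from the standard library. The sample rows, the `products` table layout, and the validation rules (a non-empty name and a parseable, non-negative price) are assumptions chosen for illustration. A real project would define rules that match its own data.

```python
import sqlite3

# Hypothetical scraped rows, including some bad ones (assumption).
raw_rows = [
    {"name": " Widget ", "price": "$9.99"},
    {"name": "Gadget", "price": "24.50"},
    {"name": "", "price": "$5.00"},        # missing name: rejected
    {"name": "Gizmo", "price": "N/A"},     # unparseable price: rejected
    {"name": "Widget", "price": "$9.99"},  # duplicate after cleaning
]


def clean(row):
    """Returns (name, price) for a valid row, or None if it fails validation."""
    name = row.get("name", "").strip()
    if not name:
        return None
    price_text = row.get("price", "").replace("$", "").replace(",", "").strip()
    try:
        price = float(price_text)
    except ValueError:
        return None
    if price < 0:
        return None
    return name, price


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE products (name TEXT PRIMARY KEY, price REAL NOT NULL)")

cleaned = [item for item in map(clean, raw_rows) if item is not None]
# INSERT OR IGNORE skips rows whose primary key already exists.
conn.executemany("INSERT OR IGNORE INTO products (name, price) VALUES (?, ?)", cleaned)
conn.commit()

stored = conn.execute("SELECT name, price FROM products ORDER BY name").fetchall()
```

Floating-point prices keep the sketch short. For monetary values, storing integer cents or using `decimal.Decimal` is usually the safer choice.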

The Future of Web Scraping Technologies

The landscape of web scraping is continuously evolving, influenced by advancements in technology and shifting legal frameworks. One of the most exciting developments is the growing integration of machine learning with web scraping tools. This combination allows scrapers to learn from previous data collection efforts, improve their efficiency, and even dynamically adapt to changes in website layouts or structures.

Moreover, as concerns about data privacy and protection grow, regulatory measures may reshape the future of web scraping practices. Organizations will need to stay ahead of these changes and adapt their scraping strategies accordingly, balancing data needs with compliance and ethical considerations. The ability to leverage cutting-edge technologies while adhering to responsible practices will define the future of web scraping.

Exploring Web Scraping Tools and Libraries

There is a plethora of tools and libraries available for web scraping, each catering to different user needs and expertise levels. Popular options include Beautiful Soup for parsing HTML and XML documents, Scrapy for building powerful web crawlers, and Selenium for automating web interactions. These tools not only streamline the scraping process but also enhance data management capabilities, making it easier for users to retrieve and manipulate information.

Additionally, specialized scraping tools like Octoparse provide a user-friendly interface, allowing non-programmers to engage in data extraction without extensive coding knowledge. Understanding the strengths and weaknesses of various tools can help individuals select the right solution for their specific scraping projects, facilitating effective data extraction from the vast resources available on the web.

The Importance of Respecting Data Privacy in Web Scraping

Respecting data privacy is paramount in the realm of web scraping. As more users become aware of their data rights and the implications of data collection, scrapers must prioritize ethical practices and compliance with regulations such as GDPR and CCPA. These laws emphasize transparency about data usage and, under GDPR, require a lawful basis such as consent before personal information is collected.

Failure to respect data privacy can lead to severe legal repercussions and damage to a company’s reputation. Scrapers should implement robust measures to anonymize and protect sensitive data while ensuring that their scraping practices comply with relevant legal standards. By placing a strong emphasis on data privacy, organizations can build trust and foster a healthy relationship with both their data sources and end-users.
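One possible protective measure is to replace personal identifiers with keyed hashes before storing scraped text. The sketch below does this for email addresses. Note that this is pseudonymization, a weaker guarantee than true anonymization, and it is not a substitute for legal review. The regular expression is deliberately simple, and the key value and the function names `pseudonymize` and `redact_emails` are assumptions for this example.

```python
import hashlib
import hmac
import re

# Simplified email pattern (assumption; real addresses can be more varied).
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def pseudonymize(value, secret_key):
    """Returns a short keyed hash; the same input always maps to the same token."""
    digest = hmac.new(secret_key, value.lower().encode("utf-8"), hashlib.sha256)
    return digest.hexdigest()[:16]


def redact_emails(text, secret_key):
    """Replaces every email address in text with a pseudonymous token."""
    return EMAIL_RE.sub(
        lambda match: "<email:" + pseudonymize(match.group(0), secret_key) + ">",
        text,
    )


KEY = b"replace-with-a-real-secret"  # placeholder; keep real keys out of source code
sample = "Contact Alice at alice@example.com or ALICE@example.com."
redacted = redact_emails(sample, KEY)
```

Because the hash is keyed and case-insensitive, both spellings of the address map to the same token, so records can still be linked without the address itself being stored.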

Frequently Asked Questions

What is web scraping and how does it relate to data extraction?

Web scraping is the automated process of extracting data from websites. It uses bots or scripts to collect information and transform it into a structured format. Data extraction is the broader term, and web scraping is data extraction applied to web pages, where automation makes it practical to obtain large amounts of data for analysis or storage.

What ethical considerations apply to web scraping?

Ethical web scraping entails respecting the website’s terms of service, making sure not to overload servers with requests, and considering the implications for user privacy. Properly conducted web scraping should avoid accessing sensitive information and respect data ownership rights to ensure compliance with legal and ethical standards.

How can Python be used for web scraping?

Python is a popular language for web scraping due to its readability and rich ecosystem of libraries. Requests handles HTTP requests, Beautiful Soup parses the returned HTML, and Scrapy combines requesting, parsing, and exporting data in one framework, which makes the scraping process more efficient and manageable for developers.

What tools are commonly used in Python web scraping?

Common tools for Python web scraping include Beautiful Soup for parsing HTML, Requests for making HTTP requests, and Scrapy, a framework for building full crawlers. These tools allow developers to extract specific data elements from web pages and handle various web scraping tasks effectively.

How is AI influencing the future of web scraping?

AI is revolutionizing web scraping by automating data extraction processes and improving the accuracy of data collection. Machine learning algorithms can enable smarter scraping strategies that adapt to website changes, enhancing both the speed and reliability of data extraction while minimizing manual intervention.

What are the legal implications of web scraping?

The legal implications of web scraping can vary by region and involve issues such as copyright infringement, data privacy laws, and terms of service violations. It is essential to understand and adhere to applicable laws and website policies to avoid potential legal disputes.

Can web crawling and web scraping be used interchangeably?

While often used interchangeably, web crawling refers to the process of systematically browsing the web, usually for indexing purposes (as done by search engines), whereas web scraping specifically involves extracting targeted data from websites. Both processes are essential to gather information online.

What challenges do developers face in ethical web scraping?

Developers face various challenges in ethical web scraping, including navigating CAPTCHAs, adhering to robots.txt guidelines, managing the risks of IP blocking, and ensuring compliance with legal regulations. Balancing these concerns while executing scraping operations can be complex.

| Key Point | Details |
|---|---|
| Techniques | Web scraping involves various techniques for collecting data from websites. |
| Ethical Implications | It’s crucial to address ethical concerns and respect website terms of service. |
| Tools | Common tools for web scraping include programming languages like Python and libraries such as Beautiful Soup. |
| Coding Practices | The article presents examples of coding practices used in web scraping. |
| Data Privacy | Respecting data privacy is essential when scraping data from websites. |
| Future Trends | The role of AI in automating web scraping processes is highlighted as a future trend. |

Summary

Web scraping is a vital technique used to extract data from websites efficiently. It encompasses a variety of methods and tools, such as Python and Beautiful Soup, while emphasizing the ethical considerations tied to data collection. Moreover, as technology advances, the integration of artificial intelligence into web scraping practices is expected to revolutionize the industry, making it essential for data professionals to stay informed about these trends.