Web scraping, the technique of extracting data from websites, has become an essential tool in today’s data-driven world. It automates data gathering, allowing businesses and researchers to collect large amounts of information for analysis and decision-making. Following established best practices lets users gather these insights ethically, without harming the sites they draw from. Popular tools such as Beautiful Soup and Scrapy support the process by parsing web pages and organizing the extracted data. Understanding how data extraction works both sharpens your skills and helps you use web resources responsibly.

Collecting data from online sources, variously called website scraping, data harvesting, web data extraction, or online data collection, plays a crucial role in modern analytics. The process uses automated scripts or bots to collect relevant information from many websites, streamlining what would otherwise be slow manual work. Just as important as gathering the information, however, is adhering to ethical scraping principles, which call for responsible use of the technology and respect for each website’s rules. By understanding and applying these principles, individuals can get the most out of their data collection without compromising ethical standards.

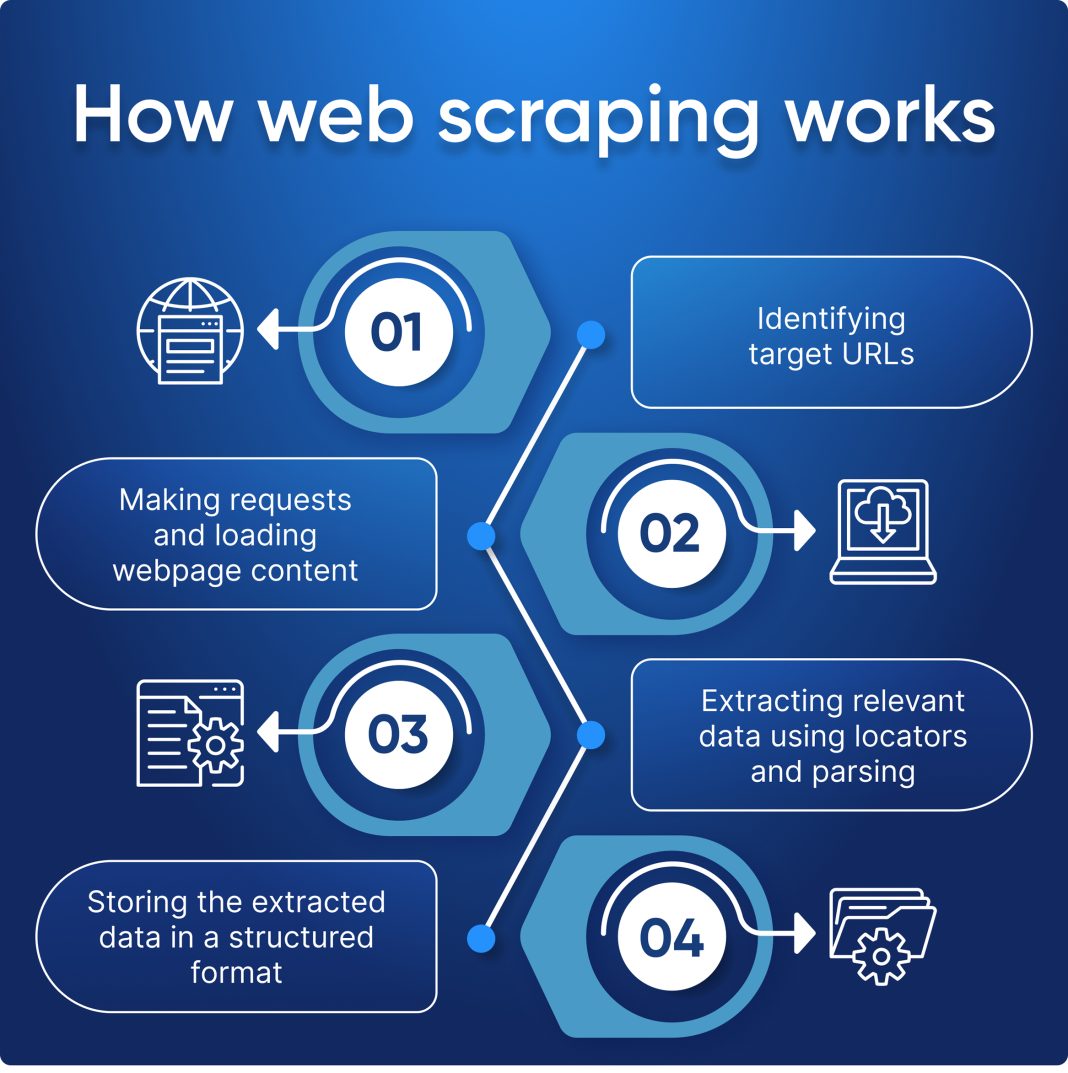

How Web Scraping Works: A Detailed Overview

Web scraping works through an exchange between a program (often called a bot) and a web server. The scraper sends an HTTP request for a page, much as a browser does when a person visits it, and receives the page’s HTML, which it then processes. Scripts can be programmed to follow links across many pages, fetching specific data points from each. This makes scraping particularly useful for gathering large volumes of data quickly, far outpacing manual copying.
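A minimal sketch of this request-and-follow-links cycle, using only Python’s standard library. It assumes the target pages are plain HTML that needs no JavaScript rendering; `fetch_html`, `extract_links`, and the `example-scraper/0.1` user-agent string are hypothetical names chosen for illustration.

```python
import urllib.request
from html.parser import HTMLParser
from urllib.parse import urljoin

# Hypothetical identifier; a real scraper should name itself honestly.
USER_AGENT = "example-scraper/0.1"


def fetch_html(url, timeout=10.0):
    """Send one GET request, as a browser would, and return the page's HTML as text."""
    request = urllib.request.Request(url, headers={"User-Agent": USER_AGENT})
    with urllib.request.urlopen(request, timeout=timeout) as response:
        # Fall back to UTF-8 when the server does not declare a charset.
        charset = response.headers.get_content_charset() or "utf-8"
        return response.read().decode(charset, errors="replace")


class _LinkCollector(HTMLParser):
    """Collects the href attribute of every <a> tag it sees."""

    def __init__(self):
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.hrefs.append(value)


def extract_links(html, base_url):
    """Return absolute URLs for all links on a page, i.e. the pages a crawler could visit next."""
    collector = _LinkCollector()
    collector.feed(html)
    collector.close()
    # urljoin resolves relative links such as "/page2" against the page they came from.
    return [urljoin(base_url, href) for href in collector.hrefs]
```

For example, `extract_links(fetch_html("https://example.com/"), "https://example.com/")` would list the absolute URLs linked from that page.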

Understanding these mechanics makes scraping more effective. Typically, scrapers use a parsing library such as Beautiful Soup, or a framework such as Scrapy, to parse the HTML responses received from servers. Parsing lets the scraper pinpoint relevant information, such as tables, text, or image URLs. Because each website structures its pages differently, a scraper usually needs site-specific extraction rules (for example, which elements or CSS classes hold the data) to extract data completely from more complex sites.
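As an illustration of pinpointing tabular data, the sketch below pulls the cell text out of every table row. To stay dependency-free it uses Python’s built-in `html.parser` rather than Beautiful Soup, and it assumes cells and rows have explicit closing tags; `TableRowParser` and `parse_table` are hypothetical names.

```python
from html.parser import HTMLParser


class TableRowParser(HTMLParser):
    """Collects the text of <td>/<th> cells, grouped into one list per <tr> row."""

    def __init__(self):
        super().__init__()
        self.rows = []     # finished rows, each a list of cell strings
        self._row = None   # cells of the row being read, or None outside <tr>
        self._cell = None  # text fragments of the current cell, or None

    def handle_starttag(self, tag, attrs):
        if tag == "tr":
            self._row = []
        elif tag in ("td", "th") and self._row is not None:
            self._cell = []

    def handle_data(self, data):
        # Text inside nested tags (e.g. <b>) still belongs to the current cell.
        if self._cell is not None:
            self._cell.append(data)

    def handle_endtag(self, tag):
        if tag in ("td", "th") and self._cell is not None and self._row is not None:
            self._row.append("".join(self._cell).strip())
            self._cell = None
        elif tag == "tr" and self._row is not None:
            self.rows.append(self._row)
            self._row = None
            self._cell = None


def parse_table(html):
    """Return every table row in the document as a list of cell texts."""
    parser = TableRowParser()
    parser.feed(html)
    parser.close()
    return parser.rows
```

With Beautiful Soup installed, a roughly equivalent extraction is `[[c.get_text(strip=True) for c in tr.find_all(["td", "th"])] for tr in BeautifulSoup(html, "html.parser").find_all("tr")]`, which is shorter and more forgiving of messy markup.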

Common Tools for Efficient Web Scraping

Choosing the right tool can greatly influence the efficiency and effectiveness of your data extraction. Beautiful Soup, for instance, is a favorite among Python developers because it simplifies HTML parsing, letting users start extracting data quickly. Scrapy, by contrast, is a more complete framework, handling crawling, request scheduling, and data processing pipelines, which suits larger projects with complex collection workflows.

Selenium stands out when a scraper must interact with dynamic content. Unlike static pages, dynamically generated pages often load or change content in response to user actions such as clicks and scrolling. Selenium drives a real web browser and can simulate these actions, exposing data that a plain HTTP request would miss. Matching the tool to the kind of page you are scraping in this way improves both data quality and retrieval speed.

Ethical Web Scraping: Guidelines to Follow

Ethical web scraping is essential for a sustainable, respectful approach to data extraction. Before starting any scraping project, check the target website’s robots.txt file, which states which parts of the site automated clients may and may not access. Respecting these rules reduces the scraper’s legal risk and protects the operational integrity of the service being scraped.
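Python’s standard library ships a robots.txt parser, `urllib.robotparser.RobotFileParser`. The sketch below parses an inline robots.txt (its rules are invented for the example) and asks whether a hypothetical agent named `example-scraper` may fetch two URLs. Against a live site you would instead call `set_url()` with the site’s robots.txt URL and then `read()`.

```python
from urllib.robotparser import RobotFileParser

# Example robots.txt content, invented for illustration.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 5
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

agent = "example-scraper"  # hypothetical user-agent name
for url in ("https://example.com/products", "https://example.com/private/data"):
    allowed = parser.can_fetch(agent, url)
    print(url, "allowed" if allowed else "disallowed")

# crawl_delay() returns the Crawl-delay that applies to this agent, or None if unset.
print("crawl delay:", parser.crawl_delay(agent))
```

A scraper would typically run this check once per site before queuing any URLs, and skip every URL for which `can_fetch` returns `False`.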

Many websites use rate limiting to cap the number of requests from a single source, safeguarding server performance. Ethical scrapers pause between requests and limit concurrent connections so they do not overwhelm the server. By adhering to these standards, scrapers can collect data without impairing the source websites or infringing on their owners’ rights.
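Both habits can be expressed in a few lines of Python. In this sketch (the `PoliteThrottle` class and `polite_map` helper are hypothetical names), a lock-protected throttle enforces a minimum gap between request starts, and a thread pool’s `max_workers` caps how many requests run at once. The interval is an assumption; if the site’s robots.txt specifies a `Crawl-delay`, that value is a sensible starting point.

```python
import threading
import time
from concurrent.futures import ThreadPoolExecutor


class PoliteThrottle:
    """Enforces a minimum delay between the starts of consecutive requests."""

    def __init__(self, min_interval):
        self.min_interval = min_interval  # seconds between request starts
        self._lock = threading.Lock()
        self._last = None  # time.monotonic() of the previous request, if any

    def wait(self):
        """Block until min_interval seconds have passed since the previous call."""
        # Holding the lock while sleeping makes concurrent callers queue up,
        # so the spacing holds across threads.
        with self._lock:
            if self._last is not None:
                remaining = self.min_interval - (time.monotonic() - self._last)
                if remaining > 0:
                    time.sleep(remaining)
            self._last = time.monotonic()


def polite_map(func, items, throttle, max_workers=2):
    """Apply func to each item with at most max_workers calls in flight, each throttled."""

    def throttled(item):
        throttle.wait()
        return func(item)

    # max_workers caps the number of concurrent connections to the server.
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(throttled, items))
```

For example, `polite_map(fetch, urls, PoliteThrottle(2.0))`, where `fetch` is any function that downloads a single URL, keeps at most two connections open and starts requests at least two seconds apart.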

Best Practices for Successful Web Scraping

To get the best results from web scraping, follow established best practices. First and foremost, comply with the GDPR and other data protection regulations, particularly when the data you extract includes personal information. Collect only the data you actually need; this data minimization reduces the risk of breaching privacy laws.
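An allow-list is one simple way to apply data minimization in code: keep only the fields your analysis needs and drop everything else before a record is stored. The field names in this sketch are hypothetical, and deciding which fields count as personal data remains a legal judgment rather than something the code can determine.

```python
# Hypothetical schema: the only fields this analysis actually needs.
REQUIRED_FIELDS = ("product", "price", "currency")


def minimize(record):
    """Return a copy of a scraped record containing only REQUIRED_FIELDS."""
    # Anything not on the allow-list (e.g. reviewer names or emails) is dropped
    # before the record is stored.
    return {field: record[field] for field in REQUIRED_FIELDS if field in record}
```

Applying `minimize` at the point of collection, rather than after storage, means unneeded personal data never reaches your database.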

Keeping scraping scripts clean and well organized makes them easier to maintain. Error handling and logging help you diagnose problems that arise during scraping, such as timeouts, server errors, or unexpected page layouts. Finally, update your scrapers as target websites change; this keeps data collection running over the long term with fewer disruptions.
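A sketch of error handling with logging: the hypothetical `fetch_with_retries` helper calls any fetch function, logs each failure, and retries with exponential backoff before giving up. Catching every `Exception` keeps the sketch short; a real scraper would catch only the network and HTTP errors it expects.

```python
import logging
import time

logging.basicConfig(level=logging.INFO, format="%(asctime)s %(levelname)s %(message)s")
log = logging.getLogger("scraper")


def fetch_with_retries(fetch, url, attempts=3, backoff=1.0):
    """Call fetch(url); on failure, log it and retry after backoff * 1, 2, 4, ... seconds."""
    for attempt in range(1, attempts + 1):
        try:
            result = fetch(url)
        except Exception as exc:  # narrow to expected network/HTTP errors in real code
            log.warning("attempt %d/%d for %s failed: %s", attempt, attempts, url, exc)
            if attempt == attempts:
                log.error("giving up on %s", url)
                raise
            time.sleep(backoff * 2 ** (attempt - 1))
        else:
            log.info("fetched %s on attempt %d", url, attempt)
            return result
```

Because every failure is logged with the URL and attempt number, a log review can show whether errors are transient (succeeding on retry) or persistent, which often signals that the site’s layout or availability has changed.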

Utilizing Web Scraping for Data Analysis

Web scraping is an invaluable asset for data analysis, enabling researchers and businesses to compile datasets from multiple sources. Automated data gathering lets users quickly collect the information they need for insights or business intelligence. For example, a competitor analysis might scrape prices and product descriptions from several online retailers to build a comprehensive view of the market.
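Once each retailer’s prices have been scraped, comparing them is ordinary data processing. In this sketch the retailer names and prices are invented, and the data is assumed to be already normalized to one currency and to matching product names, which in practice is often the hardest step.

```python
# Hypothetical scraped data: retailer -> {product name: price}.
scraped = {
    "shop-a": {"Widget": 9.99, "Gadget": 24.50},
    "shop-b": {"Widget": 8.75, "Gadget": 26.00, "Gizmo": 5.25},
    "shop-c": {"Widget": 10.49, "Gizmo": 4.99},
}


def cheapest_by_product(data):
    """For each product, return (retailer, price) of the lowest observed price."""
    best = {}
    for retailer, prices in data.items():
        for product, price in prices.items():
            if product not in best or price < best[product][1]:
                best[product] = (retailer, price)
    return best


for product, (retailer, price) in sorted(cheapest_by_product(scraped).items()):
    print(f"{product}: {retailer} at {price:.2f}")
```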

As data analysis techniques evolve, combining web scraping with data visualization tools can yield deeper insights. Consolidating data scraped from several platforms helps reveal trends and correlations that inform decision-making, helping businesses stay ahead of market trends and shifting customer preferences.
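Consolidation often means flattening per-source results into one table with a column recording where each row came from, then exporting it in a format such as CSV that spreadsheet and visualization tools can import. The source names and records below are hypothetical.

```python
import csv
import io

# Hypothetical per-platform results, e.g. from separate scrapers.
by_source = {
    "shop-a": [{"product": "Widget", "price": 9.99}],
    "shop-b": [{"product": "Widget", "price": 8.75}, {"product": "Gizmo", "price": 5.25}],
}


def consolidate(results_by_source):
    """Flatten {source: [records]} into one list of rows tagged with their source."""
    return [
        {"source": source, **record}
        for source, records in results_by_source.items()
        for record in records
    ]


def to_csv(rows, fieldnames):
    """Serialize rows to CSV text, a format most visualization tools can import."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=fieldnames)
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


print(to_csv(consolidate(by_source), ["source", "product", "price"]))
```

Keeping one row per observation (this "long" layout) lets a visualization tool group or filter by source or product without any reshaping.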

The Future of Web Scraping: Trends and Innovations

The web scraping landscape keeps changing as technology advances. AI and machine learning are increasingly being integrated into scraping tools, enabling more sophisticated extraction methods. Predictive analytics built on these technologies can help anticipate data trends, letting businesses target their scraping strategies more precisely.

The rise of headless browsers is also transforming scraping practice. Tools like Puppeteer control a browser programmatically, navigating pages as a real user would and executing the JavaScript that many pages need to render their content. As web content becomes more dynamic and interactive, such tools will become essential for keeping scraped data accurate and relevant.

Web Scraping for Competitive Analysis

In today’s fast-paced markets, organizations use web scraping for competitive analysis. By gathering data on competitor pricing, promotions, and product offerings, businesses can adjust their strategies to maintain a competitive edge. Running automated collection on a regular schedule keeps these insights current.

This data-driven approach gives a comprehensive view of market behavior, helping companies identify gaps and opportunities within their sector. Monitoring competitor changes in near real time also enables agile responses to market shifts. Here, too, ethical scraping practices matter: staying competitive should never mean crossing legal or ethical boundaries.
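Near-real-time monitoring typically means re-scraping on a schedule and comparing each new snapshot with the previous one. A minimal sketch, assuming each snapshot has already been reduced to a `{product: price}` dictionary (a hypothetical format); the scheduling itself, whether cron, a task queue, or something else, is left out.

```python
def diff_prices(previous, current):
    """Compare two {product: price} snapshots and report additions, removals, and changes."""
    changes = {"added": [], "removed": [], "changed": []}
    # dict key views support set operations, so new and vanished products are simple differences.
    for product in sorted(current.keys() - previous.keys()):
        changes["added"].append((product, current[product]))
    for product in sorted(previous.keys() - current.keys()):
        changes["removed"].append((product, previous[product]))
    for product in sorted(current.keys() & previous.keys()):
        if current[product] != previous[product]:
            changes["changed"].append((product, previous[product], current[product]))
    return changes


# Hypothetical snapshots of one competitor's prices on consecutive days.
yesterday = {"Widget": 9.99, "Gadget": 24.50, "Gizmo": 5.25}
today = {"Widget": 8.99, "Gadget": 24.50, "Doohickey": 3.00}
print(diff_prices(yesterday, today))
# {'added': [('Doohickey', 3.0)], 'removed': [('Gizmo', 5.25)], 'changed': [('Widget', 9.99, 8.99)]}
```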

Web Scraping Tools: An Overview of Popular Options

Several web scraping tools dominate the field, each suited to specific needs and skill levels. Beautiful Soup offers straightforward, effective data extraction, particularly for smaller tasks and quick scrapes. For more extensive operations, Scrapy provides a powerful framework for building complex crawlers that harvest large datasets efficiently.

Selenium, known for handling dynamic web content, is well suited to websites that rely heavily on client-side JavaScript. Combining these tools appropriately lets users get the most from their data extraction while following best practices and staying ethically compliant throughout the scraping process.

Challenges and Solutions in Web Scraping

Despite its benefits, web scraping comes with its own set of challenges. A major one is that websites frequently change their structure, which can break scrapers and halt data collection. To counter this, developers should write flexible scraping scripts that tolerate minor changes in HTML structure, and schedule regular maintenance and updates.
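One way to make a scraper tolerate minor markup changes is to try several selectors in order and use the first that matches. The sketch below does this for CSS class names with the standard library’s `html.parser`; the class names and the helpers `ClassTextCollector` and `extract_first` are hypothetical, and it assumes reasonably well-formed HTML. Libraries like Beautiful Soup make each lookup shorter, but the fallback logic is the same.

```python
from html.parser import HTMLParser


class ClassTextCollector(HTMLParser):
    """Collects the text of every element whose class attribute contains target_class."""

    def __init__(self, target_class):
        super().__init__()
        self.target_class = target_class
        self.matches = []
        self._tag = None   # tag name of the matching element we are inside, if any
        self._depth = 0    # nesting level of same-named tags inside that element
        self._buffer = []

    def handle_starttag(self, tag, attrs):
        if self._tag is not None:
            if tag == self._tag:
                self._depth += 1
            return
        # The class attribute may list several space-separated names, or be valueless.
        classes = (dict(attrs).get("class") or "").split()
        if self.target_class in classes:
            self._tag, self._depth, self._buffer = tag, 1, []

    def handle_endtag(self, tag):
        if self._tag is not None and tag == self._tag:
            self._depth -= 1
            if self._depth == 0:
                self.matches.append("".join(self._buffer).strip())
                self._tag = None

    def handle_data(self, data):
        if self._tag is not None:
            self._buffer.append(data)


def extract_first(html, candidate_classes):
    """Try each candidate class in order; return the first match's text, or None."""
    for css_class in candidate_classes:
        collector = ClassTextCollector(css_class)
        collector.feed(html)
        collector.close()
        if collector.matches:
            return collector.matches[0]
    return None


# Hypothetical: a redesign renamed the "price" class to "product-price".
# Listing the new name first and the old one as a fallback keeps the scraper working.
PRICE_CLASSES = ["product-price", "price"]
```

When `extract_first` returns `None` for every candidate, that is a useful signal to log: the page has probably changed beyond what the fallbacks cover and the scraper needs maintenance.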

Additionally, some websites deploy anti-scraping measures such as CAPTCHAs or IP blocking. Rotating proxies and CAPTCHA-solving services are commonly used to get around these measures, but circumventing them can violate a site’s terms of service, so check those terms first. An approach that respects the site’s terms, for example by slowing down, asking permission, or using an official API where one exists, leads to more sustainable scraping.

Frequently Asked Questions

What is web scraping and how is it used for data extraction?

Web scraping is the process of extracting data from websites using automated tools or scripts. It is applied in various fields such as data analysis, competitive intelligence, and monitoring website updates. By utilizing web scraping techniques, users can gather valuable data for insights and decision-making.

What are the best web scraping practices to follow?

To ensure ethical web scraping, respect a website’s terms of service and check its robots.txt file for permissions. Also rate-limit your requests, pausing between them and limiting concurrent connections, so that extracting the information you need does not overload the server.

What tools are commonly used for automated data gathering in web scraping?

Several popular tools support automated data gathering in web scraping: Beautiful Soup for parsing HTML in Python, Scrapy for building full-featured web crawlers, and Selenium for scraping dynamic web pages. These tools simplify the data extraction process and make it more efficient.

How does ethical web scraping differ from unauthorized scraping?

Ethical web scraping involves adhering to a site’s guidelines, such as those outlined in the robots.txt file, and obtaining permission when necessary. In contrast, unauthorized scraping disregards these rules and can lead to IP bans, legal issues, and harm to the website’s performance.

What are some common use cases for web scraping?

Web scraping is commonly used for price comparison, market research, sentiment analysis, and data aggregation for reporting. By extracting data from multiple sources, businesses can uncover insights and trends that inform their strategies.

What programming languages are best suited for web scraping?

Python is one of the most popular languages for web scraping thanks to robust libraries such as Beautiful Soup and Scrapy. Other languages work well too, including JavaScript (for example, with Puppeteer) and Ruby.

How can I prevent getting blocked while web scraping?

To minimize the risk of being blocked while web scraping, use rotating proxies, rate-limit your requests to space them out, and randomize user-agent strings, although disguising your scraper this way may conflict with a site’s terms. Following ethical scraping practices and respecting a site’s usage limits also makes blocking less likely.

What should I do if a website does not allow web scraping?

If a website explicitly prohibits web scraping, it is best to respect its policies and seek alternative data sources. You may also consider reaching out to the website owner to request permission to access specific data or explore APIs that provide the same information legally.

| Key Point | Details |
|---|---|
| Definition of Web Scraping | Web scraping is the process of extracting data from websites. |
| How Web Scraping Works | Bots request web pages and extract information from the responses; the work can be automated with libraries such as Beautiful Soup (HTML parsing) or Puppeteer (browser automation). |
| Common Tools | Beautiful Soup: a Python library for parsing HTML; Scrapy: an open-source framework for crawling sites and extracting data; Selenium: a browser-automation tool for testing and scraping dynamic pages. |
| Ethics and Best Practices | Check a website’s robots.txt for scraping permissions and follow the site’s terms to maintain integrity and avoid overloading its servers. |

Summary

Web scraping is an essential technique for extracting valuable data across various sectors. By grasping the fundamental aspects and adhering to ethical standards, users can leverage web scraping effectively to enhance data analysis and competitive intelligence.