Web scraping is the automated extraction of data from websites. Developers use it to gather information for analysis, market research, or content generation. Python is a common first choice because of its readable syntax and mature scraping libraries, while JavaScript tooling is well suited to collecting data from dynamic web pages. Automating data collection this way saves time and can reveal opportunities for optimizing business processes and understanding consumer behavior.

Data extraction from web pages, also called web harvesting or data scraping, lets individuals and businesses put online content to work for analytics, research, or competitor benchmarking. Python and JavaScript both offer libraries that streamline the process of retrieving and using that information. This post covers the main scraping techniques, the languages most often used for them, and how scraping fits into task automation.

Understanding Web Scraping Techniques

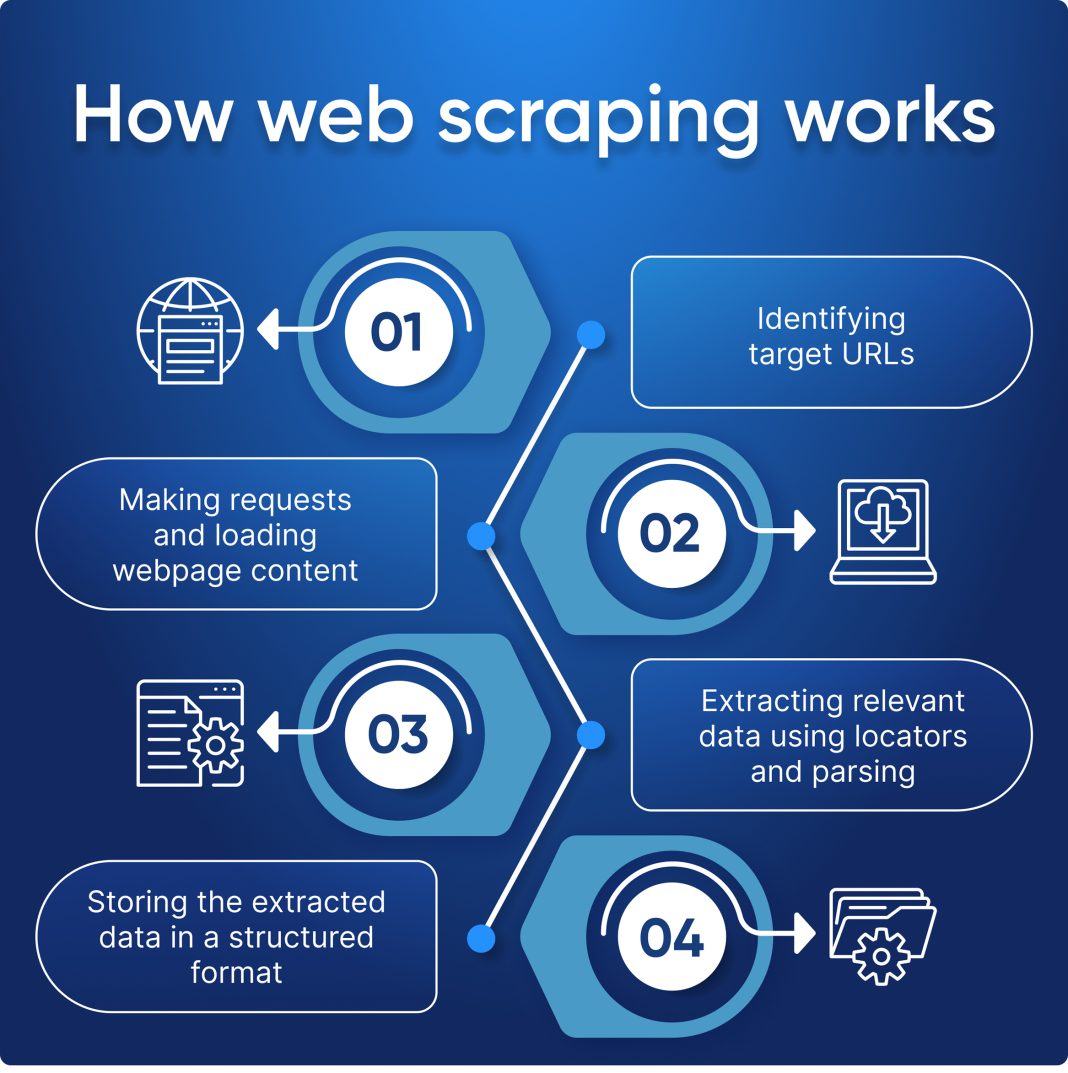

Web scraping refers to the automated process of extracting information from websites using software. Various techniques can be employed in web scraping, including HTML parsing, DOM navigation, and utilizing APIs. These techniques help developers collect data from numerous sources quickly, which can be invaluable for research, competitive analysis, or even building comprehensive datasets for machine learning.

Among the popular web scraping techniques, HTML parsing is one of the most widely used. It involves extracting data by analyzing the HTML structure of web pages. Libraries and frameworks, such as Beautiful Soup for Python or Cheerio for JavaScript, simplify the HTML parsing process, making it easier to retrieve specific data elements like tables, images, or textual information.
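As a rough illustration of HTML parsing, the sketch below pulls the rows out of a small table. It deliberately uses Python's standard-library `html.parser` rather than Beautiful Soup so that it runs without extra installs; the class name `TableCellParser`, the helper `extract_table_rows`, and the sample markup are all assumptions made up for this example.

```python
from html.parser import HTMLParser


class TableCellParser(HTMLParser):
    """Collect the text of every <td>/<th> cell, grouped by <tr> row."""

    def __init__(self):
        super().__init__()
        self.rows = []     # finished rows, each a list of cell strings
        self._row = None   # cells of the row currently being read
        self._cell = None  # text fragments of the cell currently being read

    def handle_starttag(self, tag, attrs):
        if tag == "tr":
            self._row = []
        elif tag in ("td", "th") and self._row is not None:
            self._cell = []

    def handle_data(self, data):
        # Only keep text that appears inside an open cell.
        if self._cell is not None:
            self._cell.append(data)

    def handle_endtag(self, tag):
        if tag in ("td", "th") and self._cell is not None:
            self._row.append("".join(self._cell).strip())
            self._cell = None
        elif tag == "tr" and self._row is not None:
            self.rows.append(self._row)
            self._row = None
            self._cell = None


def extract_table_rows(html):
    """Return the table content of `html` as a list of rows of strings."""
    parser = TableCellParser()
    parser.feed(html)
    parser.close()
    return parser.rows


# Hypothetical sample markup standing in for a downloaded page.
SAMPLE_HTML = """
<table>
  <tr><th>Product</th><th>Price</th></tr>
  <tr><td>Widget</td><td>9.99</td></tr>
  <tr><td>Gadget</td><td>24.50</td></tr>
</table>
"""

rows = extract_table_rows(SAMPLE_HTML)
```

A dedicated library such as Beautiful Soup or Cheerio would replace most of the parser class with a few selector calls; the event-driven version here is only meant to show what "analyzing the HTML structure" involves.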

Best Programming Languages for Web Scraping

When it comes to web scraping, the choice of programming language affects how quickly you can build and maintain a scraper. Python is often considered the go-to language due to its simplicity and rich ecosystem of libraries: Requests handles HTTP requests, Beautiful Soup parses HTML and XML documents, and Scrapy is a full framework built specifically for crawling and scraping. Together they let developers focus on data extraction rather than on low-level networking and parsing details.

JavaScript is another strong choice, particularly where dynamic content is present. Many modern web applications render their content with JavaScript frameworks, so scrapers often need a browser automation tool such as Puppeteer (a Node.js library) or Selenium (which has bindings for JavaScript, Python, and other languages). These tools can simulate user interactions and wait for the page to finish rendering before scraping, so the data retrieved matches what a user would see.

Scraping Data with Python

Python is one of the most popular choices for scraping data, thanks to its simplicity and the wide variety of libraries available for the task. Beautiful Soup parses HTML and XML documents, while Requests simplifies making HTTP requests. Together they make data extraction from static web pages straightforward, and Python's flexibility makes it quick to adapt a scraping script from one website to another.
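The fetch-then-parse flow for a static page can be sketched as follows. To stay dependency-free, this version swaps in the standard library (`urllib.request` in place of Requests, `html.parser` in place of Beautiful Soup); the function names, the `example-scraper/0.1` user agent, and the example URL in the comment are assumptions for illustration only.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin
from urllib.request import Request, urlopen


def fetch_html(url, timeout=10):
    """Download a page and decode it to text (stdlib stand-in for Requests)."""
    request = Request(url, headers={"User-Agent": "example-scraper/0.1"})
    with urlopen(request, timeout=timeout) as response:
        charset = response.headers.get_content_charset() or "utf-8"
        return response.read().decode(charset, errors="replace")


class LinkParser(HTMLParser):
    """Collect (link text, absolute URL) pairs for every <a href=...>."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.links = []
        self._href = None  # URL of the anchor currently open, if any
        self._text = []    # text fragments seen inside that anchor

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                # Resolve relative links against the page they came from.
                self._href = urljoin(self.base_url, href)
                self._text = []

    def handle_data(self, data):
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            self.links.append(("".join(self._text).strip(), self._href))
            self._href = None


def extract_links(html, base_url):
    """Parse already-downloaded HTML and return its links."""
    parser = LinkParser(base_url)
    parser.feed(html)
    parser.close()
    return parser.links


# Typical use (not executed here because it needs network access):
#   page_url = "https://example.com/"   # hypothetical target
#   links = extract_links(fetch_html(page_url), page_url)
```

Keeping the download and the parsing in separate functions means the parsing logic can be exercised against saved HTML without touching the network.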

Moreover, Python can handle websites that use JavaScript to load content dynamically. With a library such as Selenium, developers can automate browser actions, which is particularly useful for scraping data that is not present in the initial page source. This capability makes it possible to scrape more complex websites and gather a wider range of data.

JavaScript Web Scraping: A Dynamic Approach

JavaScript web scraping is gaining traction as more websites adopt frameworks like React and Angular that render content dynamically. On these sites, classic scraping methods may fail to retrieve the necessary data because the HTML is generated by JavaScript on the client side and is absent from the initial server response. Browser-based scraping addresses this by automating a real browser and waiting for the content to load before extracting anything.

Puppeteer and Playwright are browser automation libraries that are widely used for web scraping with JavaScript, providing a straightforward interface for controlling headless browsers. With these tools, developers can navigate through pages, fill out forms, and click on elements much as a human would, so dynamically loaded content is not missed. This approach matters on today's web, where much of the most valuable data only appears after JavaScript has run.
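The "wait for the content to load, then scrape" step boils down to polling with a timeout. The sketch below shows that pattern on its own, in Python and without any browser, so it can be run anywhere; `wait_until` and `FakePage` are hypothetical names invented here, and `FakePage` merely simulates a page whose items appear after a few checks. Real tools ship their own wait helpers, which should be preferred in practice.

```python
import time


def wait_until(condition, timeout=10.0, interval=0.25):
    """Call condition() repeatedly until it returns a truthy value.

    Returns that value, or raises TimeoutError once `timeout` seconds
    have passed without success.
    """
    deadline = time.monotonic() + timeout
    while True:
        result = condition()
        if result:
            return result
        if time.monotonic() >= deadline:
            raise TimeoutError(f"condition not met within {timeout} seconds")
        time.sleep(interval)


class FakePage:
    """Stand-in for a browser page whose content shows up after some polls."""

    def __init__(self, ready_after):
        self.polls = 0
        self.ready_after = ready_after

    def query_items(self):
        # An empty list means "not rendered yet".
        self.polls += 1
        if self.polls >= self.ready_after:
            return ["item-1", "item-2"]
        return []


page = FakePage(ready_after=3)
items = wait_until(page.query_items, timeout=2.0, interval=0.01)
```

With a real headless browser, the condition would be a query against the live page, such as checking whether a particular element exists yet.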

Automating Tasks with Web Scraping

Web scraping is not only about gathering data; it can also automate repetitive tasks. For instance, web scrapers can be set up to regularly extract pricing data from e-commerce websites, monitor stock levels, or pull in news articles from specified sources. With this automation in place, businesses can free their staff from mundane tasks and let them focus on higher-level strategic activities.

Furthermore, automating data extraction with web scraping can keep data close to real time, which is valuable for competitive analysis and decision-making. By pairing scraping scripts with a scheduler such as cron on Unix and Linux systems, developers can have data refreshed automatically at defined intervals, providing up-to-date insights without manual intervention.
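A scheduled scraper is usually just a short script that does one unit of work each time cron invokes it. The sketch below appends one timestamped reading to a CSV file per run; the function name `append_snapshot`, the column names, the file paths, and the hourly crontab line in the comment are all assumptions, and `fetch_price` stands for whatever scraping function you already have.

```python
import csv
from datetime import datetime, timezone
from pathlib import Path

# Hypothetical crontab entry that would run this script at the top of every hour:
#   0 * * * * /usr/bin/python3 /path/to/price_snapshot.py


def append_snapshot(csv_path, fetch_price, now=None):
    """Append one timestamped reading to csv_path, adding a header on first use.

    `fetch_price` is any zero-argument callable that returns the scraped value.
    `now` can be passed in to make the timestamp predictable in tests.
    """
    path = Path(csv_path)
    is_new = not path.exists()
    timestamp = (now or datetime.now(timezone.utc)).isoformat()
    row = [timestamp, fetch_price()]
    with path.open("a", newline="", encoding="utf-8") as handle:
        writer = csv.writer(handle)
        if is_new:
            writer.writerow(["fetched_at", "price"])
        writer.writerow(row)
    return row
```

Because each run only appends, the CSV file grows into a history of readings that can later be loaded for trend analysis.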

Frequently Asked Questions

What is web scraping and how does it work?

Web scraping is the process of automatically extracting data from websites. It works by sending requests to web servers and parsing the returned HTML or XML to pull out specific data points.

What are the best programming languages for web scraping?

The best programming languages for web scraping include Python, due to its simplicity and powerful libraries like Beautiful Soup and Scrapy, as well as JavaScript for handling dynamic content through tools like Puppeteer.

How can I scrape data with Python effectively?

To scrape data with Python effectively, use libraries such as Beautiful Soup for parsing HTML, Requests for making web requests, and Scrapy for building robust scraping applications.

Can I use JavaScript for web scraping?

Yes, JavaScript can be used for web scraping, especially for dynamic websites. Tools like Puppeteer, or Selenium through its JavaScript bindings, allow you to interact with web pages as a user would, making it easier to scrape data that is loaded asynchronously.

How can I automate tasks with web scraping?

You can automate tasks with web scraping by scheduling your scraping scripts with cron jobs on Linux, or by using scheduling code in the language itself, such as Python's standard-library `sched` module. Either way, the scripts run at specified intervals to fetch and process web data consistently.

| Key Point | Details |
|---|---|
| Introduction | This blog post introduces the concept of web scraping. |
| Programming Languages | Languages discussed are Python and JavaScript. |
| Next Steps | Stay tuned for more updates. |

Summary

Web scraping is an essential technique for gathering data from websites. It allows developers to automate the collection of information, making it easier to analyze and use in various applications. In this post, we covered the main scraping techniques, the two languages best suited to the task (Python and JavaScript), and ways to automate data collection. Stay tuned for detailed guides and resources on how to implement web scraping effectively.