Web scraping is an essential technique for individuals and businesses looking to extract valuable insights from the vast ocean of information available on the internet. This data extraction method involves fetching web pages and harvesting useful information for various purposes such as competitive intelligence and market research. With the right scraping tools at your disposal, you can efficiently gather significant amounts of data for analysis, leading to informed decision-making and strategic planning. By leveraging effective data collection techniques, organizations can decipher trends and understand customer preferences, ultimately enhancing their operational strategies. In this article, we will delve into the nuances of web scraping, highlighting its advantages, popular tools, and best practices to optimize your data acquisition process.

When discussing automatic data gathering from websites, many refer to it as web harvesting or web data extraction. The term data mining is also used loosely, although strictly speaking it describes the analysis of data sets rather than their collection. This approach not only facilitates the extraction of information from multiple sources but also plays a crucial role in analytical endeavors like competitor evaluation and market analysis. Various data scraping methodologies have emerged, enabling businesses to streamline their research processes and make better-informed decisions. By employing automated systems for data retrieval, organizations can capitalize on emerging trends and gain competitive advantages. Throughout this discussion, we will explore these methodologies, emphasizing their applications and benefits.

The Advantages of Web Scraping for Data Collection

Web scraping is an incredibly efficient method for data collection, enabling businesses to extract vast amounts of information from websites in a fraction of the time it would take to gather manually. By automating the data extraction process, companies can focus on analyzing the data instead of spending hours or even days on collection. This rapid gathering of information becomes instrumental in areas such as market research and trend analysis, allowing businesses to make informed decisions backed by comprehensive data.

In addition to speed, web scraping offers versatility in terms of data sources. Companies can target multiple sites simultaneously, pulling valuable insights from various locations on the internet. For instance, a retailer may scrape competitor websites to analyze price fluctuations, product availability, and consumer reviews, equipping them with the necessary competitive intelligence to adjust their marketing strategies effectively.

Enhancing Market Research with Data Extraction Techniques

Market research is an essential component for any business looking to thrive in a competitive landscape. By employing data extraction techniques through web scraping, organizations can analyze users’ search behavior, preferences, and purchasing trends. This information can be gathered from forums, e-commerce sites, and social media platforms, leading to a nuanced understanding of what drives consumer choices. Such insight is invaluable for tailoring products and marketing strategies to better serve target audiences.

Moreover, data extraction through web scraping allows businesses to identify emerging trends and shifts in consumer behavior based on real-time data. For instance, by continuously tracking online reviews and feedback, companies can adjust their product offerings or services to meet the changing demands of their customer base. This proactive approach to market research not only strengthens customer relations but also enhances overall business performance.

Choosing the Right Scraping Tools for Effective Data Collection

Selecting the right tools for web scraping can significantly impact the quality and efficiency of data collection efforts. Various scraping tools offer unique capabilities, from simple libraries like Beautiful Soup for quick HTML parsing to complex frameworks such as Scrapy designed for large-scale web scraping projects. Businesses need to assess their specific requirements and choose tools that fit their technical skills and data needs.
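To make the idea of HTML parsing concrete, here is a minimal sketch that pulls product names and prices out of a small page. It uses Python’s standard-library `html.parser` module as a stand-in so that it runs without installing anything; Beautiful Soup would express the same extraction more compactly. The markup, the `name` and `price` class names, and the `ProductParser` and `parse_products` helpers are assumptions made up for illustration.

```python
from html.parser import HTMLParser


class ProductParser(HTMLParser):
    """Collect the text of <span class="name"> and <span class="price"> pairs."""

    def __init__(self):
        super().__init__()
        self.products = []   # finished {"name": ..., "price": ...} dicts
        self._field = None   # field currently being read, if any
        self._current = {}   # product being assembled

    def handle_starttag(self, tag, attrs):
        css_class = dict(attrs).get("class")
        if tag == "span" and css_class in ("name", "price"):
            self._field = css_class

    def handle_data(self, data):
        if self._field is not None:
            self._current[self._field] = data.strip()

    def handle_endtag(self, tag):
        if tag == "span" and self._field is not None:
            self._field = None
            # A product is complete once both fields have been seen.
            if "name" in self._current and "price" in self._current:
                self.products.append(self._current)
                self._current = {}


def parse_products(html):
    """Return a list of product dicts found in the given HTML string."""
    parser = ProductParser()
    parser.feed(html)
    return parser.products


# Hypothetical markup; a real page would be fetched over HTTP first.
SAMPLE_HTML = """
<ul>
  <li><span class="name">Kettle</span> <span class="price">24.99</span></li>
  <li><span class="name">Toaster</span> <span class="price">31.50</span></li>
</ul>
"""

print(parse_products(SAMPLE_HTML))
# [{'name': 'Kettle', 'price': '24.99'}, {'name': 'Toaster', 'price': '31.50'}]
```

The parser keeps prices as strings on purpose: converting and validating values is a separate cleaning step, which keeps extraction logic simple when a page layout changes.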

Additionally, tools like Selenium can be invaluable for scraping data from dynamic websites that rely heavily on JavaScript. By using Selenium, businesses can navigate and interact with webpages as if they were users, enabling them to extract data from elements that are typically challenging to access. The right tool can enhance not just data extraction but also the overall analytic performance, allowing for better insights from the collected data.

Best Practices for Ethical Web Scraping

While web scraping is an effective method of data collection, it’s crucial to follow best practices to ensure ethical usage. One primary consideration is to respect a site’s `robots.txt` file, which tells automated clients which paths the site operator asks them not to crawl. The file is advisory rather than a grant of legal permission, so it should be read alongside the site’s terms of service. By adhering to these guidelines, businesses can reduce the risk of legal issues and maintain a positive relationship with data sources.
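As a rough illustration of a `robots.txt` check, the sketch below uses Python’s standard-library `urllib.robotparser`. To stay runnable offline it parses an inline example file; in practice you would point the parser at the site’s real file with `set_url()` and `read()`. The rules, the `example-bot` user agent, and the `build_rules` helper are assumptions for the example.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, inlined so the example needs no network.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 5
"""


def build_rules(robots_text):
    """Parse robots.txt text and return a parser that can answer queries."""
    rules = RobotFileParser()
    rules.parse(robots_text.splitlines())
    return rules


rules = build_rules(ROBOTS_TXT)

print(rules.can_fetch("example-bot", "https://example.com/products"))        # True
print(rules.can_fetch("example-bot", "https://example.com/private/report"))  # False
print(rules.crawl_delay("example-bot"))                                      # 5
```

Running the check before every request, rather than once per project, keeps the scraper in step with a file that the site operator may change at any time.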

Another best practice involves implementing responsible scraping techniques to prevent overwhelming websites with requests. By including delays between scraping cycles and limiting the frequency of data requests, scrapers can minimize server load and reduce the risk of being blocked or banned. Maintaining ethical standards not only enhances the sustainability of web scraping efforts but also promotes cooperation with webmasters and content providers.
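The delay idea can be sketched in a few lines. The `fetch_politely` function below is a hypothetical helper, not part of any library: it takes the actual download function as a parameter, so the throttling logic can be shown (and tested) without touching the network. The one-second default is only an assumed starting point; a site’s own crawl-delay guidance should take priority.

```python
import time


def fetch_politely(urls, fetch, delay_seconds=1.0, sleep=time.sleep):
    """Call fetch(url) for each URL, pausing between consecutive requests."""
    results = {}
    for index, url in enumerate(urls):
        if index > 0:
            sleep(delay_seconds)  # pause before every request except the first
        results[url] = fetch(url)
    return results


def fake_fetch(url):
    # Hypothetical stand-in for a real HTTP request.
    return f"<html>content of {url}</html>"


pages = fetch_politely(
    ["https://example.com/a", "https://example.com/b"],
    fake_fetch,
    delay_seconds=0.1,
)
print(len(pages))  # 2
```

Passing `sleep` as a parameter is a small design choice that lets a test replace the real pause with a recorder, so the pacing can be verified without waiting.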

Leveraging Web Scraping for Competitive Intelligence

In today’s fast-paced business environment, competitive intelligence is key to staying ahead. Web scraping serves as a powerful tool for gathering insights about competitors, such as their pricing structures, product launches, and marketing strategies. By continuously monitoring competitors’ websites, businesses can make data-driven decisions that influence their own strategic direction.

Moreover, web scraping facilitates real-time tracking of competitor movements in the market. For instance, if a competitor introduces a new product or makes a major change in pricing, businesses can quickly adapt their offerings to remain relevant and competitive. This not only helps in staying informed but also in seizing market opportunities before they dissipate, directly influencing profitability and growth.
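One simple way to spot such changes is to compare the latest scrape against the previous one. The sketch below assumes each scrape has already been reduced to a dictionary mapping product name to price in cents; the `price_changes` function and the sample data are hypothetical.

```python
def price_changes(previous, current):
    """Compare two {product: price_in_cents} snapshots and report differences."""
    changes = {}
    for product, new_price in current.items():
        if product not in previous:
            changes[product] = ("new", None, new_price)
        elif previous[product] != new_price:
            changes[product] = ("changed", previous[product], new_price)
    for product, old_price in previous.items():
        if product not in current:
            changes[product] = ("removed", old_price, None)
    return changes


# Made-up snapshots from two consecutive scraping runs.
yesterday = {"Kettle": 2499, "Toaster": 3150, "Mixer": 8900}
today = {"Kettle": 2299, "Toaster": 3150, "Blender": 4500}

for product, change in price_changes(yesterday, today).items():
    print(product, change)
# Kettle ('changed', 2499, 2299)
# Blender ('new', None, 4500)
# Mixer ('removed', 8900, None)
```

Storing prices as integer cents avoids the rounding surprises that can make two equal floating-point prices compare as different.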

Organizing Scraped Data Effectively for Analysis

Once data is scraped from web sources, it is essential to organize the collected information in a structured format. Proper data storage solutions, such as databases or well-structured CSV files, can significantly enhance the ease of access and analysis. This organization allows businesses to quickly retrieve relevant data when needed and ensures that the analysis process is efficient and effective.
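Both storage options mentioned above are available in Python’s standard library. The following sketch writes the same scraped rows to CSV and to a SQLite table; the `products` table, its columns, and the two helper functions are assumptions chosen for the example, and an in-memory buffer and database are used so nothing is written to disk.

```python
import csv
import io
import sqlite3


def save_to_csv(rows, fieldnames, stream):
    """Write a list of dicts to an open text stream as CSV with a header row."""
    writer = csv.DictWriter(stream, fieldnames=fieldnames)
    writer.writeheader()
    writer.writerows(rows)


def save_to_sqlite(rows, connection):
    """Insert rows into a products table, replacing rows with the same name."""
    connection.execute(
        "CREATE TABLE IF NOT EXISTS products (name TEXT PRIMARY KEY, price REAL)"
    )
    connection.executemany(
        "INSERT OR REPLACE INTO products (name, price) VALUES (:name, :price)",
        rows,
    )
    connection.commit()


# Made-up scraped rows.
rows = [
    {"name": "Kettle", "price": 24.99},
    {"name": "Toaster", "price": 31.5},
]

buffer = io.StringIO()  # stands in for a file opened with newline=""
save_to_csv(rows, ["name", "price"], buffer)
print(buffer.getvalue())

connection = sqlite3.connect(":memory:")  # use a file path for persistent storage
save_to_sqlite(rows, connection)
print(connection.execute("SELECT name, price FROM products ORDER BY name").fetchall())
```

CSV is convenient for one-off exports and spreadsheets, while a database suits repeated scraping runs because `INSERT OR REPLACE` updates existing products instead of appending duplicates.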

Additionally, applying data cleaning and preprocessing techniques is vital after scraping. This involves verifying data accuracy, removing duplicates, and correcting formatting errors. Clean data not only improves the reliability of the analysis but also leads to more accurate insights, ultimately supporting better decision-making for businesses engaged in market research and competitive intelligence.
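A cleaning pass of this kind might look like the sketch below. It assumes each scraped record is a dictionary with `name` and `price` strings, that prices use a dollar sign and comma thousands separators, and that two records are duplicates when name (ignoring case) and price match. The `clean_records` function and these rules are illustrative assumptions, not a general-purpose cleaner.

```python
def clean_records(records):
    """Normalize names and prices, then drop duplicates and unusable rows."""
    cleaned = []
    seen = set()
    for record in records:
        # Collapse stray whitespace inside and around the name.
        name = " ".join(record.get("name", "").split())
        raw_price = record.get("price", "").replace("$", "").replace(",", "").strip()
        try:
            price = float(raw_price)
        except ValueError:
            continue  # skip rows whose price cannot be parsed
        key = (name.lower(), price)
        if not name or key in seen:
            continue  # skip unnamed rows and duplicates
        seen.add(key)
        cleaned.append({"name": name, "price": price})
    return cleaned


# Made-up raw rows with typical scraping noise.
raw = [
    {"name": "  Kettle ", "price": "$24.99"},
    {"name": "kettle", "price": "24.99"},      # duplicate of the first row
    {"name": "Toaster", "price": "1,031.50"},
    {"name": "Mixer", "price": "n/a"},         # unparseable price
]

print(clean_records(raw))
# [{'name': 'Kettle', 'price': 24.99}, {'name': 'Toaster', 'price': 1031.5}]
```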

The Future of Web Scraping: Trends and Innovations

As technology continues to advance, so does the landscape of web scraping. Innovations such as machine learning are emerging, enabling more sophisticated scraping techniques that can adapt to changes in web structures and layouts. Tools are evolving to incorporate AI capabilities, making it easier to scrape unstructured data and convert it into meaningful insights. This progressive transition indicates a shift towards automated and intelligent data extraction processes.

Furthermore, the rise of headless browsers makes it easier to scrape complex, dynamic sites. A headless browser still loads the page and runs its JavaScript, but it does so without drawing a visible window, which saves time and resources and gives businesses a competitive edge in their data collection methods. As these technological advancements unfold, organizations that embrace new scraping methods will be better positioned to harness the potential of collected data.

Understanding the Legal Implications of Web Scraping

As beneficial as web scraping can be for businesses, it is critical to understand the legal implications surrounding the practice. Numerous laws govern data collection, and each website may have different terms of service that outline permissible use of their data. Familiarizing oneself with legal constraints is essential to avoid violations that could lead to litigation or penalties.

Moreover, constant engagement with legal frameworks around data rights and privacy regulations is necessary, especially with growing concerns about data usage and protection. By staying informed and aligning scraping practices with established laws, businesses can harness the power of web scraping while minimizing risks associated with legal repercussions.

Future-Proofing Your Scraping Strategies

To ensure long-term success in data extraction efforts, businesses must actively future-proof their scraping strategies. This involves regularly updating tools and practices to stay ahead of evolving web technologies and regulations. By investing in adaptive web scraping solutions and continually training staff on the latest techniques, companies can maintain an edge in their data collection initiatives.

Additionally, fostering relationships with data providers can enhance the sustainability of web scraping practices. By creating partnerships, businesses can gain insights into the types of data they can collect, and potentially receive data in a more structured, reliable manner. This collaborative approach not only secures valuable data but also strengthens trust and communication with other stakeholders in the industry.

Frequently Asked Questions

What is web scraping and how does data extraction work?

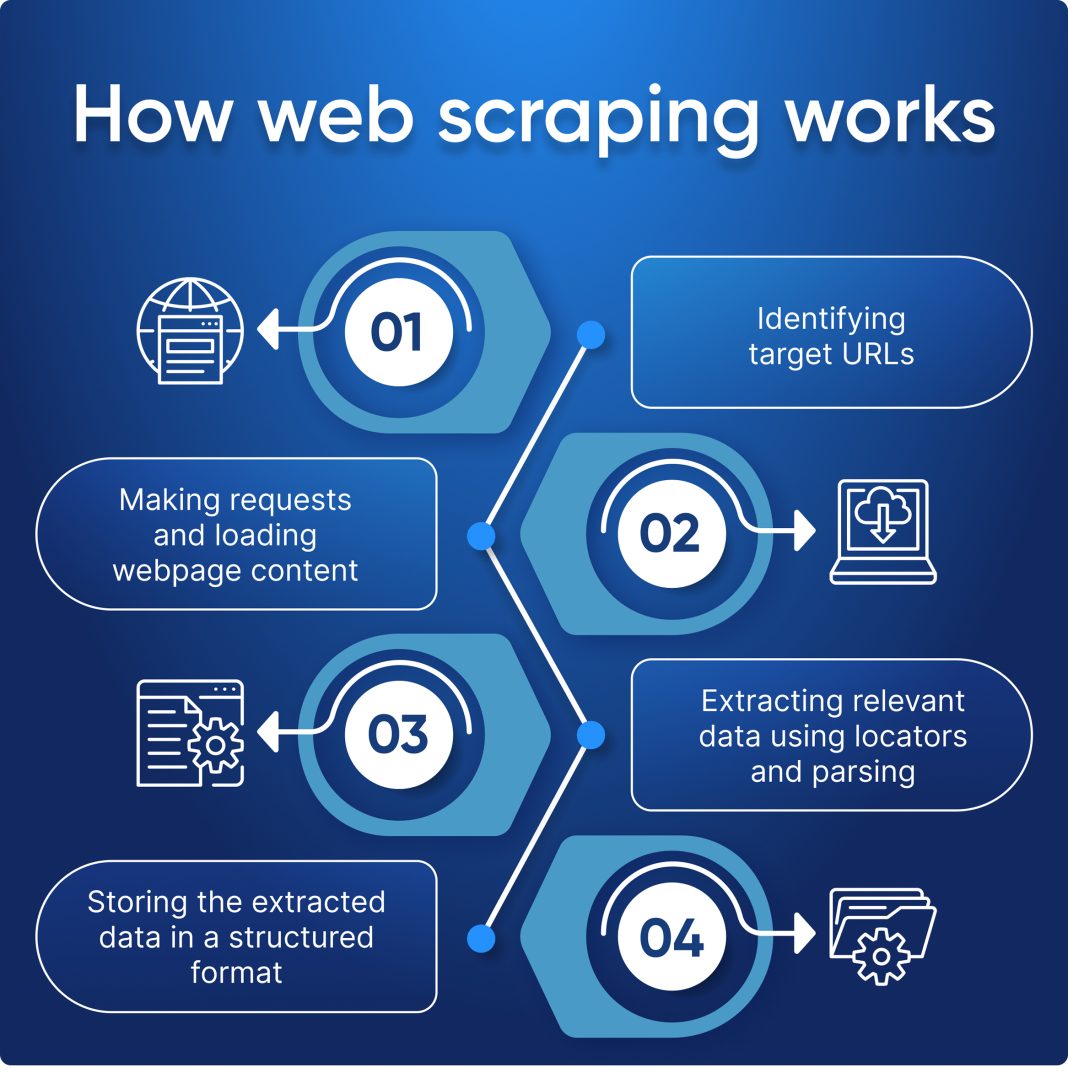

Web scraping is a technique used to programmatically extract data from websites. It involves making requests to web pages and retrieving their content, where useful information is parsed and stored. By utilizing data extraction methods, businesses can efficiently gather large volumes of data for analysis, enhancing decision-making and competitive intelligence.

What are the most popular scraping tools for effective data collection?

Some of the most popular scraping tools include Beautiful Soup, which is great for parsing HTML and XML, and Scrapy, a robust framework suited to large-scale web scraping projects. Additionally, Selenium is widely used for scraping dynamic websites whose content is rendered by JavaScript, because it drives a real browser.

How can web scraping support competitive intelligence efforts?

Web scraping helps businesses gather critical information about competitors by extracting data such as pricing, product offerings, and marketing strategies. This process supports competitive intelligence initiatives by providing insights that inform strategic planning and position businesses advantageously in the market.

What are the best practices for ethical web scraping?

To ensure ethical web scraping, always check a website’s `robots.txt` file and stay away from the paths it asks crawlers to avoid. It’s also important to avoid overloading servers by implementing time delays between requests. On the practical side, store your collected data in a structured format, such as CSV files or a database, for easy access and analysis.

What is the role of web scraping in market research?

Web scraping plays a crucial role in market research by enabling companies to collect data on trends, consumer preferences, and market conditions. By automating data collection from various websites, businesses can quickly gather the necessary information to analyze market dynamics and inform their strategies effectively.

Can web scraping be used for real-time data monitoring?

Yes, web scraping can be effectively utilized for real-time data monitoring, such as tracking changes in competitor pricing, product availability, or fluctuations in market trends. By regularly scraping data from relevant websites, businesses can stay updated and make timely decisions based on the most current information.

What should I consider when choosing scraping tools for my project?

When selecting scraping tools, consider factors such as the complexity of the websites you’ll be targeting, the size of the data you need to collect, the programming languages you’re familiar with, and the specific features offered by tools like Beautiful Soup and Scrapy. Choose a solution that aligns well with your data collection needs and technical capabilities.

| Aspect | Details |
|---|---|
| Benefits | Data Collection: Quickly gather large amounts of data. |
| | Competitive Analysis: Extract competitor data like pricing and product offerings. |
| | Market Research: Collect data for trend analysis and understanding customer preferences. |
| Tools | Beautiful Soup, Scrapy, Selenium – popular tools for web scraping. |
| Best Practices | Respect robots.txt, avoid server overload, and store data wisely (CSV or database). |

Summary

Web scraping is an essential method for extracting valuable data from websites, enabling businesses to perform in-depth analysis and gain a competitive edge. By understanding its benefits, utilizing the right tools, and following best practices, organizations can effectively harness the power of web scraping to drive informed decision-making and foster growth.