Web scraping is a key technique for automated data collection from online sources. It lets businesses and researchers gather information efficiently and turn large volumes of web content into usable data. With the right web scraping tools, users can support market research and track competitors by analyzing current trends. Ethical web scraping helps organizations stay compliant while still getting the data they need. However, it’s essential to navigate the potential challenges carefully to avoid the legal pitfalls associated with scraping.

Sometimes called data harvesting, and closely related to web crawling, this method involves systematically collecting web data for analysis and insight. Strictly speaking, crawling discovers and follows pages, while scraping extracts specific data from them; the two are usually combined. With suitable software, individuals and businesses can extract the information they need to support decision-making. Automated collection also allows frequent updates and efficient monitoring of online content, which feeds market analysis and intelligence. Because online data changes constantly, these practices help organizations stay competitive. Yet the rise of automated web scraping comes with the responsibility of adhering to ethical standards and complying with website policies.

Understanding Web Scraping: An Overview

Web scraping serves as a pivotal method for automated data collection, allowing users to systematically gather extensive information from websites. At the core of web scraping lies the ability to fetch and extract crucial data, facilitating informed decisions based on real-time data trends. This process can either be performed manually, which is time-consuming and inefficient, or through automated scripts that enhance productivity and accuracy. Understanding the complexities behind these techniques is essential for anyone looking to leverage data for various applications such as market research or academic studies.
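The fetch-and-extract step described above can be sketched with Python's standard library alone. The HTML snippet, the `product` class name, and the `ProductNameExtractor` class are illustrative assumptions; a real scraper would download the page over HTTP and would likely use a dedicated parsing library rather than this hand-written parser.

```python
from html.parser import HTMLParser

# Hypothetical page content; a real scraper would download this over HTTP.
SAMPLE_HTML = """
<html><body>
  <h2 class="product">Blue Widget</h2>
  <h2 class="product">Red Widget</h2>
  <h2 class="other">Not a product</h2>
</body></html>
"""


class ProductNameExtractor(HTMLParser):
    """Collects the text of every <h2 class="product"> element."""

    def __init__(self):
        super().__init__()
        self._inside_product = False
        self.names = []

    def handle_starttag(self, tag, attrs):
        # attrs arrives as a list of (name, value) pairs.
        if tag == "h2" and dict(attrs).get("class") == "product":
            self._inside_product = True

    def handle_endtag(self, tag):
        if tag == "h2":
            self._inside_product = False

    def handle_data(self, data):
        # Keep only non-empty text found inside a matching heading.
        if self._inside_product and data.strip():
            self.names.append(data.strip())


def extract_product_names(html):
    parser = ProductNameExtractor()
    parser.feed(html)
    parser.close()
    return parser.names


print(extract_product_names(SAMPLE_HTML))
```

The same pattern (locate an element by tag and attribute, then collect its text) is what higher-level scraping libraries wrap in more convenient selectors.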

Beyond just fetching data, web scraping offers a robust means for businesses to stay competitive. By utilizing state-of-the-art web scraping tools, organizations can conduct in-depth market analysis, keeping tabs on competitor pricing, product offerings, and customer sentiment. This invaluable data can then be analyzed to identify market trends and consumer preferences, guiding strategic decisions that align with customer needs. Thus, web scraping not only aids in data acquisition but also supports broader goals of competitive intelligence and market positioning.

Advantages of Web Scraping for Businesses

Web scraping offers significant advantages that streamline data collection across industries. Through automated data collection, businesses can gather large amounts of data quickly without extensive human labor. By setting up web scraping scripts, companies can retrieve up-to-date data on consumer behavior, pricing models, and product availability, which strengthens their market research. The aggregated data provides a solid foundation for informed business strategies and initiatives.

In addition to efficiency, web scraping supports near-real-time data monitoring. Businesses can track changes in their markets as they happen, allowing them to respond promptly to competitors’ moves and shifts in consumer preferences. This proactive approach is critical in today’s rapidly changing digital landscape, where timely information can make or break a business. Moreover, automating data updates removes manual transcription errors, although the scraped data is only as accurate as the source pages and the extraction logic, which reinforces the need for reliable web scraping practices.
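One way to picture this kind of monitoring is to compare two scraped snapshots and report what changed. The sketch below assumes each snapshot is a dictionary mapping product names to prices; the function name `detect_price_changes` and the sample data are hypothetical.

```python
def detect_price_changes(previous, current):
    """Return {product: (old_price, new_price)} for products whose price changed."""
    changes = {}
    for product, new_price in current.items():
        old_price = previous.get(product)
        # Products that are new in `current` have no old price and are skipped.
        if old_price is not None and old_price != new_price:
            changes[product] = (old_price, new_price)
    return changes


# Hypothetical snapshots from two consecutive scraping runs.
yesterday = {"Blue Widget": 19.99, "Red Widget": 24.50, "Green Widget": 9.00}
today = {"Blue Widget": 17.99, "Red Widget": 24.50, "Yellow Widget": 12.00}

print(detect_price_changes(yesterday, today))
```

Products that appear or disappear between runs are ignored here; a fuller version would probably report those separately, since they often signal changes in product availability.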

Ethical Considerations in Web Scraping

Conducting ethical web scraping is crucial to maintaining a positive reputation and avoiding legal ramifications. As web scraping involves accessing data that is often not meant for unrestricted distribution, it’s important to respect the terms of service outlined by individual websites. Ethical web scraping practices encourage compliance with legal guidelines and promote fair usage of the data being collected. Ignoring these considerations can lead to significant penalties, including lawsuits and IP bans from websites.

Adhering to ethical guidelines in web scraping not only protects the scraper but also benefits the data ecosystem as a whole. When companies utilize ethical web scraping techniques, they contribute to a community that respects data ownership and the privacy of individuals and businesses alike. Practicing transparency and accountability when extracting data fosters trust among users and webmasters, ultimately benefiting everyone involved in the data-sharing landscape.

Selecting the Right Web Scraping Tools

Choosing the appropriate web scraping tools is vital to achieving efficient and effective data extraction. Tools like Beautiful Soup, Scrapy, and Selenium each offer unique features tailored to different scraping needs. Beautiful Soup, for instance, is celebrated for its simplicity and is ideal for beginners looking to perform basic scraping tasks. On the other hand, Scrapy is a more complex framework designed for larger projects that require more extensive data processing and management. Understanding the specific requirements of your data collection task will guide you toward selecting the appropriate tool.

Additionally, evaluating the capabilities of web scraping tools is essential in determining their suitability for automated data collection. For example, Selenium drives a real browser, so it handles dynamic content generated by JavaScript well, but it is slower and heavier than necessary for static pages, where lighter tools are more efficient. Businesses must assess their specific needs, expected data volume, and desired output format to choose a tool that balances ease of use with comprehensive functionality.

Common Challenges in Web Scraping

Despite its benefits, web scraping comes with challenges that can hinder the extraction process. One of the primary issues is legal: depending on the jurisdiction, the data involved, and how it is used, scraping can infringe copyright or violate a site’s terms of service. Businesses must understand these rules and ensure that their web scraping activities remain compliant and ethical. Failure to do so can lead to severe consequences, including legal battles that disrupt business operations.

Another significant challenge involves the detection and blocking mechanisms that websites use to deter scrapers. Many sites actively monitor for scraping activity and may ban IP addresses or limit access for suspected bots. This makes it essential for companies to adopt scraping strategies that avoid triggering such barriers, allowing for sustained access without being flagged as malicious. Proxies, user-agent rotation, and rate limiting are some of the methods that can improve the reliability of web scraping efforts.

The Role of Web Scraping in Market Research

Web scraping plays a transformative role in the domain of market research, enabling organizations to gather and analyze data that can provide insights into competitors and consumer behavior. By employing automated data collection techniques, companies can obtain critical information about market trends, product performance, and pricing strategies that would otherwise require extensive manual effort to compile. This rapid data acquisition empowers businesses to make swift and informed decisions that can impact their market positioning.

Furthermore, web scraping allows companies to aggregate customer sentiment from online reviews and social media platforms. By using web scraping tools to extract this data, companies can assess public perceptions of and reactions to their products or services. This feedback loop can inform product development, marketing strategies, and overall customer engagement efforts, ultimately enhancing brand loyalty and market performance.

Best Practices for Ethical Web Scraping

To engage in ethical web scraping, organizations should adhere to established best practices that protect both their interests and those of the data owners. One of the primary best practices is to always check the website’s robots.txt file, which states which parts of the site automated clients are asked to avoid and, on some sites, how long to wait between requests. The file is a voluntary convention rather than a legal document, so honoring it does not replace reading the terms of service, but it helps maintain a respectful relationship with website owners by demonstrating compliance with their stated preferences.
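Checking robots.txt can be automated with Python's standard `urllib.robotparser` module. The sketch below parses an inline, made-up robots.txt so that it runs without network access; the `example-bot` user agent and the `example.com` URLs are placeholders. Against a real site, `set_url()` followed by `read()` would fetch the file instead.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, inlined so the example runs offline.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 5
"""


def build_rules(robots_text):
    """Parse robots.txt text and return a parser that can answer permission queries."""
    parser = RobotFileParser()
    parser.parse(robots_text.splitlines())
    return parser


rules = build_rules(ROBOTS_TXT)

# Ask whether a given user agent may fetch a given URL.
print(rules.can_fetch("example-bot", "https://example.com/products/"))
print(rules.can_fetch("example-bot", "https://example.com/private/data"))

# Crawl-delay is a non-standard directive; crawl_delay() returns None when it is absent.
print(rules.crawl_delay("example-bot"))
```

A scraper would typically call `can_fetch()` before every request and skip any URL for which it returns `False`.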

Moreover, establishing a clear and respectful scraping strategy is essential. Businesses should communicate their intentions to site owners if possible, and ensure that scraping does not impede website performance or abuse server resources. Setting reasonable scraping limits, such as avoiding excessive requests to a site within a short timeframe, will further emphasize ethical practices. By cultivating a responsible scraping framework, organizations can build credibility and trust in the data they utilize for their operations.
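A reasonable request limit can be enforced in code with a small throttle that guarantees a minimum gap between consecutive requests. This is a minimal sketch: the `Throttle` class and the 0.2-second interval are assumptions chosen for illustration, and an appropriate interval depends on the site.

```python
import time


class Throttle:
    """Enforces a minimum delay between consecutive requests."""

    def __init__(self, min_interval_seconds, clock=time.monotonic, sleep=time.sleep):
        self.min_interval = min_interval_seconds
        self._clock = clock    # injectable so the class can be tested without waiting
        self._sleep = sleep
        self._last_request = None

    def wait(self):
        """Block until at least min_interval has passed since the previous call."""
        now = self._clock()
        if self._last_request is not None:
            remaining = self.min_interval - (now - self._last_request)
            if remaining > 0:
                self._sleep(remaining)
                now = self._clock()
        self._last_request = now


throttle = Throttle(min_interval_seconds=0.2)
start = time.monotonic()
for page in range(3):
    throttle.wait()  # a real scraper would issue its request right after this call
elapsed = time.monotonic() - start
print(f"3 throttled requests took {elapsed:.2f}s")
```

The first call returns immediately; every later call sleeps only for whatever part of the interval has not already elapsed, so slow responses do not add unnecessary extra delay.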

Future Trends in Web Scraping Technology

The landscape of web scraping technology is continually evolving, and future trends suggest an increased integration of artificial intelligence (AI) and machine learning in automated data extraction processes. These advanced technologies will enhance the efficiency and accuracy of web scraping tasks, enabling businesses to gather richer and more nuanced datasets. With predictive algorithms, companies could analyze historical data to anticipate market changes, thereby refining their strategic approaches based on data-driven insights.

Additionally, as data privacy regulations become more stringent, web scraping tools will likely evolve to prioritize ethical practices and compliance with such laws. This shift might include features that promote transparency, allowing users to document their scraping activities and ensure adherence to legal standards. Moreover, as the demand for real-time data escalates, future web scraping solutions will likely focus on developing smarter, more adaptable technologies that can seamlessly handle the complexities of modern web environments while maintaining ethical integrity.

Integrating Web Scraping into Business Operations

Integrating web scraping into existing business operations can provide organizations with a significant competitive edge. By automating the data collection process, businesses can transform real-time insights into actionable strategies without dedicating extensive resources to manual data entry. This efficiency enables companies to allocate time and effort to analyzing gathered data, thereby sharpening their decision-making processes and optimizing overall productivity.

However, successful integration requires careful planning and consideration of technical aspects, such as team training and tool selection. Organizations should invest in developing the necessary skills among their workforce to maximize the benefits of web scraping tools. Furthermore, ensuring that web scraping practices are aligned with the company’s ethical standards and operational goals will enhance the overall effectiveness of the strategy, ultimately positioning the organization as a leader in data-driven decision-making.

Frequently Asked Questions

What is web scraping and how does it work?

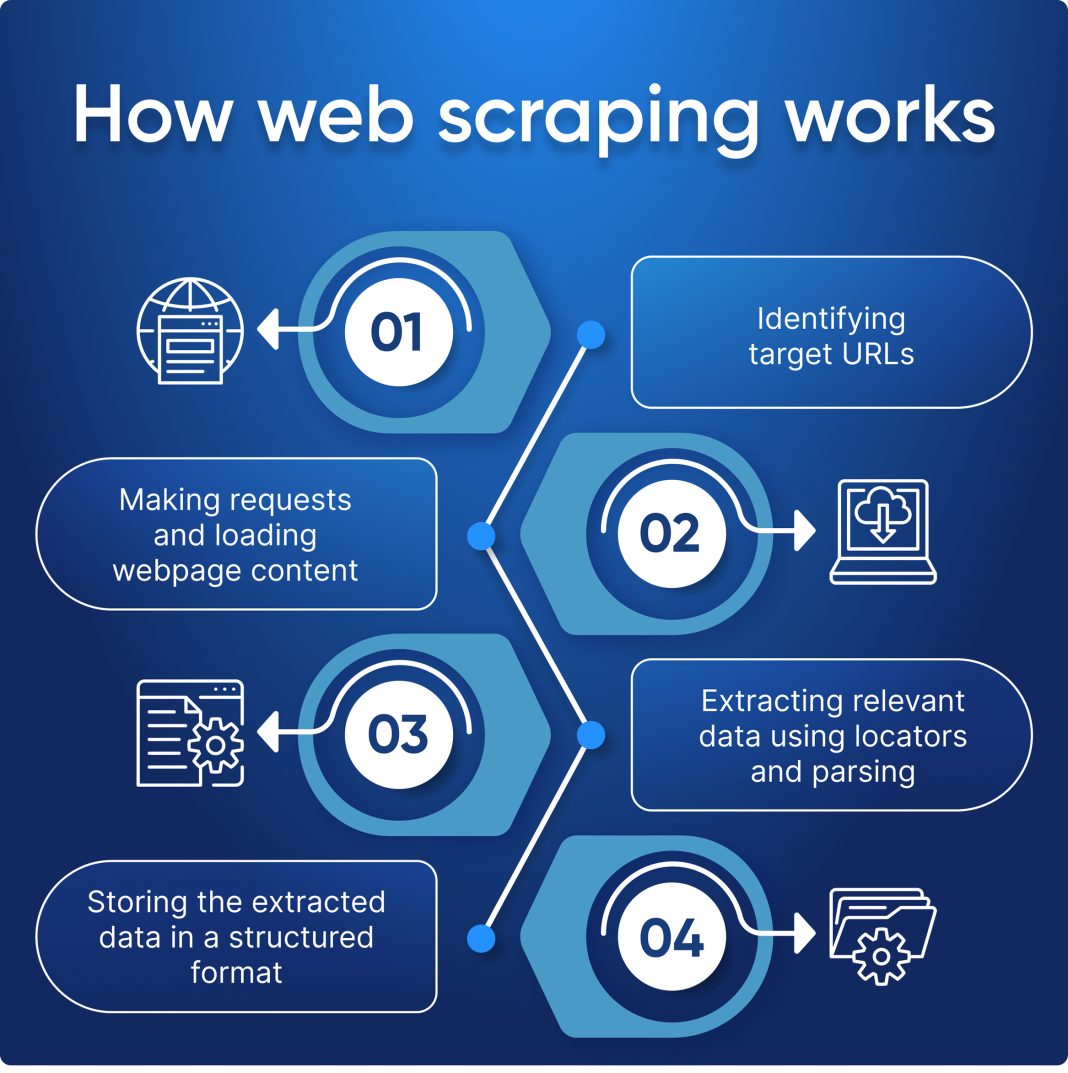

Web scraping is the automated process of extracting data from websites. It involves fetching a web page’s content and using specific algorithms or tools to pull relevant information. This can be achieved through various web scraping tools like Beautiful Soup, Scrapy, or Selenium, which help automate data collection effectively.

What are the benefits of using web scraping tools for data extraction?

Web scraping tools streamline the data extraction process, allowing users to gather large volumes of data quickly and efficiently. The benefits include automated data collection for market research, real-time updates on competitor pricing, and the ability to conduct extensive analysis without manual data entry, making it a valuable resource for businesses.

How can I perform ethical web scraping without violating website terms?

To perform ethical web scraping, it’s crucial to read and understand the terms of service of the website you intend to scrape. Always ensure that your scraping activities comply with legal guidelines, avoid overwhelming servers with rapid requests, and respect any restrictions set by the site. Using responsible web scraping tools can help maintain adherence to these practices.

What common challenges do users face when implementing automated data collection?

Common challenges in automated data collection through web scraping include legal complications due to violating website terms, encountering anti-scraping measures like CAPTCHAs or IP blocks, and managing changes in website structure that may disrupt data extraction scripts. It’s essential to stay updated on these issues to maintain successful scraping operations.
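A common defense against silent breakage from layout changes is to validate every scraped record and fail loudly when an expected field is missing. The sketch below assumes records are dictionaries of strings; `REQUIRED_FIELDS`, `StructureChangedError`, and `validate_record` are hypothetical names.

```python
REQUIRED_FIELDS = ("name", "price")  # hypothetical fields this scraper expects


class StructureChangedError(Exception):
    """Raised when a scraped record is missing fields the scraper expects."""


def validate_record(record):
    """Return the record unchanged, or raise if an expected field is missing or empty."""
    missing = [field for field in REQUIRED_FIELDS if not record.get(field)]
    if missing:
        raise StructureChangedError("missing fields: " + ", ".join(missing))
    return record


# Hypothetical scraper output; the second record simulates a selector that stopped matching.
scraped = [
    {"name": "Blue Widget", "price": "19.99"},
    {"name": "Red Widget", "price": ""},
]

for record in scraped:
    try:
        validate_record(record)
        print("ok:", record["name"])
    except StructureChangedError as error:
        print("check the page layout:", error)
```

Raising an error instead of storing empty values means a redesign of the target site shows up as a visible failure rather than as gaps in the dataset.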

What market research techniques can be enhanced by web scraping?

Web scraping enhances several market research techniques, including competitor analysis, price monitoring, customer sentiment analysis, and assessing product availability. By obtaining data efficiently, businesses can make informed decisions based on comprehensive insights gained through automated data collection.

Which programming languages are commonly used for web scraping?

Python is among the most popular programming languages for web scraping, largely because of libraries such as Beautiful Soup and Scrapy. Other commonly used languages include JavaScript (with Node.js), Ruby, and PHP. Each has its own tools for data extraction.

Are there any legal risks associated with web scraping?

Yes, there are potential legal risks involved in web scraping. These can include copyright infringement or breach of contract if the scraping violates a website’s terms of service. It’s vital to ensure compliance with laws such as the Computer Fraud and Abuse Act in the U.S. and similar regulations in other jurisdictions.

How can I prevent my web scraping efforts from being detected and blocked?

To avoid detection and blocking during web scraping, consider implementing techniques such as rotating IP addresses, using proxies, adjusting request headers, and mimicking human behavior by randomizing request intervals. Additionally, respecting the site’s robots.txt file and avoiding excessive requests can help maintain a lower profile.
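The randomized request intervals mentioned above can be sketched as follows. The function name `jittered_delays` and its parameters are illustrative assumptions and the values are arbitrary; keeping the base delay generous also serves the goal of not overloading the site.

```python
import random


def jittered_delays(count, base_seconds, jitter_seconds, rng=None):
    """Return `count` delays, each base_seconds plus a random extra of up to jitter_seconds."""
    rng = rng or random.Random()
    return [base_seconds + rng.uniform(0, jitter_seconds) for _ in range(count)]


# Hypothetical values: wait between 2 and 5 seconds before each request.
for delay in jittered_delays(count=3, base_seconds=2.0, jitter_seconds=3.0):
    print(f"would sleep {delay:.2f}s before the next request")
```

In a real scraper each value would be passed to `time.sleep()` before the corresponding request; the example only prints the delays so that it runs instantly.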

What tools are essential for effective web scraping?

Essential tools for effective web scraping include Beautiful Soup for parsing HTML pages, Scrapy for comprehensive web crawling, and Selenium for interacting with dynamic web content generated by JavaScript. Each tool offers unique capabilities that can enhance data extraction efforts.

How can businesses leverage web scraping for competitive analysis?

Businesses can leverage web scraping for competitive analysis by automatically collecting data on competitors’ pricing, inventory levels, product features, and customer reviews. This data provides valuable insights that can inform pricing strategies, marketing efforts, and product development to maintain a competitive edge in the market.

| Aspect | Details |
|---|---|
| What is Web Scraping? | The process of extracting data from websites through manual or automated means. |
| Benefits | 1. Data Collection: Automate gathering of large datasets. 2. Market Research: Analyze competitor pricing and product offerings. 3. Real-time Updates: Monitor changes in information. |
| Challenges | 1. Legal Issues: Check website terms of service. 2. Detection and Blocking: Anti-scraping measures from websites can hinder data extraction. |
| Tools for Web Scraping | Beautiful Soup, Scrapy, Selenium |

Summary

Web scraping is a valuable technique for data collection across many domains. It encompasses both manual and automated methods of extracting data from websites. Its benefits include streamlined data collection for market analysis and real-time monitoring of critical information. However, users must navigate challenges such as legal considerations and the anti-scraping measures that websites employ. With the right tools, such as Beautiful Soup, Scrapy, and Selenium, web scraping can support data-driven decisions while maintaining ethical standards.