As AI reshapes industries worldwide, **ethics in artificial intelligence** has become an urgent topic of discussion. AI can drive efficiency and improve decision-making, but that same potential raises questions of **artificial intelligence responsibility**: who answers for what these systems do. Ethical dilemmas such as **machine learning bias** and **AI and privacy issues** challenge us to build systems that are fair and secure. As we weigh the **AI impact on jobs**, it is equally important to understand how these technologies can displace workers and reshape the workplace. Robust ethical frameworks are essential to ensuring that AI serves humanity equitably and responsibly.

As technology advances, the moral implications of automated systems and machine intelligence have gained prominence. The discourse on **AI ethics** covers responsible innovation and the governance structures needed to guide these transformative technologies. Algorithmic bias and the protection of individual privacy are at the forefront of discussions on AI accountability. The effects of AI on employment also call for a careful examination of how the workforce can adapt. By fostering dialogue among industry leaders, ethicists, and regulators, we can steer intelligent machines toward a more equitable future.

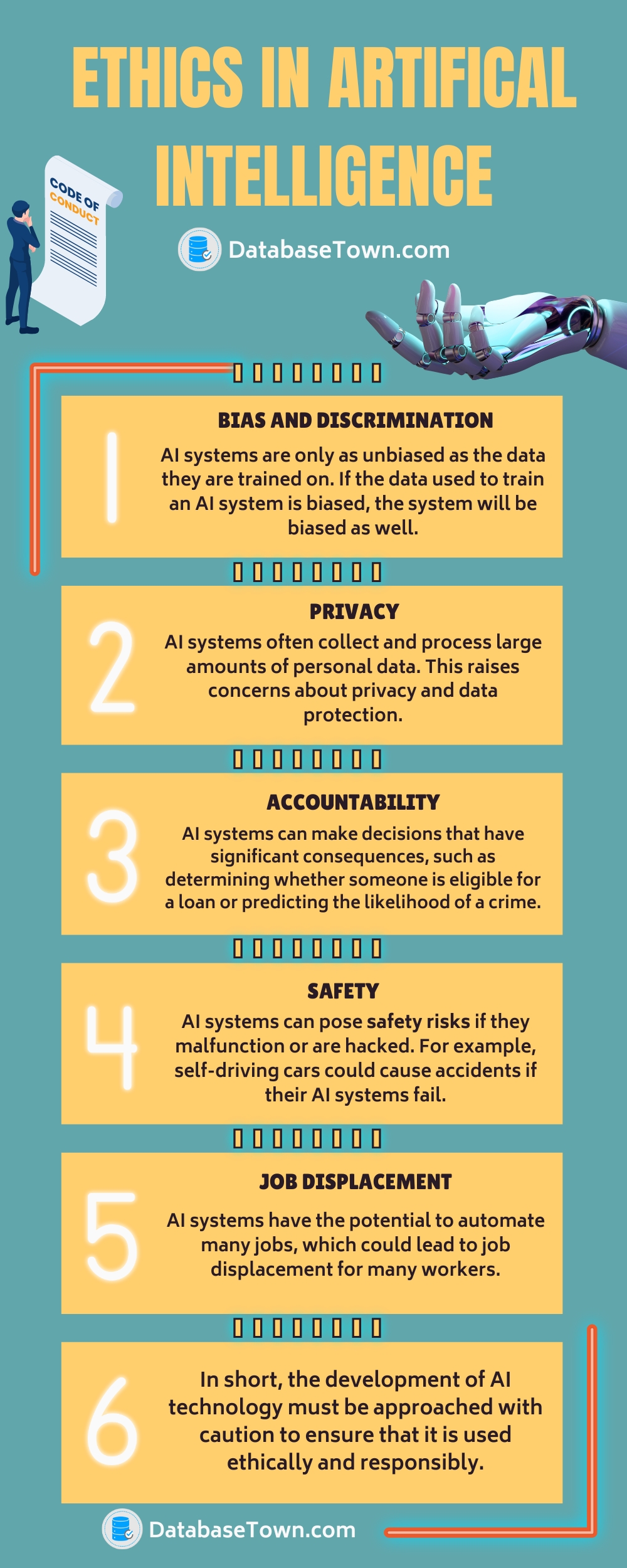

Understanding Ethics in Artificial Intelligence

Ethics in artificial intelligence is central to the debate over the technology’s rapid advancement. As AI systems increasingly make decisions that affect daily life, such as who is approved for a loan or shortlisted for a job, concerns about accountability and transparency grow. Stakeholders across industries must acknowledge the moral implications of their AI projects and ensure those systems treat all individuals fairly and with respect. This commitment helps mitigate the risk of bias, which can arise from flawed or unrepresentative training data or from poor design choices, and which can lead to discriminatory outcomes.

Building a robust ethical framework into AI development can support innovation while also fostering trust among users and stakeholders. By addressing ethical dimensions such as informed consent, data privacy, and algorithmic fairness, organizations can minimize potential harms while maximizing benefits. This approach encourages developers to consider the broader societal impact of their work and treats responsibility and integrity as essential components of innovation.

The Role of AI and Ethics in Job Advancement

As artificial intelligence technologies advance, their potential impact on employment cannot be ignored. AI-driven automation carries the risk of job displacement across sectors from manufacturing to services. Organizations must implement these technologies responsibly to avoid widening socioeconomic disparities. Industry leaders should engage openly with their workforce and stakeholders to devise strategies that embrace AI innovation while protecting job security and investing in reskilling.

The intersection of AI ethics and employment can also create new opportunities. By promoting AI literacy and encouraging human-AI collaboration, industries can combine the strengths of people and machines: AI handles repetitive processes while workers take on more complex and creative tasks, raising overall productivity. A commitment to ethical AI integration will help organizations not only navigate these challenges but also use AI to support sustainable job growth.

Addressing AI Impact on Privacy and Security

AI’s capacity for processing vast amounts of data presents significant privacy and security challenges that necessitate ethical consideration. As organizations deploy AI solutions, particularly in sensitive sectors like healthcare and finance, they must prioritize data protection to build trust with consumers. This includes implementing rigorous data security measures and transparent data usage policies, ensuring that personal information remains confidential and is used ethically.
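As one illustration of a concrete data protection measure, the Python sketch below pseudonymizes records before they enter an AI pipeline: it drops direct identifiers and replaces a chosen ID field with a keyed hash (HMAC-SHA256 from Python’s standard library). The field names (`email`, `name`, `phone`) and the helper functions are hypothetical assumptions. Pseudonymization is only one layer of protection: pseudonymized data can often still be linked back to individuals, so it complements rather than replaces access controls, encryption, and consent.

```python
import hashlib
import hmac
import secrets


def pseudonymize(identifier: str, key: bytes) -> str:
    """Map an identifier to a stable 64-character token using HMAC-SHA256.

    The same identifier and key always give the same token, so records can
    still be joined; without the key, an attacker cannot recompute tokens
    from guessed identifiers.
    """
    return hmac.new(key, identifier.encode("utf-8"), hashlib.sha256).hexdigest()


def pseudonymize_record(record, key, id_field="email", drop_fields=("name", "phone")):
    """Return a copy of record with direct identifiers removed.

    The field names are hypothetical; adapt them to the actual data schema.
    """
    cleaned = {
        field: value
        for field, value in record.items()
        if field != id_field and field not in drop_fields
    }
    cleaned["subject_id"] = pseudonymize(record[id_field], key)
    return cleaned


if __name__ == "__main__":
    # In practice the key would come from a secrets manager, stored apart from the data.
    key = secrets.token_bytes(32)
    raw = {"email": "ana@example.com", "name": "Ana", "phone": "555-0100", "age": 34}
    # Prints a dict containing only 'age' and a 64-character 'subject_id'.
    print(pseudonymize_record(raw, key))
```

Using a keyed HMAC rather than a plain hash is the design choice that matters here: identifiers such as email addresses are easy to guess, and an unkeyed hash of a guessed address would match the stored token.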

Striking the right balance between using AI for efficiency and upholding privacy rights is equally crucial. The ethical use of AI requires informed consent, meaning individuals are told how their data is collected, used, and stored. With growing public awareness of privacy issues, companies that prioritize ethical AI practices are likely to gain a competitive edge and demonstrate a commitment to responsible, respectful data handling.

Mitigating Machine Learning Bias Through Ethical AI Practices

Machine learning models reflect the data they are trained on, so they can reproduce the biases and stereotypes embedded in that data. Ethical frameworks for AI development are essential to address these biases and ensure fairness and inclusivity. By training on representative datasets and auditing AI systems regularly, organizations can identify and correct biases before they translate into real-world inequalities.
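To make "regular audits" more concrete, here is a minimal Python sketch of one common check: comparing selection rates (the share of positive predictions) across groups. It assumes binary predictions and a single group attribute; the function names, the example data, and the 0.8 threshold (borrowed from the "four-fifths" rule of thumb used in US employment-selection guidelines) are illustrative assumptions. A real audit would examine several fairness metrics, not just this one.

```python
from collections import defaultdict


def selection_rates(predictions, groups):
    """Return the fraction of positive (1) predictions for each group."""
    if len(predictions) != len(groups):
        raise ValueError("predictions and groups must have the same length")
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += int(pred)
    return {g: positives[g] / totals[g] for g in totals}


def audit_selection_rates(predictions, groups, threshold=0.8):
    """Compare group selection rates and flag a large disparity.

    parity_gap is the highest rate minus the lowest rate (demographic parity
    difference); impact_ratio is lowest / highest. The 0.8 threshold is an
    assumed rule of thumb, not a legal test.
    """
    rates = selection_rates(predictions, groups)
    highest, lowest = max(rates.values()), min(rates.values())
    impact_ratio = lowest / highest if highest > 0 else 1.0
    return {
        "rates": rates,
        "parity_gap": highest - lowest,
        "impact_ratio": impact_ratio,
        "flagged": impact_ratio < threshold,
    }


if __name__ == "__main__":
    # Hypothetical model outputs (1 = approved) for two groups, A and B.
    preds = [1, 1, 0, 1, 0, 0, 1, 0]
    groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
    report = audit_selection_rates(preds, groups)
    print(report["rates"])    # {'A': 0.75, 'B': 0.25}
    print(report["flagged"])  # True, since 0.25 / 0.75 is about 0.33
```

A flagged result is a prompt for investigation rather than proof of discrimination: differences in selection rates can have legitimate explanations, and other metrics, such as error rates per group, can tell a different story.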

Fostering a culture of ethical AI development also requires collaboration among technologists, ethicists, and community representatives. Engaging stakeholders from varied backgrounds brings different perspectives to the table and helps ensure AI technologies serve all communities equitably. A proactive approach to mitigating machine learning bias advances social justice and also improves the reliability and effectiveness of AI applications.

The Importance of Collaboration in AI Ethics

Collaboration between technologists, ethicists, and policymakers is critical in navigating the ethical landscape of artificial intelligence. By forming multidisciplinary teams, organizations can better address the complex challenges posed by AI and ensure that diverse perspectives inform decision-making. This collaborative approach encourages innovation and helps build trust and accountability in AI systems.

Furthermore, fostering partnerships with regulatory bodies can ensure that AI technologies are developed within ethical guidelines. Engaging in dialogue with stakeholders from various sectors, including academia and civil society, helps to establish a shared understanding of AI’s implications and cultivates a culture of responsibility. Through collaboration, the technology’s benefits can be maximized while minimizing ethical risks.

Responsible AI Development and Innovation

To drive AI innovation responsibly, organizations must prioritize ethical considerations from the inception of technology development. This involves creating guidelines that inform AI applications and promote public welfare while avoiding harms. Organizations should actively seek out feedback from users and affected communities to better align their AI initiatives with societal values and needs.

Additionally, investing in ongoing education and training for AI developers on ethical practices will promote a culture of responsibility. By equipping technologists with the tools to consider the ethical ramifications of their work, organizations can foster a new generation of innovators eager to craft AI solutions that enhance society while adhering to ethical standards. Responsible AI development not only leads to innovative outcomes but also reinforces public confidence in the technology.

AI Ethics: Guidelines for Future Innovations

Establishing clear ethical guidelines for artificial intelligence is vital for shaping future innovations that align with societal values. These guidelines should encompass principles such as transparency, accountability, and inclusivity, providing a framework for developers to create ethical AI solutions. By maintaining these standards, organizations can ensure that artificial intelligence technologies promote the common good while minimizing adverse consequences.

Moreover, as the AI landscape evolves, so too must ethical guidelines adapt to new challenges and opportunities. Ongoing dialogue among stakeholders, including technologists, ethicists, and policymakers, will be crucial to continuously refining and updating these guidelines. As a result, organizations will be better equipped to enhance the positive impact of AI on society while navigating ethical complexities effectively.

Navigating AI Challenges: Policy and Ethics Integration

The rapid advancement of AI technology brings forth numerous challenges that necessitate a robust integration of policy and ethics. Policymakers must work alongside technologists and ethicists to develop regulations that ensure ethical standards in AI deployment. This collaborative effort will help to create an environment where innovation can flourish while safeguarding human rights and promoting accountability.

Additionally, integrating ethics into policy developments can help anticipate and address potential risks associated with AI technologies before they escalate. This proactive approach encourages organizations to evaluate the societal impact of their AI systems, leading to more responsible business practices. By positioning ethics as a core component of AI policy, societies can navigate challenges effectively and harness the full potential of artificial intelligence for the benefit of all.

The Future of AI Technology in an Ethical Framework

As we look toward the future of artificial intelligence, embedding ethical considerations into technology development will become increasingly essential. Organizations must recognize that AI’s future success lies not only in technical advances but also in their ability to uphold ethical principles. This dual focus will drive innovation that enhances human welfare while addressing critical ethical concerns such as bias, privacy, and job displacement.

Additionally, the landscape of AI technology will benefit from collaborative initiatives aimed at fostering ethical innovation. By harnessing a diverse range of perspectives, organizations can create AI solutions that truly reflect societal values and promote equity. As stakeholders unite in their commitment to an ethical framework, they will help steer the future of AI towards a trajectory that serves humanity’s best interests, ensuring that technology remains a force for good.

Frequently Asked Questions

What are the key principles of AI ethics?

AI ethics revolves around guiding principles that ensure artificial intelligence technologies are developed and used responsibly. These principles typically include fairness, accountability, transparency, privacy, and the promotion of human well-being. By adhering to these principles, organizations can address potential biases in machine learning and the ethical implications of AI applications.

How does machine learning bias affect AI ethics?

Machine learning bias is a critical concern in AI ethics, as it can lead to unfair outcomes in decision-making processes. When datasets used for training AI models contain biased information, the AI system may perpetuate these biases, resulting in discriminatory practices. Addressing machine learning bias is essential to ensuring ethical AI systems that treat all individuals fairly and equitably.

What is the responsibility of organizations in ensuring AI ethics?

Organizations have a significant responsibility in promoting AI ethics by implementing clear policies that govern the development and deployment of artificial intelligence technologies. This includes conducting regular audits to identify and mitigate biases, ensuring data privacy, and fostering a culture of ethical decision-making. Strong governance frameworks can help organizations navigate the ethical challenges that AI poses.

How does AI impact jobs and what are the ethical considerations?

The impact of AI on jobs raises substantial ethical considerations, as automation may lead to job displacement for many workers. AI ethics calls for a proactive approach to address these challenges, including retraining programs, the creation of new job opportunities, and ensuring that the benefits of AI advancements are equitably shared across society. Ethical policies should prioritize worker welfare while harnessing AI’s potential.

What are the privacy issues associated with artificial intelligence?

AI and privacy issues are closely intertwined, because training AI systems often requires collecting large amounts of personal data, which can compromise individual privacy. Ethical AI practices demand robust data protection measures, ensuring that personal information is handled responsibly and transparently. Organizations must comply with privacy regulations and prioritize the consent and rights of individuals when using AI technologies.

| Key Point | Explanation |
|---|---|
| Significance of AI | AI is revolutionizing various industries, enhancing efficiency and decision-making. |
| Ethical Concerns | Issues such as bias, privacy breaches, and job displacement are rising. |
| Need for Frameworks | Developing ethical frameworks is essential to balance innovation with responsibility. |
| Collaboration Importance | Partnerships between technologists, ethicists, and policymakers are critical. |

Summary

Ethics in artificial intelligence is a vital consideration as the field continues to evolve, and addressing AI’s ethical implications is essential to its responsible use across sectors. As AI systems become more deeply integrated into daily life, establishing guidelines and frameworks to mitigate risks related to bias, privacy, and job displacement is crucial. Effective collaboration among technologists, ethicists, and policymakers will play a significant role in navigating these challenges and ensuring that AI is harnessed for the benefit of humanity.