Web scraping, also called web harvesting, is the automated extraction of data from websites. As businesses and researchers look to harness online data, understanding effective scraping methods becomes essential, and libraries such as BeautifulSoup and Scrapy make collecting that data far more efficient. Scraping must also be done ethically, in compliance with applicable laws and each site's terms. This post explores best practices and coding techniques that can optimize your web scraping efforts, along with the common challenges that arise during data collection.

Understanding Web Scraping: An Introduction

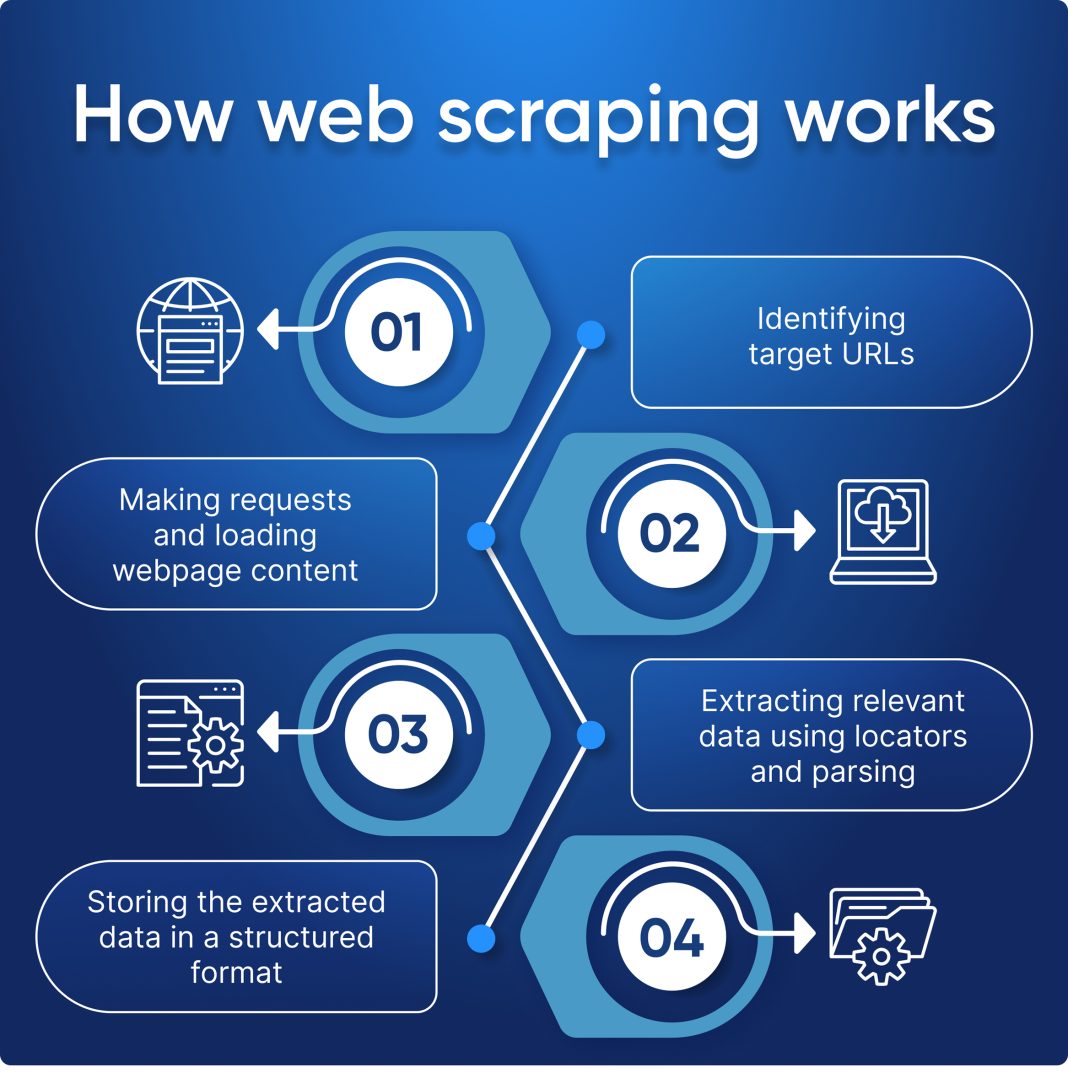

Web scraping is the automated process of collecting data from websites. Organizations use it to gather information from many sources for analysis and insight generation. With effective scraping methods, businesses can track competitor prices, aggregate customer feedback, and conduct market research, gaining a competitive edge. Grasping the foundational concepts is the first step toward using web scraping to its full potential.

The complexity of web scraping depends on the data you need and the structure of the target website. At its simplest, scraping means fetching a page's raw content without much attention to its format. More advanced approaches use scraping tools and libraries to navigate the page's HTML structure so that the right elements are extracted accurately. Understanding these differences helps with both performance and compliance with ethical standards in data collection.
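To make the "navigate the HTML structure" step concrete, here is a minimal sketch using only Python's standard-library `html.parser`, so it runs without third-party installs. The sample markup, the `product`/`name`/`price` class names, and the `ProductParser` class are hypothetical; BeautifulSoup (for example, `soup.select("li.product")`) or Scrapy selectors would express the same lookups more concisely.

```python
from html.parser import HTMLParser

# Hypothetical markup standing in for a fetched page.
SAMPLE_HTML = """
<ul>
  <li class="product"><span class="name">Widget</span><span class="price">$9.99</span></li>
  <li class="product"><span class="name">Gadget</span><span class="price">$24.50</span></li>
</ul>
"""


class ProductParser(HTMLParser):
    """Collects (name, price) pairs from <span class="name"> and <span class="price"> tags."""

    def __init__(self):
        super().__init__()
        self.products = []   # finished (name, price) tuples
        self._field = None   # which field the currently open <span> holds, if any
        self._current = {}   # fields collected so far for the current <li>

    def handle_starttag(self, tag, attrs):
        classes = (dict(attrs).get("class") or "").split()
        if tag == "span" and ("name" in classes or "price" in classes):
            self._field = "name" if "name" in classes else "price"

    def handle_endtag(self, tag):
        if tag == "span":
            self._field = None
        elif tag == "li" and self._current:
            # A closing </li> marks the end of one product record.
            self.products.append((self._current.get("name"), self._current.get("price")))
            self._current = {}

    def handle_data(self, data):
        if self._field and data.strip():
            self._current[self._field] = data.strip()


parser = ProductParser()
parser.feed(SAMPLE_HTML)
print(parser.products)  # [('Widget', '$9.99'), ('Gadget', '$24.50')]
```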

Best Practices for Data Extraction

When engaging in data extraction, adhering to best practices is crucial for both efficiency and compliance. This includes respecting the target website's terms of service, implementing rate limiting so that requests do not overwhelm its servers, and sending a descriptive User-Agent string that identifies your scraper honestly. Following these practices keeps data collection running smoothly and minimizes the risk of legal repercussions.
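A minimal sketch of two of these practices, assuming a fixed minimum delay per host and an honest User-Agent header, might look like this. The `HostRateLimiter` class, the 2-second interval, and the User-Agent string with its contact URL are hypothetical choices; the clock and sleep functions are injectable so the limiter can be tested without real waiting.

```python
import time
import urllib.request
from urllib.parse import urlparse

# Hypothetical identification string; replace with your project's name and a real contact URL.
USER_AGENT = "example-research-bot/0.1 (+https://example.com/bot-contact)"


class HostRateLimiter:
    """Enforces a minimum delay between consecutive requests to the same host."""

    def __init__(self, min_interval=2.0, clock=time.monotonic, sleep=time.sleep):
        self.min_interval = min_interval
        self._clock = clock
        self._sleep = sleep
        self._last_request = {}  # host -> timestamp of the previous request

    def wait(self, url):
        host = urlparse(url).netloc
        now = self._clock()
        last = self._last_request.get(host)
        if last is not None:
            remaining = self.min_interval - (now - last)
            if remaining > 0:
                self._sleep(remaining)
                now = self._clock()
        self._last_request[host] = now


def build_request(url):
    """Creates a request that identifies the scraper via its User-Agent header."""
    return urllib.request.Request(url, headers={"User-Agent": USER_AGENT})


def polite_fetch(url, limiter):
    """Waits for the host's rate limit, then fetches the URL and returns the body bytes."""
    limiter.wait(url)
    with urllib.request.urlopen(build_request(url), timeout=10) as response:
        return response.read()
```

In use, one `HostRateLimiter()` instance would be shared across all calls to `polite_fetch(url, limiter)`, so requests to different hosts proceed freely while requests to the same host are spaced out.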

Moreover, structuring your scraping approach can significantly impact the quality of the extracted data. By employing robust parsing techniques, often facilitated by tools like BeautifulSoup and Scrapy, scrapers can effectively navigate complex HTML layouts. This saves time and resources while enhancing the reliability of the collected information, allowing businesses to analyze and utilize data without significant errors or omissions.

Ethical Considerations in Web Scraping

Ethical web scraping is an essential aspect of responsible data extraction. It is vital for researchers and businesses alike to understand the implications of their activities. Ethical considerations include transparency about the purpose of data collection, obtaining permission when necessary, and avoiding the collection of sensitive or personal data without consent. By maintaining high ethical standards, organizations can foster trust and avoid potential legal challenges associated with their scraping efforts.

Furthermore, practicing ethical web scraping involves being mindful of the impacts on the target website. Heavy scraping activities can lead to performance issues for the site, ultimately disrupting user experience. By implementing strategies such as scheduling scrapers during off-peak hours and limiting the frequency of requests, data extractors can strike a balance between gathering necessary information and respecting the integrity of the underlying site.

Web Scraping Tools: Enhancing Your Data Collection Process

Choosing the right web scraping tools is critical to streamline the data extraction process. Tools such as BeautifulSoup and Scrapy equip developers with versatile functionalities to parse HTML and XML documents effectively. BeautifulSoup, for instance, provides an intuitive interface that simplifies web scraping tasks, especially for beginners. Scrapy, on the other hand, is a more comprehensive framework designed for larger projects, offering built-in support for handling requests, processing data, and navigating complex sites.

Utilizing these tools not only improves the scraping experience but also enables customized scraping solutions. Frameworks such as Scrapy support scalability: their request scheduling and item pipelines let a project grow along with its data requirements. This combination of efficiency and customization makes web scraping tools an indispensable part of any data-driven strategy.

Navigating Legal Considerations in Web Scraping

Understanding the legal landscape surrounding web scraping is vital for anyone looking to extract data from the web. Laws regarding data usage, copyright, and intellectual property can vary significantly across jurisdictions. Therefore, it is crucial to familiarize oneself with local regulations as well as the specific terms of service of the websites being targeted to avoid potential legal pitfalls.

Court rulings on web scraping have begun to clarify where its legal boundaries lie, but outcomes depend heavily on the jurisdiction and the specific facts of each case. By keeping abreast of these developments and following ethical scraping practices, businesses can mitigate legal risk, and consulting legal counsel when necessary helps organizations extract data without infringing on rights or regulations.

Common Challenges in Web Scraping and How to Overcome Them

Web scraping, while powerful, is not without its challenges. Websites frequently deploy measures such as CAPTCHAs and IP blocking to deter automated data extraction, which can frustrate scrapers trying to gather data efficiently. Common workarounds, such as routing requests through a pool of proxies so they come from rotating IP addresses or incorporating CAPTCHA-solving services, can significantly improve the chances of successful data collection.
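As a sketch of the proxy-rotation idea, the snippet below cycles through a pool of proxy URLs in round-robin order and builds a standard-library `urllib` opener routed through each one. The proxy addresses are hypothetical placeholders from the 203.0.113.0/24 documentation range, and `ProxyRotator` is an illustrative name, not part of any library.

```python
import itertools
import urllib.request

# Hypothetical proxy pool; 203.0.113.0/24 is reserved for documentation examples.
PROXIES = [
    "http://203.0.113.10:8080",
    "http://203.0.113.11:8080",
    "http://203.0.113.12:8080",
]


class ProxyRotator:
    """Hands out proxies in round-robin order so consecutive requests use different IPs."""

    def __init__(self, proxies):
        if not proxies:
            raise ValueError("at least one proxy is required")
        self._pool = itertools.cycle(proxies)

    def next_opener(self):
        """Returns the next proxy URL and an opener that sends HTTP(S) traffic through it."""
        proxy = next(self._pool)
        handler = urllib.request.ProxyHandler({"http": proxy, "https": proxy})
        return proxy, urllib.request.build_opener(handler)


rotator = ProxyRotator(PROXIES)
# Typical use (not executed here, since the proxies are placeholders):
#   proxy, opener = rotator.next_opener()
#   html = opener.open("https://example.com/", timeout=10).read()
```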

Moreover, web page layouts can change without warning, and even small changes to the HTML can break previously working scrapers, so scrapers require ongoing maintenance and adjustment. By adopting a flexible, modular design (for example, keeping selectors separate from the code that uses them), developers can more easily accommodate changes to website structures and keep their data extraction resilient.
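One way to apply this modular approach is to keep selectors in a single configuration and give each field an ordered list of fallbacks, so a redesign means editing data rather than parsing logic. The sketch below uses the standard library's `html.parser` and matches elements by CSS class only; the class names and the `FIELD_SELECTORS` mapping are hypothetical.

```python
from html.parser import HTMLParser

# Hypothetical selector config: each field lists candidate CSS class names in priority order.
# If a redesign renames "price" to "product-price", only this mapping needs updating.
FIELD_SELECTORS = {
    "title": ["product-title", "title"],
    "price": ["price", "product-price"],
}

# Void elements never get a closing tag, so they must not be pushed onto the stack.
VOID_TAGS = {"area", "base", "br", "col", "embed", "hr", "img", "input",
             "link", "meta", "source", "track", "wbr"}


class ClassTextCollector(HTMLParser):
    """Records, for each CSS class, the first non-empty text inside an element with that class."""

    def __init__(self):
        super().__init__()
        self.text_by_class = {}
        self._open = []  # class lists of the elements currently open

    def handle_starttag(self, tag, attrs):
        if tag not in VOID_TAGS:
            self._open.append((dict(attrs).get("class") or "").split())

    def handle_endtag(self, tag):
        if tag not in VOID_TAGS and self._open:
            self._open.pop()

    def handle_data(self, data):
        text = data.strip()
        if text:
            for classes in self._open:
                for cls in classes:
                    self.text_by_class.setdefault(cls, text)


def extract_fields(html, selectors=FIELD_SELECTORS):
    """Returns each field's text from its first matching class, or None if nothing matches."""
    collector = ClassTextCollector()
    collector.feed(html)
    found = collector.text_by_class
    return {
        field: next((found[cls] for cls in candidates if cls in found), None)
        for field, candidates in selectors.items()
    }
```

This sketch assumes reasonably well-formed markup (unclosed `<p>` or `<li>` tags, for instance, would misalign its stack of open elements); BeautifulSoup's lenient parsing copes better with messy real-world HTML.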

The Role of Coding in Optimizing Web Scraping

Coding plays an integral role in optimizing web scraping tasks. By incorporating efficient programming practices, developers can enhance the speed and accuracy of their scraping processes. Using languages like Python, which is well-equipped with libraries such as BeautifulSoup and Scrapy, allows for seamless integration of scraping routines and data handling scripts, resulting in a more streamlined workflow.

Effective coding also helps with common data-extraction problems, such as managing asynchronous requests or processing large volumes of data. Error-handling mechanisms and modular code minimize runtime failures and make scraping outcomes more reliable, which improves data quality and makes it easier to automate the data collection process.
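A common error-handling pattern is to retry transient failures with exponential backoff. The sketch below wraps any zero-argument fetch callable; the set of retryable status codes (429 and several 5xx codes), the attempt limit, and the base delay are illustrative assumptions rather than fixed rules.

```python
import random
import time
import urllib.error

RETRYABLE_STATUS = {429, 500, 502, 503, 504}  # assumed set of transient HTTP errors


def fetch_with_retries(fetch, max_attempts=4, base_delay=1.0, sleep=time.sleep):
    """Calls fetch(), retrying transient failures with exponential backoff plus jitter.

    Non-retryable HTTP errors (e.g. 404) are re-raised immediately; the last
    error is re-raised once max_attempts is exhausted.
    """
    for attempt in range(1, max_attempts + 1):
        try:
            return fetch()
        except urllib.error.HTTPError as err:
            # HTTPError subclasses URLError, so it must be caught first.
            if err.code not in RETRYABLE_STATUS or attempt == max_attempts:
                raise
        except urllib.error.URLError:
            # Network-level failure such as a DNS error or refused connection: also retry.
            if attempt == max_attempts:
                raise
        # Delays grow as base, 2*base, 4*base, ...; a little jitter spreads out retries.
        delay = base_delay * 2 ** (attempt - 1)
        sleep(delay + random.uniform(0, delay / 10))
```

Passing `sleep` as a parameter keeps the function easy to test, and wrapping a call such as `lambda: urllib.request.urlopen(url, timeout=10).read()` turns any fetch into a retrying one.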

Exploring Advanced Scraping Methods

Once the basics of web scraping are mastered, exploring advanced scraping methods can unlock even greater potential for data extraction. These methods may include headless browsing, which allows for the simulation of real browser behavior without a graphical user interface. This approach is especially beneficial for interacting with websites that heavily rely on JavaScript to display content.

Similarly, techniques such as data mining alongside web scraping can provide deeper insights into the gathered information. By combining scraping efforts with analytical tools, organizations can extract not only data but also actionable intelligence that can drive strategic decisions. As the landscape of web technologies continues to evolve, staying abreast of these advanced techniques will ensure that scraping remains an effective tool for data acquisition.

Maximizing Efficiency with Web Scraping Strategies

Efficiency is key in web scraping, especially when many data points need to be collected quickly. Because a scraper spends most of its time waiting for network responses, multi-threading or asynchronous programming can keep many requests in flight at once, significantly reducing the time needed to extract large datasets. That concurrency should still be capped so it does not defeat per-site rate limits.
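Here is a minimal `asyncio` sketch of bounded concurrency. An `asyncio.Semaphore` caps how many requests are in flight, so parallelism does not undermine rate limiting. `fake_fetch` is a hypothetical stand-in that sleeps instead of making real HTTP calls; in practice you would use an async HTTP client such as aiohttp or httpx. The `peak` counter exists only to show that the cap holds.

```python
import asyncio


async def fake_fetch(url):
    """Hypothetical stand-in for an async HTTP request; sleeps to simulate network latency."""
    await asyncio.sleep(0.1)
    return f"<html>{url}</html>"


async def fetch_all(urls, max_concurrency=5):
    """Fetches every URL concurrently, with at most max_concurrency requests in flight."""
    semaphore = asyncio.Semaphore(max_concurrency)
    in_flight = 0
    peak = 0  # highest number of simultaneous requests observed (for demonstration)

    async def bounded_fetch(url):
        nonlocal in_flight, peak
        async with semaphore:
            in_flight += 1
            peak = max(peak, in_flight)
            try:
                return await fake_fetch(url)
            finally:
                in_flight -= 1

    # gather returns results in input order, regardless of completion order.
    pages = await asyncio.gather(*(bounded_fetch(u) for u in urls))
    return pages, peak


urls = [f"https://example.com/page/{i}" for i in range(20)]
pages, peak = asyncio.run(fetch_all(urls))
print(len(pages), peak)  # 20 5
```

With 20 simulated requests of 0.1 s each and a cap of 5, the batch finishes in roughly 0.4 s instead of the 2 s a sequential loop would take.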

Additionally, optimizing data storage and retrieval methods is important for efficient operations. Utilizing databases or cloud storage solutions tailored for large volumes of information can streamline access and management of scraped data. Employing these strategies ensures that data extraction processes are not only efficient but also scalable, providing a robust framework for any organization relying on web scraping for insights.
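For modest volumes, Python's built-in `sqlite3` module already provides indexed, queryable storage. This sketch assumes a hypothetical `products` table keyed by URL and uses an upsert so that re-scraping a page updates its row instead of duplicating it (the `ON CONFLICT ... DO UPDATE` syntax requires SQLite 3.24 or newer). Larger deployments would typically move to a server database or cloud storage.

```python
import sqlite3


def open_store(path=":memory:"):
    """Opens (or creates) a SQLite database with a table for scraped products."""
    conn = sqlite3.connect(path)
    conn.execute(
        """CREATE TABLE IF NOT EXISTS products (
               url        TEXT PRIMARY KEY,
               name       TEXT,
               price      REAL,
               scraped_at TEXT DEFAULT CURRENT_TIMESTAMP
           )"""
    )
    return conn


def save_products(conn, rows):
    """Inserts (url, name, price) rows; re-scraped URLs overwrite their old values."""
    with conn:  # commits on success, rolls back on error
        conn.executemany(
            """INSERT INTO products (url, name, price) VALUES (?, ?, ?)
               ON CONFLICT(url) DO UPDATE SET
                   name = excluded.name,
                   price = excluded.price,
                   scraped_at = CURRENT_TIMESTAMP""",
            rows,
        )
```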

The Future of Web Scraping Technologies

The future of web scraping technologies looks promising, with continuous advancements enhancing the capabilities and efficiencies of data extraction processes. Artificial intelligence and machine learning are beginning to play a significant role in automating scraping tasks, allowing for smarter data aggregation and analysis. These technologies can help identify patterns, classify information, and make data-driven predictions based on scraping activities.

Moreover, as web technologies evolve, scraping methods will also adapt to keep pace with changing web standards. Innovations in web security may prompt further development of scraping tools that can navigate stricter access controls without compromising ethical considerations. By embracing these future trends, organizations can ensure that they remain at the forefront of data-driven strategies in an increasingly digital world.

Frequently Asked Questions

What is web scraping and how is it used in data extraction?

Web scraping is the automated process of extracting data from websites. It involves fetching a web page and extracting relevant information, which can be used for analysis, market research, or competitive intelligence. By using web scraping, businesses can gather valuable insights from online resources that are crucial for decision-making.

What are the most popular scraping methods used in web scraping?

Popular scraping methods include libraries and frameworks like BeautifulSoup and Scrapy, which simplify the data extraction process. Browser automation tools such as Selenium can render and interact with dynamic, JavaScript-driven content that plain HTTP requests miss. Each technique has its own advantages depending on the specific requirements of the scraping project.

What are some essential web scraping tools for effective data extraction?

Key web scraping tools include BeautifulSoup, a Python library for parsing HTML and XML documents, and Scrapy, a robust framework intended for building web crawlers. Other notable tools are Octoparse, ParseHub, and Import.io, which provide user-friendly interfaces for non-coders. Choosing the right tool depends on project complexity and user proficiency.

What are the ethical considerations in web scraping?

Ethical web scraping means adhering to a website's terms of service and its robots.txt file, which specifies the paths the site owner asks automated crawlers not to access (compliance is voluntary rather than technically enforced). It's crucial to avoid overwhelming servers with too many requests, keeping a responsible scraping pace to prevent disruption. Furthermore, respecting user privacy and not collecting sensitive information is vital for ethical practice.
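Python's standard library includes `urllib.robotparser` for checking robots.txt rules before fetching a URL. The sketch below parses an inline, hypothetical robots.txt so it runs offline; against a live site you would call `set_url(...)` and `read()` instead of `parse(...)`. The user-agent token is also hypothetical.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content for an example site.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 5
"""

robots = RobotFileParser()
robots.parse(ROBOTS_TXT.splitlines())

agent = "example-research-bot"  # hypothetical user-agent token
for url in ["https://example.com/products", "https://example.com/private/admin"]:
    print(url, robots.can_fetch(agent, url))
# https://example.com/products True
# https://example.com/private/admin False

print(robots.crawl_delay(agent))  # 5
```

A scraper can combine `can_fetch` with the `Crawl-delay` value to decide both whether and how often to request pages from a site.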

How can BeautifulSoup enhance my web scraping experience?

BeautifulSoup is a powerful Python library that simplifies the parsing of HTML and XML documents, allowing for easy navigation and data extraction from complex web pages. It enhances the web scraping experience by providing intuitive methods to search and modify the parse tree, making it easier to extract specific data elements with precision.

What common challenges do web scrapers face and how can they be overcome?

Common challenges in web scraping include handling CAPTCHAs, IP blocking, and dynamic website content. To overcome these issues, scrapers can implement techniques such as rotating IP addresses, using headless browsers for JavaScript-rendered sites, and employing CAPTCHA-solving services. Additionally, optimizing the scraping code and using proper error handling can significantly improve success rates.

What programming skills do I need for effective web scraping?

To excel at web scraping, you need working knowledge of a programming language. Python is the most common choice, since Scrapy and BeautifulSoup are Python libraries, while JavaScript offers its own browser-automation tools such as Puppeteer. Understanding HTML and CSS is also crucial for navigating and selecting the right elements during the data extraction process. Familiarity with regular expressions and with consuming APIs can further enhance your scraping capabilities.
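As a small example of where regular expressions help, scraped price strings often arrive wrapped in currency symbols and thousands separators. The pattern below is an assumption tuned for US-style formats like `$1,299.00`; other locales (e.g. `1.299,00 €`) would need a different rule, and `parse_price` is an illustrative helper name.

```python
import re
from decimal import Decimal

# Matches numbers like 1,299.00 or 24.5, allowing comma thousands separators (US style assumed).
PRICE_RE = re.compile(r"\d{1,3}(?:,\d{3})+(?:\.\d+)?|\d+(?:\.\d+)?")


def parse_price(text):
    """Returns the first price-like number in text as a Decimal, or None if there is none."""
    match = PRICE_RE.search(text)
    if match is None:
        return None
    return Decimal(match.group().replace(",", ""))


print(parse_price("Now only $1,299.00!"))  # 1299.00
print(parse_price("Price: USD 24.5"))       # 24.5
print(parse_price("Sold out"))              # None
```

`Decimal` is used instead of `float` so that prices keep their exact decimal value when stored or compared.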

Can web scraping be used for competitive analysis?

Yes, web scraping is an invaluable tool for competitive analysis. By collecting data on competitors’ pricing, products, and marketing strategies from their websites, businesses can gain insights into market trends and optimize their own strategies. However, it is important to ensure that such practices remain ethical and within legal boundaries to avoid potential repercussions.

| Key Points | Details |
|---|---|
| Methods | Different techniques for extracting data from websites. |
| Best Practices | Guidelines to follow for effective and ethical data scraping. |
| Legal Considerations | Understanding the laws and regulations surrounding web scraping. |
| Tools | Overview of libraries and frameworks like BeautifulSoup and Scrapy. |
| Ethical Standards | The importance of scraping ethically to avoid legal issues. |
| Coding Techniques | Optimizing code for a more efficient scraping process. |
| Common Challenges | Issues often faced during scraping and how to solve them. |

Summary

Web scraping is a vital technique for extracting data from various websites, allowing users and businesses to gather important information efficiently. By understanding methods, best practices, and legal guidelines, one can effectively navigate the complexities of web scraping. Tools like BeautifulSoup and Scrapy can enhance the scraping experience, while maintaining ethical standards ensures compliance with legal requirements. Recognizing common challenges and developing coding techniques will facilitate a smoother data collection process, leading to successful web scraping endeavors.