Web scraping is a technique for automatically extracting data from websites and turning unstructured web pages into structured data for analysis and decision-making. It speeds up research and competitive analysis by collecting data far faster than manual copying, which is why it is so widely used today. Tools such as BeautifulSoup and Scrapy let users automate much of this process. Because websites and scraping technologies keep changing, it pays to understand how each step of scraping works. It is equally important to weigh the ethics of scraping: complying with the law, honoring a site’s terms of service, and respecting its stated limits on automated access.

Data harvesting, another name for automated data collection from web resources, depends on gathering that information efficiently. Fetching web pages and parsing their HTML lets users reach the information they need without copying it by hand. The approach is useful for research and for comparative market analysis. As more professionals adopt data mining and other extraction strategies, understanding the nuances of scraping becomes increasingly important, and ethical collection practices keep that work responsible toward the sites being accessed.

Understanding Web Scraping Fundamentals

Web scraping uses software to collect large amounts of data from websites quickly. It is widely used for market research, academic study, and competitive analysis. By automating tedious data collection with scraping tools, individuals and businesses can streamline their operations and spend more of their time on analysis.

At its core, web scraping involves programmatically navigating the web, retrieving web pages, and extracting relevant data from them. The work can include parsing HTML, calling APIs, and crawling, that is, following links from page to page to discover more content. As these techniques mature, they can extract increasingly complex datasets that inform decisions ranging from product development to content curation.
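To make the crawling step concrete, here is a minimal sketch using only Python’s standard library. It collects the links on one page, resolves them to absolute URLs, and keeps those on the same host, which is roughly the set of pages a crawler would visit next. The names `LinkCollector` and `same_site_links`, the example URLs, and the choice to stay on a single host are assumptions for illustration; a real crawler would also need a queue, a visited set shared across pages, and the politeness measures covered in the ethics section.

```python
from html.parser import HTMLParser
from urllib.parse import urldefrag, urljoin, urlparse


class LinkCollector(HTMLParser):
    """Collects the href value of every <a> tag the parser encounters."""

    def __init__(self) -> None:
        super().__init__()
        self.hrefs: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.hrefs.append(value)


def same_site_links(html: str, base_url: str) -> list[str]:
    """Return absolute, de-duplicated links that stay on base_url's host."""
    collector = LinkCollector()
    collector.feed(html)
    collector.close()
    host = urlparse(base_url).netloc
    seen: set[str] = set()
    links: list[str] = []
    for href in collector.hrefs:
        # Resolve relative hrefs and drop "#fragment" parts so /about and
        # /about#team count as the same page.
        absolute, _fragment = urldefrag(urljoin(base_url, href))
        if urlparse(absolute).netloc == host and absolute not in seen:
            seen.add(absolute)
            links.append(absolute)
    return links


page = '<a href="/about">About</a> <a href="/about#team">Team</a> <a href="https://other.org/">Elsewhere</a>'
print(same_site_links(page, "https://example.com/index.html"))
# ['https://example.com/about']
```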

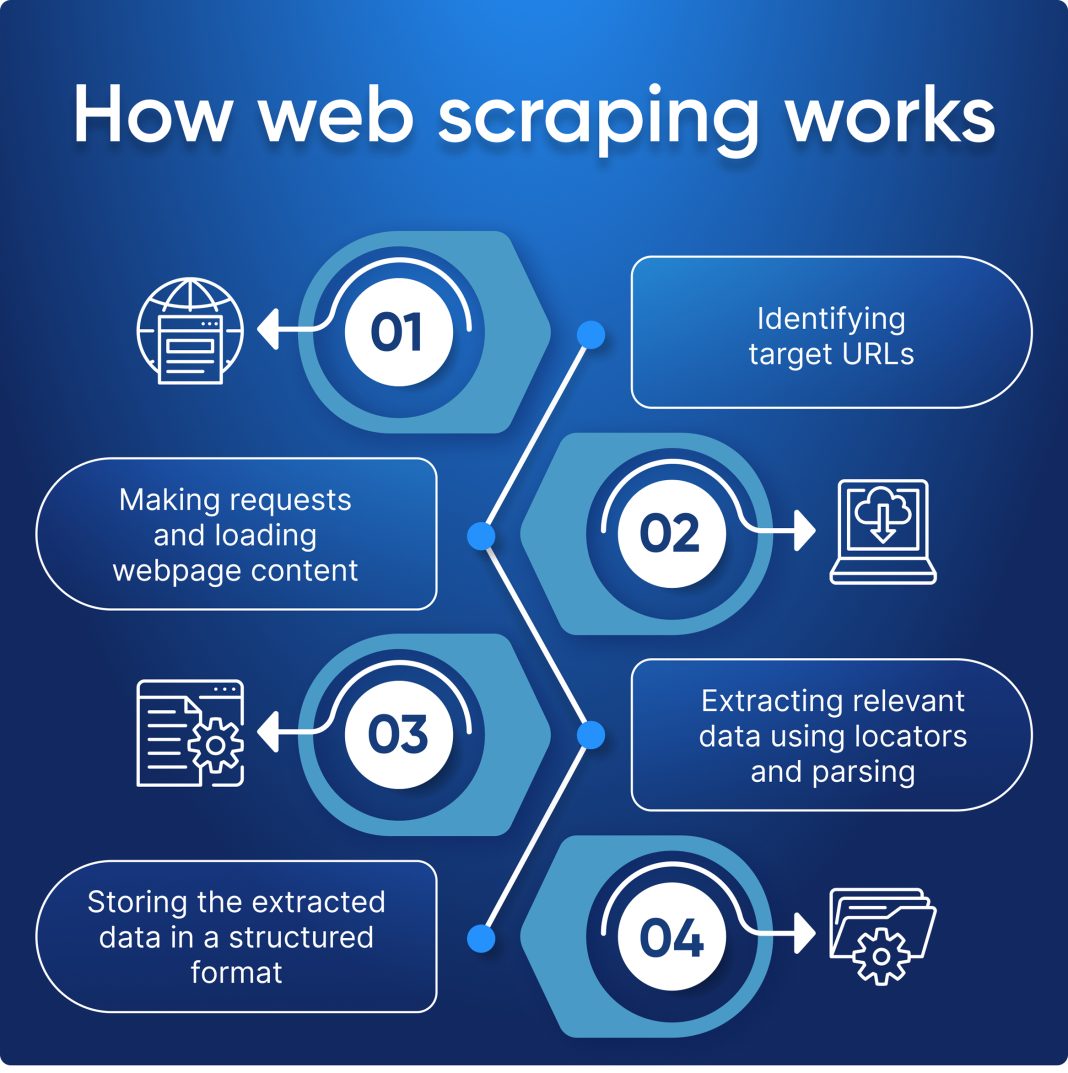

How Web Scraping Works Step-by-Step

The mechanics of web scraping can be broken down into a few steps. First, the scraper sends an HTTP request to the target page. The server then responds, typically with the page’s HTML. Next comes extraction: a parser walks the HTML structure to locate the elements that hold the needed information.
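These steps can be sketched in Python with the standard library alone (a library such as BeautifulSoup would make the parsing step shorter). `fetch_html` performs the request and reads the response, and `TextByClass` walks the HTML to collect the text of elements carrying a given class. All names, the user-agent string, and the 10-second timeout are hypothetical choices, and the parser assumes reasonably well-formed markup with explicit closing tags.

```python
from html.parser import HTMLParser
from urllib.request import Request, urlopen


def fetch_html(url: str, user_agent: str = "example-scraper/0.1") -> str:
    """Request and response: send an HTTP GET and return the body as text."""
    request = Request(url, headers={"User-Agent": user_agent})
    with urlopen(request, timeout=10) as response:  # raises HTTPError on 4xx/5xx
        charset = response.headers.get_content_charset() or "utf-8"
        return response.read().decode(charset, errors="replace")


class TextByClass(HTMLParser):
    """Extraction: collect the text of every <tag> whose class list contains css_class."""

    def __init__(self, tag: str, css_class: str) -> None:
        super().__init__()
        self.tag, self.css_class = tag, css_class
        self.depth = 0  # > 0 while inside a matching element
        self.buffer: list[str] = []
        self.results: list[str] = []

    def handle_starttag(self, tag, attrs):
        if self.depth:
            if tag == self.tag:  # track nested tags of the same name
                self.depth += 1
            return
        classes = (dict(attrs).get("class") or "").split()
        if tag == self.tag and self.css_class in classes:
            self.depth, self.buffer = 1, []

    def handle_endtag(self, tag):
        if self.depth and tag == self.tag:
            self.depth -= 1
            if self.depth == 0:
                # Collapse runs of whitespace into single spaces.
                self.results.append(" ".join("".join(self.buffer).split()))

    def handle_data(self, data):
        if self.depth:
            self.buffer.append(data)


def extract_text(html: str, tag: str, css_class: str) -> list[str]:
    parser = TextByClass(tag, css_class)
    parser.feed(html)
    parser.close()
    return parser.results


# Offline example; against a live site you would pass fetch_html(url) instead.
sample = '<ul><li class="item new">First &amp; best</li><li class="item">Second\n  one</li><li>skip</li></ul>'
print(extract_text(sample, "li", "item"))
# ['First & best', 'Second one']
```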

Once the data has been extracted, the scraper can save it as CSV, JSON, or direct database entries, which makes later analysis and manipulation easier. How well a scraper performs depends largely on the developer’s understanding of HTML and of the specific tags and attributes that mark the target data. With that understanding, users can tailor their selectors precisely to the elements they need.
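As a minimal sketch of the formatting step, the functions below turn extracted records into CSV and JSON text using Python’s `csv` and `json` modules. `to_csv`, `to_json`, and the sample records are hypothetical, and the CSV writer assumes every record has the same keys as the first one.

```python
import csv
import io
import json


def to_csv(records: list[dict]) -> str:
    """Serialize flat dicts to CSV text; the first record's keys become the header."""
    if not records:
        return ""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=list(records[0]))
    writer.writeheader()
    writer.writerows(records)  # values containing commas or quotes are quoted automatically
    return buffer.getvalue()


def to_json(records: list[dict]) -> str:
    """Serialize the same records as a JSON array, keeping non-ASCII text readable."""
    return json.dumps(records, ensure_ascii=False, indent=2)


rows = [
    {"name": "Widget, large", "price": "19.99"},
    {"name": "Gadget", "price": "4.50"},
]
print(to_csv(rows))
print(to_json(rows))
```

Returning strings keeps the functions easy to test; to write a file instead, open it with `newline=""` as the `csv` documentation recommends and pass the file object to `csv.DictWriter` directly.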

Popular Tools and Libraries for Web Scraping

Several web scraping tools and libraries cater to different scraping needs. BeautifulSoup, for instance, is a popular Python library for parsing HTML and XML documents, making it easy to locate and extract specific pieces of data. It does not download pages itself, so it is usually paired with an HTTP client such as requests. Its simple interface makes it approachable for beginners while remaining useful to experienced developers working with messy or deeply nested markup.

Scrapy, by contrast, is an open-source web crawling framework designed for larger, multi-page scraping projects. It handles request scheduling and concurrency, and it offers settings for the user agent, politeness rules, and exporting scraped data to various storage formats, which suits crawls that collect large volumes of data over time. Selenium, meanwhile, automates real browsers, so it can capture content rendered by JavaScript or revealed only after user interaction. Together, these tools cover most scraping needs, from parsing a single page to crawling an entire site.
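In Scrapy, many of the features just described are switched on in a project’s `settings.py`. The fragment below uses real Scrapy setting names, but the values, the user-agent string with its contact URL, and the `items.json` file name are placeholder assumptions rather than recommended defaults.

```python
# settings.py for a hypothetical Scrapy project. The setting names are Scrapy's;
# the values are illustrative choices only.

# Identify the crawler so site owners can see who is visiting (placeholder URL).
USER_AGENT = "example-scraper/0.1 (+https://example.com/contact)"

# Check each site's robots.txt before requesting pages.
ROBOTSTXT_OBEY = True

# Wait between requests to the same site and cap parallel requests per domain.
DOWNLOAD_DELAY = 2.0
CONCURRENT_REQUESTS_PER_DOMAIN = 2

# Let Scrapy adjust the delay according to how quickly the server responds.
AUTOTHROTTLE_ENABLED = True

# Export scraped items to a JSON file.
FEEDS = {
    "items.json": {"format": "json", "encoding": "utf8"},
}
```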

Web Scraping Ethics: Best Practices to Follow

While web scraping opens up a wealth of data, it also carries ethical responsibility. A core best practice is respecting a website’s robots.txt file, which states which paths automated clients may crawl. The robots.txt file is a voluntary convention (standardized as RFC 9309) rather than a law, but honoring it shows good faith toward website owners and is widely expected of well-behaved scrapers.
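Python’s standard library includes `urllib.robotparser` for this check. The sketch below parses robots.txt text that is already in memory so that it runs offline; the rules, the URLs, and the `example-scraper` user-agent name are made up for illustration.

```python
from urllib.robotparser import RobotFileParser

USER_AGENT = "example-scraper"  # hypothetical name for this scraper


def robots_checker(robots_txt: str) -> RobotFileParser:
    """Build a checker from robots.txt text that has already been downloaded."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


rules = """\
User-agent: *
Disallow: /private/
Crawl-delay: 2
"""
checker = robots_checker(rules)
print(checker.can_fetch(USER_AGENT, "https://example.com/products"))      # True
print(checker.can_fetch(USER_AGENT, "https://example.com/private/data"))  # False
print(checker.crawl_delay(USER_AGENT))                                    # 2

# Against a live site, let the parser download the file itself:
#   parser = RobotFileParser("https://example.com/robots.txt")
#   parser.read()
```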

Ethical web scraping also means limiting server load. Sending many requests in a short time can degrade a site’s performance and often gets the scraper’s IP address banned. Adding delays between requests, throttling the request rate, and keeping the overall volume of scraping modest both protect the site and make your data collection more reliable.
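A simple way to add such delays is a throttle that enforces a minimum gap between requests. This sketch assumes a single-threaded scraper and one global interval; `Throttle` is a hypothetical helper, and in practice you might keep one per domain and take the interval from a site’s `Crawl-delay` when it publishes one. The short interval in the demo only keeps it quick to run.

```python
import time


class Throttle:
    """Enforce a minimum delay between consecutive requests."""

    def __init__(self, min_interval: float) -> None:
        self.min_interval = min_interval
        self._last = None  # time.monotonic() value of the previous request

    def wait(self) -> None:
        """Block until at least min_interval seconds have passed since the last call."""
        now = time.monotonic()
        if self._last is not None:
            remaining = self.min_interval - (now - self._last)
            if remaining > 0:
                time.sleep(remaining)
        self._last = time.monotonic()


# A real scraper might use Throttle(2.0); 0.2 s keeps this demo short.
throttle = Throttle(min_interval=0.2)
start = time.monotonic()
for _ in range(3):
    throttle.wait()
    # ...send the request for the next page here...
print(f"3 requests took {time.monotonic() - start:.2f}s")
```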

The Future of Web Scraping Technologies

As web technologies advance, so does web scraping. New tools and techniques keep appearing to cope with changing web environments, particularly sites that render content dynamically with JavaScript. The spread of APIs also shapes scraping practice: many organizations now offer official APIs that return structured data, often as JSON, which is a less intrusive way to collect information than scraping HTML.

Artificial intelligence and machine learning are also starting to play a role in scraping. These technologies can help scrapers interpret the context of data, automate complex extraction tasks, and improve the accuracy of the information gathered, which suggests that data extraction will keep becoming more streamlined and efficient.

Practical Applications of Web Scraping

Web scraping is used across many industries wherever decisions depend on data. Businesses can collect market prices to see how competitors price their products and how consumer behavior shifts. Because scrapers can refresh this data frequently, organizations can adjust their marketing tactics and pricing models to better match consumer demand.
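For the pricing use case, scraped prices usually arrive as text such as `$1,299.99` and must be normalized before competitors can be compared. This sketch assumes US-style formatting (comma for thousands, period for decimals); `parse_price`, `cheapest`, and the shop data are hypothetical. It uses `Decimal` rather than `float` so that currency amounts are not subject to binary rounding.

```python
import re
from decimal import Decimal
from typing import Optional

# First run of digits, optionally with thousands commas and a decimal part.
PRICE_PATTERN = re.compile(r"\d[\d,]*(?:\.\d+)?")


def parse_price(text: str) -> Optional[Decimal]:
    """Turn a scraped price string such as '$1,299.99' into a Decimal.

    Assumes '.' is the decimal separator and ',' groups thousands;
    returns None when the text contains no number (e.g. 'Sold out').
    """
    match = PRICE_PATTERN.search(text)
    if match is None:
        return None
    return Decimal(match.group().replace(",", ""))


def cheapest(offers: dict) -> tuple:
    """Return (seller, price) for the lowest parseable price among competitor offers."""
    prices = {seller: parse_price(text) for seller, text in offers.items()}
    valid = {seller: price for seller, price in prices.items() if price is not None}
    best = min(valid, key=valid.get)  # raises ValueError if no offer has a price
    return best, valid[best]


print(cheapest({"shop-a": "$19.99", "shop-b": "USD 18.50", "shop-c": "Call for price"}))
# ('shop-b', Decimal('18.50'))
```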

In academia, researchers use web scraping tools to gather data for their studies, such as statistics from online articles or posts and responses collected across multiple platforms. Automating this collection lets scholars spend more time on analysis and interpretation, improving both the quality and the efficiency of their research.

Challenges and Solutions in Web Scraping

Despite its advantages, web scraping has its own challenges. Websites often use CAPTCHAs, IP blocking, and JavaScript-rendered content, any of which can stop a scraper from reaching the information it needs. Without the right approach and tools, scraping can quickly become frustrating, so it pays to keep up with current techniques for handling these obstacles.

Browser automation tools like Selenium are an effective answer to interactive sites, and running the browser in headless mode renders JavaScript-driven pages without opening a visible window. Rotating IP addresses spreads requests across several addresses, which reduces the chance that any single one is blocked. Together, these techniques help scrapers stay adaptable and keep access to the data available across the web.
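A complementary way to handle blocking, one that also respects the target site, is to slow down when the server says it is overloaded: HTTP 429 (Too Many Requests) and 503 (Service Unavailable) responses often carry a `Retry-After` header. This technique is not named in the text above; the sketch retries those statuses with exponential backoff and random jitter. The function names, retry limit, and delay values are assumptions, and only the numeric-seconds form of `Retry-After` is handled, not the HTTP-date form.

```python
import random
import time
from typing import Optional
from urllib.error import HTTPError
from urllib.request import Request, urlopen

RETRYABLE_STATUSES = {429, 503}  # Too Many Requests, Service Unavailable


def backoff_delay(attempt: int, retry_after: Optional[str] = None,
                  base: float = 1.0, cap: float = 60.0) -> float:
    """Seconds to wait before retry number `attempt` (0-based).

    Honours a Retry-After header given in seconds; otherwise uses capped
    exponential backoff with "full jitter" (a random wait up to the cap).
    """
    if retry_after is not None and retry_after.strip().isdigit():
        return float(retry_after.strip())
    return random.uniform(0, min(cap, base * 2 ** attempt))


def fetch_with_backoff(url: str, max_attempts: int = 4,
                       user_agent: str = "example-scraper/0.1") -> bytes:
    """GET url, waiting and retrying when the server signals overload."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(max_attempts):
        try:
            request = Request(url, headers={"User-Agent": user_agent})
            with urlopen(request, timeout=10) as response:
                return response.read()
        except HTTPError as err:
            if err.code not in RETRYABLE_STATUSES or attempt == max_attempts - 1:
                raise  # not an overload signal, or out of attempts
            time.sleep(backoff_delay(attempt, err.headers.get("Retry-After")))
    raise RuntimeError("unreachable")
```

Full jitter spreads retries from many clients over time instead of having them all return at the same moment.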

Extracting Data Safely: Legal Considerations

One of the key aspects of web scraping that needs careful consideration is legality. Various jurisdictions have differing laws regarding data ownership and usage, which can complicate the use of web scraping for data extraction. It’s crucial to familiarize oneself with these regulations and the specific terms of service of the websites being scraped, as non-compliance can result in legal repercussions or loss of access.

Moreover, web scraping ethics should guide individuals towards obtaining permission from website owners whenever feasible. Not only does this promote transparency, but it also fosters goodwill between data miners and site operators. By navigating the legal landscape with consideration for both ethical responsibilities and regulations, web scrapers can extract valuable data while respecting the rights of others.

Conclusion: Mastering the Art of Web Scraping

Mastering web scraping is a valuable skill in today’s data-driven world. By understanding how web scraping works and the ethical considerations involved, aspiring data miners can use this technique to uncover insights and opportunities that manual data collection would miss.

As technology advances, so too will the methodologies underpinning web scraping. Staying abreast of new tools, techniques, and ethical considerations will ensure that web scrapers continue to effectively and responsibly access data from the web, fostering innovation and insight across industries.

Frequently Asked Questions

What is web scraping and how does it work?

Web scraping is the process of automatically extracting data from websites. It works by sending a request to a server hosting the web page, retrieving its content, and then parsing the HTML to isolate and extract the required information, which can include text, images, or links.

What are the most popular web scraping tools?

Some of the most popular web scraping tools include BeautifulSoup, a Python library for parsing HTML; Scrapy, an open-source web crawling framework; and Selenium, which automates web applications and can also be leveraged for scraping data from complex websites.

What ethical considerations should I be aware of with web scraping?

When engaging in web scraping, it’s important to respect the legal guidelines and ethical considerations involved. Always check a website’s terms of service and its robots.txt file to ensure that scraping is permitted, and avoid overloading servers with excessive requests.

What web scraping techniques can enhance data extraction?

Effective web scraping techniques include using official REST APIs when they are available, employing headless browsers for dynamic content, and rate limiting requests to reduce the load on target sites and the risk of being blocked.

How can web scraping be used for business intelligence?

Web scraping can be a valuable tool for business intelligence, allowing companies to gather competitive data, track market trends, conduct sentiment analysis, and retrieve valuable information from various online sources, thereby gaining insights that drive strategic decisions.

| Key Points | Details |
|---|---|
| What is Web Scraping? | Web scraping refers to the automatic extraction of information from websites, often used for data mining and research. |
| How Does Web Scraping Work? | It involves sending requests to a server, retrieving page content, and parsing the HTML to find specific data elements. |
| Tools and Libraries | 1. BeautifulSoup: for parsing HTML and XML. 2. Scrapy: an open-source web crawling framework. 3. Selenium: automates web applications and can be used for scraping. |
| Ethics and Legal Considerations | Be aware of the legal issues related to scraping; review the website’s terms of service and robots.txt file before scraping, and limit your request rate. |

Summary

Web scraping is a valuable technique for collecting data from the internet. By understanding the processes involved and following ethical guidelines, users can effectively harness this tool to gather insights, perform analyses, and enhance their projects. Responsible use of web scraping opens up vast opportunities for data-driven decision-making.