Web scraping is a critical technique in the realm of data extraction that allows users to retrieve information from websites in an automated manner. By employing various web scraping tools, individuals and businesses can streamline the process of collecting data, making it faster and more efficient. Techniques such as HTML parsing play a vital role in this process, helping to dissect web pages to extract pertinent information. For those looking to enhance their capabilities, understanding the best practices for web scraping is essential to ensure compliance with website terms. Overall, automated data collection through web scraping can significantly benefit analytics, research, and development across numerous industries.

Data harvesting from online platforms, also known as web scraping, involves systematic retrieval of information available on the Internet. This technique encompasses various methods and tools like browser automation and API requests that enable seamless data acquisition from dynamic and structured sources. By leveraging HTML parsing and other advanced strategies, users can efficiently gather insights that fuel business intelligence and research initiatives. Recognizing key strategies and best practices in this field can aid in creating robust data pipelines while adhering to ethical guidelines. Ultimately, this innovative approach to data collection opens up new possibilities for leveraging online content.

Understanding the Basics of Web Scraping

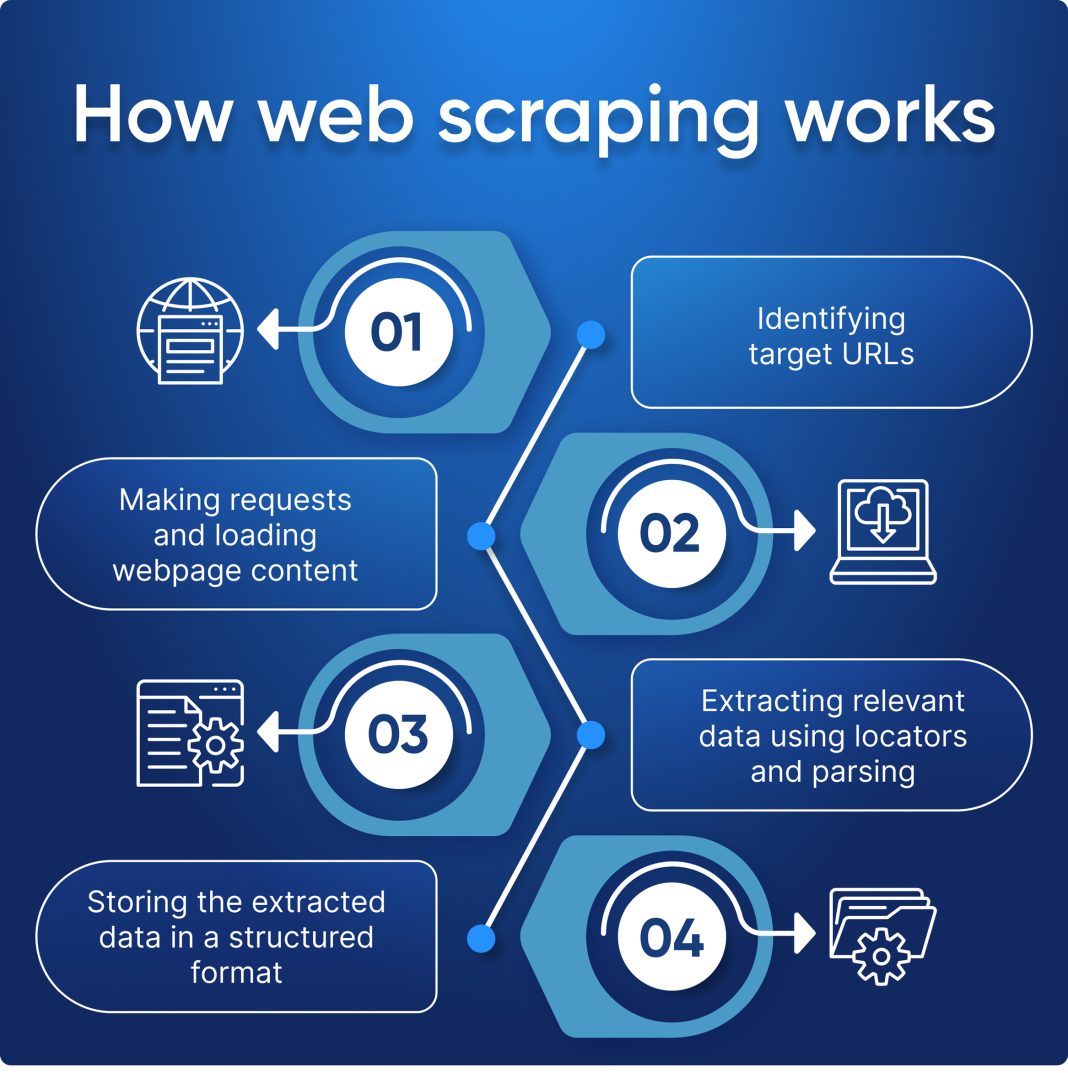

Web scraping is a data extraction process that lets users gather information from websites automatically. By automating collection, it removes much of the tedium of manual data entry and processing. It helps to distinguish the extraction itself, which yields raw data, from the downstream uses of that data, such as analytics and market research. This introductory understanding prepares readers for the tools and techniques that make data gathering efficient.

At its core, web scraping leverages specialized algorithms and user-defined instructions to navigate web pages and extract relevant data elements. Whether this involves parsing HTML documents or utilizing APIs provided by some web platforms, the key is to gather structured information that meets user needs. Grasping these fundamental concepts sets the stage for deeper exploration into both the technology behind web scraping and the best practices necessary for ethical and effective information retrieval.

Techniques for Effective Data Extraction

Various techniques are at the disposal of individuals interested in web scraping for effective data extraction. HTML parsing remains one of the most prevalent methods, allowing users to employ libraries like Beautiful Soup in Python for efficient data manipulation. This approach enables programmers to dissect web pages, selectively extracting tags, attributes, or textual elements that hold value for their specific projects. Understanding how these parsing techniques work is essential for anyone looking to build a robust scraping operation.
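As a rough illustration of extracting tags, attributes, and text, the sketch below collects the `href` and link text of every anchor in a page. To stay dependency-free it uses Python's standard-library `html.parser` rather than Beautiful Soup, so it shows the idea of HTML parsing and not Beautiful Soup's API. The `LinkExtractor` class, the `extract_links` helper, and the sample markup are all hypothetical names invented for this example.

```python
from html.parser import HTMLParser


class LinkExtractor(HTMLParser):
    """Collect (href, text) pairs for every <a> tag that has an href."""

    def __init__(self):
        super().__init__()
        self.links = []          # finished (href, text) pairs
        self._href = None        # href of the <a> currently open, if any
        self._text_parts = []    # text fragments seen inside that <a>

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            # attrs arrives as a list of (name, value) tuples
            self._href = dict(attrs).get("href")
            self._text_parts = []

    def handle_data(self, data):
        # Only keep text that appears inside an anchor with an href
        if self._href is not None:
            self._text_parts.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            text = "".join(self._text_parts).strip()
            self.links.append((self._href, text))
            self._href = None


def extract_links(html):
    """Parse an HTML string and return its links in document order."""
    parser = LinkExtractor()
    parser.feed(html)
    parser.close()
    return parser.links


# Made-up markup standing in for a downloaded page
SAMPLE_HTML = """
<ul>
  <li><a href="/products/1">Blue <b>Widget</b></a></li>
  <li><a href="/products/2">Red Widget</a></li>
  <li><a name="anchor-only">No link here</a></li>
</ul>
"""

if __name__ == "__main__":
    for href, text in extract_links(SAMPLE_HTML):
        print(href, "->", text)
```

A library such as Beautiful Soup wraps this kind of event handling behind a searchable tree, which is usually more convenient on real pages.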

Another popular technique involves the use of API requests. Many websites offer APIs that serve as structured interfaces for data retrieval, eliminating the need for HTML parsing in certain instances. By querying these APIs directly, scrapers can access data in a clean, organized format, improving accuracy while reducing the effort that traditional scraping requires. For dynamic websites, browser automation tools such as Selenium complement these approaches by driving a real browser, so a scraper can click, scroll, and wait for content as a visitor would, and then collect data from heavily scripted pages.
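To make the API route concrete, here is a minimal sketch that builds a query URL and reads records out of a JSON response using only the standard library. The endpoint `https://api.example.com/products`, the `page` and `per_page` parameters, and the `items`, `name`, and `price` fields are assumptions made up for illustration; a real API documents its own URL scheme and response shape.

```python
import json
from urllib.parse import urlencode
from urllib.request import Request, urlopen


def build_url(base_url, params):
    """Append query parameters to a base endpoint URL."""
    return f"{base_url}?{urlencode(params)}"


def parse_products(payload):
    """Pull (name, price) pairs out of a JSON response body.

    Assumes a hypothetical shape: {"items": [{"name": ..., "price": ...}]}.
    """
    data = json.loads(payload)
    return [(item["name"], item["price"]) for item in data["items"]]


def fetch_products(base_url, params, timeout=10):
    """Request the endpoint and parse the JSON body (needs network access)."""
    request = Request(
        build_url(base_url, params),
        headers={"Accept": "application/json"},
    )
    with urlopen(request, timeout=timeout) as response:
        return parse_products(response.read().decode("utf-8"))


if __name__ == "__main__":
    # Offline demonstration with a canned response body
    sample = (
        '{"items": [{"name": "Blue Widget", "price": 9.5},'
        ' {"name": "Red Widget", "price": 12}]}'
    )
    print(build_url("https://api.example.com/products", {"page": 1, "per_page": 50}))
    print(parse_products(sample))
```

Because the response is already structured, no HTML parsing step is needed; the work reduces to choosing parameters and reading fields.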

Exploring the Best Tools for Web Scraping

The success of web scraping largely depends on the tools employed during the data extraction process. Among the most widely used tools is Beautiful Soup, recognized for its ease of use and suitability for beginners. This powerful Python library effectively helps users navigate, search, and extract information from HTML files, making it a favorite for those just starting with web scraping. In addition to Beautiful Soup, Scrapy stands out as a comprehensive framework that enables users to create crawlers that can handle massive amounts of data while managing concurrent requests seamlessly.
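The idea of managing concurrent requests can be sketched without any framework. The example below is not Scrapy and does not use its API; it is a small standard-library illustration of the same principle, a bounded pool of workers fetching several pages at once. The `crawl` and `fake_fetch` functions are hypothetical, and `fake_fetch` stands in for a real HTTP call so the sketch runs offline.

```python
from concurrent.futures import ThreadPoolExecutor


def crawl(urls, fetch, max_workers=4):
    """Fetch every URL with at most max_workers requests in flight.

    fetch is any callable that takes a URL and returns the page body.
    Failures are recorded per URL instead of aborting the whole crawl.
    """
    results = {}
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # Submit everything up front; the pool caps actual concurrency
        futures = {url: pool.submit(fetch, url) for url in urls}
        for url, future in futures.items():
            try:
                results[url] = future.result()
            except Exception as exc:  # keep going when one page fails
                results[url] = exc
    return results


def fake_fetch(url):
    """Stand-in for a real HTTP request (hypothetical, for offline use)."""
    if url.endswith("/broken"):
        raise ValueError(f"could not fetch {url}")
    return f"<html>{url}</html>"


if __name__ == "__main__":
    pages = crawl(
        ["https://example.com/a", "https://example.com/b", "https://example.com/broken"],
        fake_fetch,
        max_workers=2,
    )
    for url, body in pages.items():
        print(url, "->", body)
```

A framework like Scrapy adds scheduling, retries, and throttling on top of this basic pattern.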

For those looking to extract data from dynamic web pages, Puppeteer, a Node.js library, provides a high-level API for controlling Chrome or Chromium browsers. It lets users run scripts that mimic user interactions, which makes it well suited to sites that load content dynamically via JavaScript. Each of these tools brings unique strengths to the data extraction process. Familiarity with them helps users select the best fit for their specific scraping needs and apply best practices effectively.

Best Practices for Ethical Web Scraping

As web scraping continues to grow in popularity, adhering to best practices becomes increasingly important to maintain ethical and legal standards. Above all, respecting the terms of service of the websites you scrape is paramount. Many sites outline their policies on automated data collection in their terms, and ignoring them can lead to legal repercussions or IP bans. Users should familiarize themselves with these terms before starting a scraping project to ensure compliance and avoid potential pitfalls.

Additionally, implementing rate limiting and respectful scraping practices is crucial. This involves spacing out requests to a site to minimize potential overload or disruption of services. Many advanced web scrapers incorporate techniques to randomize request intervals or mimic human browsing behavior, which further minimizes the risk of being blocked. By following these best practices for web scraping, users can harness the power of automated data collection responsibly and sustainably.
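One way to space out requests with randomized intervals is sketched below. The function names, the one-second base delay, and the half-second jitter are assumptions chosen for illustration, not recommended values; appropriate limits depend on the site and its stated policies. The `fetch` and `sleep` arguments are injectable so the logic can be exercised without network access or real waiting.

```python
import random
import time


def polite_delay(base_seconds, jitter_seconds, rng=random):
    """Return base_seconds plus a random extra in [0, jitter_seconds]."""
    return base_seconds + rng.uniform(0, jitter_seconds)


def fetch_politely(urls, fetch, base_seconds=1.0, jitter_seconds=0.5, sleep=time.sleep):
    """Call fetch on each URL, pausing between consecutive requests."""
    pages = []
    for index, url in enumerate(urls):
        if index > 0:  # no need to wait before the very first request
            sleep(polite_delay(base_seconds, jitter_seconds))
        pages.append(fetch(url))
    return pages


if __name__ == "__main__":
    # Record the waits instead of sleeping so the demo finishes instantly
    waits = []
    pages = fetch_politely(
        ["https://example.com/1", "https://example.com/2", "https://example.com/3"],
        fetch=lambda url: f"body of {url}",
        sleep=waits.append,
    )
    print(len(pages), "pages fetched with", len(waits), "pauses")
```

Keeping the delay logic in its own function makes it easy to swap in a stricter policy later, such as a longer base delay for a small site.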

Data Acquisition for Research and Analysis

Data acquisition through web scraping plays an instrumental role in both research and analysis across various sectors. Whether in academia or business, the ability to extract large volumes of data from public websites enables researchers and analysts to derive insights that would otherwise be difficult or impossible to obtain. With an array of data available online, targeted scraping can provide valuable information for trend analysis, market research, and competitive analysis, effectively enhancing decision-making processes.

Furthermore, the extracted data can be used in predictive analytics, feeding models that flag risks and identify opportunities based on historical trends. By scraping responsibly and adhering to ethical guidelines, data scientists can keep their sources reliable and protect the integrity of their analytical work. This reinforces the notion that web scraping is not merely an automated process but a strategic method of acquiring knowledge and understanding through data.

The Role of HTML Parsing in Web Scraping

HTML parsing is a fundamental aspect of web scraping that allows users to extract the necessary information from web pages effectively. By utilizing libraries such as Beautiful Soup, users can parse HTML documents, breaking down complex structures into manageable elements. This technique not only streamlines the data extraction process but also enhances the user’s ability to target specific information, from text content to attribute values, ensuring that no relevant data goes unnoticed.

Furthermore, mastering HTML parsing boosts the efficiency and accuracy of web scraping projects. Users who are familiar with the Document Object Model (DOM) can navigate through nested elements without difficulty, leveraging CSS selectors or XPath for precision targeting. As web technologies evolve, understanding how HTML structures and tags work remains crucial for anyone engaged in data extraction, allowing them to adapt their scraping strategies to various site architectures.
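The following sketch shows path-based targeting of nested elements. It relies on the limited XPath subset supported by Python's standard-library `xml.etree.ElementTree`, which also requires well-formed markup; real-world HTML is often not well formed and usually calls for a more lenient parser. The sample page, the `product`, `name`, and `price` class names, and the `data-sku` attribute are invented for this example.

```python
import xml.etree.ElementTree as ET

# Made-up, well-formed markup standing in for a product listing page
SAMPLE_PAGE = """
<html>
  <body>
    <div class="product" data-sku="A1">
      <span class="name">Blue Widget</span>
      <span class="price">9.50</span>
    </div>
    <div class="product" data-sku="B2">
      <span class="name">Red Widget</span>
      <span class="price">12.00</span>
    </div>
    <div class="banner"><span class="name">Ignore me</span></div>
  </body>
</html>
"""


def extract_products(markup):
    """Return (sku, name, price) for every div whose class is 'product'."""
    root = ET.fromstring(markup.strip())
    products = []
    # .// searches all descendants; [@class='product'] filters by attribute
    for div in root.findall(".//div[@class='product']"):
        name = div.find("span[@class='name']").text
        price = float(div.find("span[@class='price']").text)
        products.append((div.get("data-sku"), name, price))
    return products


if __name__ == "__main__":
    for sku, name, price in extract_products(SAMPLE_PAGE):
        print(sku, name, price)
```

Note that the banner's `name` span is skipped because the path first narrows to `product` containers, which is the kind of precision that selectors provide.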

Automation in Data Collection and Its Benefits

The automation of data collection through web scraping tools offers significant benefits that enhance productivity and accuracy. Automated data collection reduces the time and manual effort involved in gathering information from multiple sources, enabling users to focus on analyzing the data rather than on the tedious task of acquisition. With the right tools, users can schedule scraping tasks so that the information they gather stays fresh and up to date, which is particularly beneficial for time-sensitive projects.

Additionally, automated data collection through scraping minimizes human error, as algorithms execute predefined instructions consistently without fatigue or distraction. This level of reliability is essential in fields such as finance or marketing, where accurate data is critical for making informed decisions. By leveraging automation, organizations can streamline their data workflows, ultimately leading to more insightful analytics and strategic outcomes.

Navigating Legal and Ethical Boundaries in Web Scraping

The legal and ethical landscape surrounding web scraping is complex, requiring users to navigate a mix of privacy laws and terms of service. While scraping public websites for data may seem permissible, users must consider the legal implications, including intellectual property rights and data protection regulations. Awareness of laws such as the Computer Fraud and Abuse Act (CFAA) in the United States is essential for scraper developers to ensure they operate within legal boundaries.

Moreover, ethical scraping is foundational for maintaining good relationships with data sources while ensuring the integrity of scraped information. Scrapers should be transparent about their methods, use official APIs when available, and be mindful of how their actions affect the web infrastructure. Emphasizing ethics in web scraping fosters a culture of respect and responsibility, further legitimizing the practice within the data science community.

Frequently Asked Questions

What is web scraping and how does it work?

Web scraping is the automated process of extracting information from websites. It works by parsing the HTML structure of web pages and using various web scraping tools, like Beautiful Soup or Scrapy, to collect the desired data efficiently.

What are the best practices for web scraping?

The best practices for web scraping involve respecting the website’s terms of service, using tools that limit the number of requests made to a server, and ensuring compliance with legal regulations. Additionally, leveraging proper HTML parsing techniques can enhance the efficiency of your data extraction.

What tools can I use for automated data collection in web scraping?

Several tools can facilitate automated data collection in web scraping, including Beautiful Soup for HTML parsing, Scrapy for web crawling, and Puppeteer for automating browser interactions. These tools streamline the extraction process and make it easier to handle complex websites.

How can HTML parsing improve my web scraping efficiency?

HTML parsing improves web scraping efficiency by enabling you to systematically analyze the structure of web pages, thus allowing you to target specific elements within the HTML. Libraries like Beautiful Soup make it easier to navigate and extract data from web pages with complex layouts.

Are there any legal issues associated with web scraping?

Yes, there can be legal issues associated with web scraping, as it may violate a website’s terms of service or copyright laws. It’s crucial to review the legal guidelines and ensure compliance when engaging in automated data collection activities.

Can I use APIs instead of web scraping for data extraction?

Yes, many websites provide APIs that allow for structured data extraction, which is often more reliable and compliant than web scraping. Using APIs can simplify the data collection process while minimizing legal and ethical concerns.

What is the role of browser automation in web scraping?

Browser automation plays a vital role in web scraping by allowing users to interact with dynamic web pages and simulate user behaviors. Tools like Selenium and Puppeteer enable you to automate browser actions, making it easier to extract data from websites that require user interactions.

| Key Point | Details |
|---|---|
| Definition of Web Scraping | Web scraping is the process of automatically extracting information from websites, in place of manual collection. |
| Web Scraping Techniques | 1. HTML parsing using libraries (e.g., Beautiful Soup). 2. API requests for structured data. 3. Browser automation with tools like Selenium. |
| Popular Web Scraping Tools | 1. Beautiful Soup (Python). 2. Scrapy (Python). 3. Puppeteer (Node.js). |
| Conclusion | Web scraping is a valuable method for data collection but requires adherence to website terms of service. |

Summary

Web scraping is an essential technique for gathering data from various online sources. It leverages multiple methods and tools to efficiently extract information, making it valuable for businesses and researchers alike. Understanding the different techniques, such as HTML parsing and API requests, along with the popular tools available allows users to effectively utilize web scraping. However, it is crucial to respect the legal boundaries and ethical considerations when scraping data from any website.