HTML content parsing is an essential process in web development and data analysis, allowing us to extract valuable information from web pages. With effective web scraping techniques, developers can work with HTML structures such as `<div>`, `<p>`, and `<h1>` tags to gather targeted content. Libraries like BeautifulSoup and Scrapy make it practical to automate web content extraction, and a BeautifulSoup tutorial is a common starting point. These HTML extraction methods streamline data collection and improve the overall efficiency of web projects. Whether you are looking for practical Scrapy examples or trying to understand the nuances of web scraping, mastering HTML content parsing is a core skill for any aspiring web developer.

Parsing HTML content means breaking down and analyzing the markup used to build web pages. Extracting web content this way involves dissecting individual HTML elements, including sections identified by tags like `<div>`, `<p>`, and `<h1>`.

Understanding HTML Content Parsing

HTML content parsing is essential for developers and data scientists who want to extract meaningful information from web pages. By understanding how an HTML document is structured, users can target specific elements such as `<div>`, `<p>`, and `<h1>` tags to gather content efficiently. This enables effective web scraping and makes it possible to automate content extraction across large datasets. Mastering HTML parsing builds the skills needed to put web data to use across many applications.

In essence, parsing is a method of breaking down and interpreting the HTML structure of a webpage, which allows for the identification of relevant data. Whether it’s parsing out product information from e-commerce sites or pulling together blog content for research, understanding how to navigate HTML tags is crucial. Tools such as BeautifulSoup and Scrapy facilitate this parsing by providing straightforward methods to locate and extract the required information, making the programming process simpler and more efficient.
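To make the product-information case concrete, here is a minimal sketch. It assumes the `beautifulsoup4` package is installed and uses a small hard-coded HTML snippet in place of a downloaded page. The class names `product`, `name`, and `price` and the helper `parse_products` are hypothetical, chosen only for illustration.

```python
from bs4 import BeautifulSoup

# Hypothetical product-listing markup standing in for a downloaded page.
HTML = """
<html><body>
  <h1>Catalog</h1>
  <div class="product">
    <p class="name">Desk Lamp</p>
    <p class="price">$24.99</p>
  </div>
  <div class="product">
    <p class="name">Office Chair</p>
    <p class="price">$149.00</p>
  </div>
</body></html>
"""


def parse_products(html: str) -> list[dict]:
    """Return one dict per product <div>, using the hypothetical class names above."""
    soup = BeautifulSoup(html, "html.parser")  # Python's built-in parser, no extra dependency
    products = []
    for div in soup.find_all("div", class_="product"):
        products.append({
            "name": div.find("p", class_="name").get_text(strip=True),
            "price": div.find("p", class_="price").get_text(strip=True),
        })
    return products


if __name__ == "__main__":
    for product in parse_products(HTML):
        print(product)
```

On a real site, the selectors would have to be adapted to that page's actual markup.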

Essential Web Scraping Techniques

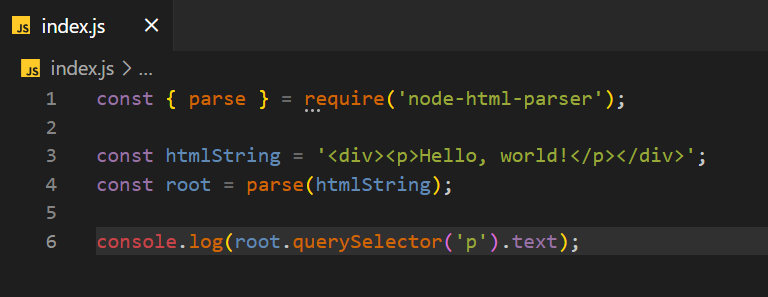

Web scraping techniques vary based on the data requirements and the structure of the target site. Among the most common methods, using libraries like BeautifulSoup for HTML content parsing stands out. BeautifulSoup provides a simple interface for navigating the parse tree of an HTML document, allowing users to extract data by finding tags or navigating through parent-child relationships of the HTML elements.
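Navigating parent-child relationships can be sketched as follows. This assumes `beautifulsoup4` is installed, and the article markup is a hypothetical sample. The example moves up the tree with `.parent`, sideways with `find_next_sibling()`, and down with `.children`.

```python
from bs4 import BeautifulSoup

# Hypothetical article markup used only for illustration.
HTML = """
<article>
  <h1>Release Notes</h1>
  <p>First paragraph.</p>
  <p>Second paragraph.</p>
  <footer>Posted by admin</footer>
</article>
"""

soup = BeautifulSoup(HTML, "html.parser")

heading = soup.find("h1")

# Up: the <h1>'s parent is the enclosing <article>.
article = heading.parent

# Sideways: the first <p> that follows the heading at the same level.
first_para = heading.find_next_sibling("p")

# Down: direct children of <article> that are <p> tags.
# Whitespace text nodes have .name == None, so they are skipped.
paragraphs = [child.get_text(strip=True) for child in article.children if child.name == "p"]

print(article.name, first_para.get_text(), paragraphs)
```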

Another powerful approach is Scrapy, a more comprehensive framework that handles not only HTML extraction but also managing requests and processing the scraped data. Typical Scrapy examples show how to build spiders that crawl websites and gather structured data automatically. BeautifulSoup offers fine-grained control over individual documents, while Scrapy suits broader crawling tasks; both contribute to automating web content collection.

Using BeautifulSoup to Automate HTML Extraction

BeautifulSoup is an invaluable library for automating HTML extraction thanks to its user-friendly methods and extensive documentation. With methods such as `find()` and `find_all()`, users can quickly pinpoint the exact data they need from a webpage. This is particularly useful for scraping article text, meta tags, or image URLs from various websites.
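The sketch below shows `find()` and `find_all()` pulling an article title, meta tags, and image URLs from one document. It assumes `beautifulsoup4` is installed and uses a hypothetical sample page.

```python
from bs4 import BeautifulSoup

# Hypothetical page used only for illustration.
HTML = """
<html>
<head>
  <title>Sample Article</title>
  <meta name="description" content="A short sample page.">
  <meta name="author" content="Jane Doe">
</head>
<body>
  <img src="/img/cover.png" alt="Cover">
  <img src="/img/chart.png" alt="Chart">
</body>
</html>
"""

soup = BeautifulSoup(HTML, "html.parser")

# find() returns the first matching tag (or None if nothing matches).
title = soup.find("title").get_text()

# find_all() returns every match; attrs={"name": True} keeps only <meta> tags
# that actually have a name attribute.
meta = {tag["name"]: tag["content"] for tag in soup.find_all("meta", attrs={"name": True})}

# Attribute values are read with dictionary-style access on each tag.
image_sources = [img["src"] for img in soup.find_all("img")]

print(title, meta, image_sources)
```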

Because BeautifulSoup is a Python library, the extraction logic can live in an ordinary Python script that a scheduler (such as cron) runs at regular intervals, collecting new data as it becomes available. This saves time and keeps the dataset current and comprehensive, which increases the value of the scraped content.
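One way to make repeated runs collect only new data is to keep a record of what earlier runs already saw. The sketch assumes `beautifulsoup4` is installed and that headlines are marked up as `<h2 class="headline">`; that markup and the helper names are hypothetical. Fetching the page is left out, so each scheduled run would download the HTML, call `collect_new_headlines`, and persist the state with `save_seen`.

```python
import json
from pathlib import Path

from bs4 import BeautifulSoup


def collect_new_headlines(html: str, seen: set) -> list:
    """Return headlines not seen in earlier runs, adding them to `seen`.

    Assumes (hypothetically) that each headline is an <h2 class="headline">.
    """
    soup = BeautifulSoup(html, "html.parser")
    new_items = []
    for tag in soup.find_all("h2", class_="headline"):
        text = tag.get_text(strip=True)
        if text not in seen:
            seen.add(text)
            new_items.append(text)
    return new_items


def load_seen(path: Path) -> set:
    """Load headlines recorded by previous runs (empty on the first run)."""
    return set(json.loads(path.read_text())) if path.exists() else set()


def save_seen(path: Path, seen: set) -> None:
    """Persist the headlines seen so far for the next scheduled run."""
    path.write_text(json.dumps(sorted(seen)))
```

Storing state in a JSON file is just the simplest option here. A database would serve the same purpose for larger datasets.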

Exploring Scrapy Examples for Effective Web Crawling

Scrapy is a framework designed specifically for web scraping, providing a robust structure for building spiders that navigate through websites. Scrapy examples show how to create spiders, set up item pipelines, and handle AJAX-driven pages. Because Scrapy does not execute JavaScript itself, AJAX content is usually reached by requesting the underlying data endpoints directly or by adding a browser-rendering plugin. This versatility supports a wide range of applications, from simple data collection to more integrated data analysis workflows.

A typical Scrapy project defines the structure of the data to be scraped (items), processes each item as it is scraped (item pipelines), and exports the results in formats such as JSON or CSV (feed exports). This flexibility makes Scrapy a popular choice among developers who need to extract and analyze large volumes of data from diverse web sources. Learning to use Scrapy effectively can significantly improve one’s ability to automate scraping tasks and manage large datasets.

Best Practices in HTML Extraction Methods

When it comes to HTML extraction methods, adhering to best practices ensures efficiency and reliability in web scraping endeavors. Firstly, it’s crucial to respect website terms of service and robots.txt files, which may dictate how their data can be scraped. Utilizing polite scraping techniques, such as pacing requests and avoiding excessive load on server resources, helps maintain a good relationship with website owners.
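Respecting robots.txt and pacing requests can be done with Python's standard library alone. The sketch below parses a hypothetical robots.txt from a string; against a live site you would call `set_url()` and `read()` instead. The user-agent name is also hypothetical. Disallowed URLs are skipped, and the generator pauses between requests using the site's `Crawl-delay` when one is given.

```python
import time
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; for a real site use rp.set_url(...) and rp.read().
ROBOTS_TXT = """
User-agent: *
Disallow: /private/
Crawl-delay: 2
""".splitlines()

USER_AGENT = "example-research-bot"  # hypothetical crawler name

rp = RobotFileParser()
rp.parse(ROBOTS_TXT)


def polite_urls(urls, default_delay=1.0, sleep=time.sleep):
    """Yield only URLs robots.txt allows, pausing between consecutive requests."""
    delay = rp.crawl_delay(USER_AGENT) or default_delay
    first = True
    for url in urls:
        if not rp.can_fetch(USER_AGENT, url):
            continue  # skip paths the site has asked crawlers to avoid
        if not first:
            sleep(delay)  # space out requests to limit server load
        first = False
        yield url
```

The `sleep` parameter exists only so the pacing can be checked without actually waiting.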

Furthermore, organizing the extracted data systematically is a best practice that aids in subsequent analysis or reporting. Tools like Pandas can be integrated with web scraping frameworks, allowing for structured data management and enabling users to perform further data manipulation and analysis post-extraction. By following these best practices, developers can enhance the sustainability and effectiveness of their web scraping projects.
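As a small sketch of handing scraped records to Pandas, the example below turns a list of dicts (hypothetical sample data, shaped like a scraper's output) into a DataFrame. It converts the text prices to numbers, computes a per-category average, and serialises the table as CSV. It assumes `pandas` is installed.

```python
import pandas as pd

# Records as a scraper might emit them (hypothetical sample data).
records = [
    {"name": "Desk Lamp", "price": "24.99", "category": "lighting"},
    {"name": "Floor Lamp", "price": "59.50", "category": "lighting"},
    {"name": "Office Chair", "price": "149.00", "category": "furniture"},
]

df = pd.DataFrame(records)
df["price"] = pd.to_numeric(df["price"])  # scraped text -> floats for analysis

# A simple post-extraction summary: average price per category.
summary = df.groupby("category")["price"].mean()

# With no path, to_csv returns the CSV text; pass a file path to write to disk instead.
csv_text = df.to_csv(index=False)

print(summary)
```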

Leveraging Regular Expressions in Scraping

Regular expressions (regex) serve as a powerful tool for parsing and extracting data during web scraping tasks. By defining specific patterns, users can search through HTML content for particular attributes or formats, such as email addresses, phone numbers, or URLs, significantly enhancing the data extraction process. This technique is especially useful when dealing with unstructured data where traditional HTML parsing may fall short.
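Here is a minimal sketch using Python's built-in `re` module on hypothetical sample text. The patterns are deliberately simple: real email and URL syntax is much broader, so they should be treated as illustrations rather than validators.

```python
import re

TEXT = """
Contact sales@example.com or support@example.org.
Docs live at https://example.com/docs and http://example.org/faq.
Call 555-123-4567 for help.
"""

# Simplified patterns for illustration only.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
URL_RE = re.compile(r"https?://\S+")
PHONE_RE = re.compile(r"\b\d{3}-\d{3}-\d{4}\b")  # US-style 555-123-4567 format only

emails = EMAIL_RE.findall(TEXT)
# \S+ also swallows sentence punctuation, so trim it from the end of each URL.
urls = [u.rstrip(".,;:)") for u in URL_RE.findall(TEXT)]
phones = PHONE_RE.findall(TEXT)

print(emails, urls, phones)
```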

By incorporating regex into a web scraping workflow, users can combine the convenience of tools like BeautifulSoup with precise filtering. For example, after BeautifulSoup has isolated the relevant element, a regex can refine its text down to just the information needed for analysis. This hybrid approach improves data accuracy and streamlines the extraction process.
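The hybrid approach can be sketched in two steps, assuming `beautifulsoup4` is installed and using hypothetical contact-block markup. BeautifulSoup first narrows the search to one element, and regex then refines the result. `find_all()` also accepts a compiled pattern as an attribute filter, which is used here to pick out `mailto:` links.

```python
import re

from bs4 import BeautifulSoup

# Hypothetical contact-block markup used only for illustration.
HTML = """
<div class="contact">
  <a href="mailto:press@example.com">Press</a>
  <a href="/about">About us</a>
  <p>Office hours: Mon-Fri. Phone: 555-987-6543</p>
</div>
"""

soup = BeautifulSoup(HTML, "html.parser")

# Step 1 (BeautifulSoup): narrow the search to the relevant element.
contact = soup.find("div", class_="contact")

# A compiled regex as an attribute filter keeps only hrefs starting with "mailto:".
mailto_links = [
    a["href"].split(":", 1)[1]
    for a in contact.find_all("a", href=re.compile(r"^mailto:"))
]

# Step 2 (regex): refine the element's plain text down to the phone number.
phones = re.findall(r"\b\d{3}-\d{3}-\d{4}\b", contact.get_text(" ", strip=True))

print(mailto_links, phones)
```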

The Importance of Data Cleaning After Extraction

Data cleaning is a crucial step that follows the extraction of web content. Raw data extracted from websites often contains noise, such as HTML tags, extraneous spaces, or incomplete entries, which can impede further analysis. By implementing effective data cleaning methods, such as removing duplicates and standardizing formats, users can enhance the quality and reliability of their datasets.

Utilizing libraries such as Pandas, one can automate the data cleaning process post-extraction to ensure that the data is fully usable for analysis or reporting. Proper data cleaning not only makes the dataset more manageable but also improves the accuracy of insights drawn from the data, allowing for more effective decision-making.
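The cleaning steps described above can be sketched with Pandas, assuming `pandas` is installed and using hypothetical raw rows with typical scraping noise. The pipeline drops incomplete entries, strips stray whitespace, standardises prices to numbers, and then removes duplicates. Duplicates are removed last because they only become visible once the values have been normalised.

```python
import pandas as pd

# Raw rows with typical scraping noise (hypothetical sample data).
raw = pd.DataFrame({
    "title": ["  Desk Lamp ", "Desk Lamp", "Office Chair", None],
    "price": ["$24.99", "$24.99", "$149.00", "$10.00"],
})

clean = (
    raw.dropna(subset=["title"])  # drop incomplete entries
       .assign(
           title=lambda d: d["title"].str.strip(),  # remove stray whitespace
           # "$24.99" -> 24.99: strip currency symbols and separators, then convert
           price=lambda d: pd.to_numeric(d["price"].str.replace(r"[$,]", "", regex=True)),
       )
       .drop_duplicates()  # duplicates only match after normalisation
       .reset_index(drop=True)
)

print(clean)
```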

Sustaining Ethical Scraping Practices

As the web scraping landscape evolves, maintaining ethical scraping practices becomes paramount. Respecting the rights of content creators and ensuring compliance with legal guidelines plays a significant role in the long-term sustainability of web scraping activities. By adhering to ethical standards, scrapers not only protect themselves from potential legal issues but also promote a culture of responsible data usage.

Moreover, engaging with website owners and seeking permission for scraping can lead to fruitful collaborations that benefit both parties. Sharing insights gathered from scraping with content creators can help improve their offerings and is a practice that fosters trust within the online community. Ultimately, ethical scraping practices contribute to a healthier web ecosystem.

Future Trends in Web Scraping Technologies

The future of web scraping technologies promises exciting developments as more sophisticated tools emerge. With the increasing complexity of web pages, driven by dynamic content and JavaScript-heavy frameworks, new technologies aimed at automating data extraction are likely to become standard. For instance, advancements in machine learning and AI could aid in making scraping processes more intelligent, enabling automated detection of important patterns and content.

In addition to AI, enhanced tools that focus on real-time scraping and cloud integration are expected to reshape how developers engage with data extraction. By leveraging these innovations, web scrapers can effectively tackle the challenges posed by modern websites while continuing to gather actionable insights. This continual evolution in scraping technology ensures that professionals can keep pace with the rapidly changing digital landscape.

Frequently Asked Questions

What is HTML content parsing and how does it relate to web scraping techniques?

HTML content parsing involves extracting specific information from web pages by analyzing the HTML structure. It is a critical component of web scraping techniques, allowing developers to collect data from elements like `<div>`, `<p>`, or `<h1>` tags. By automating the parsing process, users can efficiently harvest relevant content.

How can a BeautifulSoup tutorial help with HTML content parsing?

A BeautifulSoup tutorial provides essential guidelines on how to utilize this powerful Python library for HTML content parsing. With BeautifulSoup, users can easily navigate and extract data from HTML documents, making it ideal for automating web content extraction from various sites.

What are some practical Scrapy examples for HTML content parsing?

Practical Scrapy examples for HTML content parsing include spiders that navigate to specific web pages, identify the HTML elements of interest, and extract their content. Scrapy streamlines web scraping by managing requests and providing built-in CSS and XPath selectors for parsing HTML.

What are the best HTML extraction methods for web scraping?

The best HTML extraction methods for web scraping include using libraries such as BeautifulSoup and Scrapy, which offer robust tools for navigating HTML structures. These methods allow users to target specific tags and classes, effectively filtering and retrieving the desired content from web pages.

How does automation improve HTML content parsing efficiency?

Automation improves HTML content parsing efficiency by reducing manual effort and increasing data collection speed. Scrapy fetches and parses many pages concurrently out of the box. BeautifulSoup only parses documents, so concurrent fetching has to come from the surrounding code, for example an HTTP client combined with a thread pool. Either way, relevant information can be extracted quickly and consistently.
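The BeautifulSoup side of that answer can be sketched with the standard library's `ThreadPoolExecutor` and `urllib`, assuming `beautifulsoup4` is installed. The helper names are hypothetical. Pages are downloaded in parallel, since that part is network-bound, and then parsed one by one. `fetch_fn` can be swapped out, which keeps the sketch testable without network access. The pool is kept small so the target server is not overloaded.

```python
from concurrent.futures import ThreadPoolExecutor
from urllib.request import urlopen

from bs4 import BeautifulSoup


def fetch(url: str, timeout: float = 10.0) -> str:
    """Download a page with the standard library (no retries or rate limiting)."""
    with urlopen(url, timeout=timeout) as resp:
        return resp.read().decode("utf-8", errors="replace")


def page_title(html: str) -> str:
    """Return the text of the page's <title>, or an empty string if it has none."""
    soup = BeautifulSoup(html, "html.parser")
    return soup.title.get_text(strip=True) if soup.title else ""


def titles_for(urls, fetch_fn=fetch, max_workers=4):
    """Fetch pages concurrently, then parse each one; results keep input order."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        pages = list(pool.map(fetch_fn, urls))
    return [page_title(html) for html in pages]
```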

| Key Point | Description |
|---|---|
| HTML Content Parsing | A method to extract relevant sections from HTML documents based on their structure. |
| Common Tags | Includes `<div>`, `<p>`, and `<h1>` tags, which are often used to hold main content. |
| Exclusion Criteria | Focus on the main text body while excluding headers, footers, and unnecessary links. |
| Tools Used | Libraries like BeautifulSoup and Scrapy can streamline the content extraction process. |

Summary

HTML content parsing is a crucial technique for extracting meaningful information from web pages. By structuring the extraction process around specific HTML tags, such as `<div>`, `<p>`, and `<h1>`, one can efficiently gather the main content while avoiding the clutter of unnecessary sections like headers and footers. Tools like BeautifulSoup and Scrapy further improve the efficiency and effectiveness of this process, making HTML content parsing an invaluable skill for developers and data analysts.