Web scraping is a vital process in today’s data-driven world, enabling individuals and businesses to efficiently extract valuable information from websites. This technique has gained immense popularity as it simplifies the complexities of data extraction, making it an asset across diverse sectors such as e-commerce, finance, and technology. With a plethora of web scraping tools available, users can perform comprehensive data analysis and gather insights that drive decision-making. Ethical web scraping practices ensure compliance with legal guidelines and respect for website owners, safeguarding both the data and the scrapers’ reputation. As you delve into the world of web scraping techniques, you’ll discover its potential to transform raw data into actionable intelligence, ultimately elevating your competitive edge.

When we refer to the practice of collecting data from the internet, various terms come into play, including data harvesting and web data extraction. This indispensable method serves as a cornerstone for multiple industries that rely on accurate information for strategic planning and market insights. By leveraging advanced algorithms and scraping software, researchers and marketers can efficiently compile datasets that support their objectives. However, understanding the nuances of responsible data gathering, including legal boundaries and ethical guidelines, is essential for successful implementation. As we explore these concepts, we uncover the sophisticated landscape of information retrieval that empowers businesses to thrive in the digital age.

The Power of Web Scraping in Data Extraction

Web scraping has revolutionized the way we gather information in the digital age. By automating the data extraction process, users can efficiently capture large volumes of data from various online sources. This capability is invaluable for market research, enabling companies to track competitor prices, customer reviews, and trends across different platforms. Moreover, web scraping techniques can be tailored to suit specific needs, whether it be academic research or e-commerce analytics. The ability to aggregate and analyze this data allows businesses and researchers to make informed decisions based on real-time insights.

In sectors such as finance and academic research, web scraping plays a vital role in analyzing trends and developing strategies. Financial analysts may scrape data from multiple financial sites to assess market movements, while researchers can pull data from various publications to enhance their studies. The integration of advanced web scraping tools has simplified these processes, leading to faster and more accurate data acquisition. However, it is essential for users to understand the implications of data extraction, ensuring that they adhere to ethical guidelines and legal standards.

Navigating Ethical Web Scraping Practices

While web scraping offers considerable advantages, ethical considerations must guide its use. Ethical web scraping involves respecting a website’s terms of service as well as the directives in its robots.txt file, which indicate the paths that automated clients are asked not to crawl. Moreover, scraping responsibly helps maintain the integrity of the services being scraped and prevents server overloads. Practitioners should minimize their footprint on a website, for example by applying rate limits and spacing requests at intervals.
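The two habits mentioned above, checking robots.txt and spacing out requests, can be sketched with Python's standard library. This is a minimal illustration rather than a complete crawler: the robots.txt content, the `example-bot` user agent, and the `example.com` URLs are all hypothetical, and the rules are parsed from an inline string so the sketch runs without network access.

```python
import time
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; a real scraper would fetch it from the site.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 2
"""


def build_parser(robots_text):
    """Parse robots.txt rules from a string."""
    parser = RobotFileParser()
    parser.parse(robots_text.splitlines())
    return parser


def allowed_urls(parser, user_agent, urls):
    """Keep only the URLs that the rules allow this user agent to fetch."""
    return [url for url in urls if parser.can_fetch(user_agent, url)]


class RateLimiter:
    """Enforces a minimum interval between consecutive requests."""

    def __init__(self, min_interval, clock=time.monotonic, sleep=time.sleep):
        self._min_interval = min_interval
        self._clock = clock
        self._sleep = sleep
        self._last = None  # time of the previous request, if any

    def wait(self):
        """Block until at least min_interval has passed since the last call."""
        if self._last is not None:
            remaining = self._min_interval - (self._clock() - self._last)
            if remaining > 0:
                self._sleep(remaining)
        self._last = self._clock()
```

In use, a scraper would call `limiter.wait()` before each request and only request URLs returned by `allowed_urls`. Taking the interval from `parser.crawl_delay(user_agent)` when the site declares one is a reasonable default, though not every site sets it.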

Additionally, understanding the legal landscape surrounding web scraping is paramount. Depending on the jurisdiction, certain practices may be deemed illegal or unethical, leading to potential legal repercussions for violators. By conducting thorough research into local laws and standards, individuals and companies can ensure that their data scraping efforts remain above board. Ethical web scraping not only contributes to a better relationship between data gatherers and website owners but also reinforces the legitimacy of the gathered data.

Essential Web Scraping Techniques for Success

To effectively harness the power of web scraping, understanding various techniques is crucial. Some popular web scraping techniques include dynamic scraping, which involves accessing and extracting data from websites that utilize JavaScript for content rendering. Additionally, using APIs can be a preferred method of gathering structured data, as they often provide cleaner and more organized outputs than traditional scraping methods. Each technique has its benefits and challenges, making it important for users to choose the right approach based on their specific needs.
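To illustrate why API output is often easier to work with than scraped HTML, here is a small sketch that reads a JSON payload. The payload and its field names (`products`, `name`, `price`) are hypothetical and stand in for whatever a real API would document.

```python
import json

# Hypothetical JSON payload, shaped like what a product API might return.
API_RESPONSE = """
{
  "products": [
    {"name": "Widget", "price": 9.99},
    {"name": "Gadget", "price": 24.5}
  ]
}
"""


def products_from_api(payload):
    """Return (name, price) pairs from a JSON payload of products."""
    data = json.loads(payload)
    return [(item["name"], item["price"]) for item in data["products"]]
```

Because the fields arrive already typed and labeled, no markup parsing or text cleanup is needed, which is the main practical advantage over scraping the equivalent page.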

Moreover, the choice of scraping technologies can greatly impact the efficiency and accuracy of data extraction. Options range from lightweight parsing libraries like Beautiful Soup, to full crawling frameworks like Scrapy, to browser automation tools like Selenium that simulate user actions, so understanding the strengths and weaknesses of each is essential. Aspiring scrapers should therefore invest time in learning and experimenting with different tools and techniques, building a toolkit that can adapt to various data extraction scenarios.

Top Web Scraping Tools for Efficient Data Gathering

With the rising demand for data extraction, numerous web scraping tools have emerged, each catering to different user needs. Tools like Octoparse and ParseHub simplify the scraping process with user-friendly interfaces that do not require coding knowledge. These platforms enable users to create scraping routines by visually mapping out the data fields they wish to extract, making web scraping accessible to a broader audience.

For developers and advanced users, tools like the Scrapy framework and the Beautiful Soup parsing library offer greater control and customization. These open-source tools support complex scraping tasks, and their output can feed the data cleaning and transformation steps that prepare data for analysis. Choosing tools suited to the task at hand can significantly improve the data collection process, leading to more robust datasets and more meaningful insights.

Data Analysis: The Next Step After Web Scraping

After successfully gathering data through web scraping, the next critical phase is data analysis. This process involves interpreting the extracted information, identifying patterns, and deriving actionable insights. Techniques such as statistical analysis and data visualization play crucial roles in transforming raw data into coherent narratives that can inform decision-making. Users are encouraged to leverage analytics tools such as Pandas for data manipulation or Tableau and Power BI for visualization, further enhancing their analysis.

Effective data analysis doesn’t just stop at identifying trends; it also requires validating the accuracy and reliability of the gathered data. Implementing measures to ensure data integrity, such as cross-referencing sources and utilizing data validation techniques, can significantly improve the quality of insights derived from web scraping. Ultimately, combining web scraping with robust data analysis leads to powerful outcomes and enhanced competitive advantage.
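As one illustration of the validation step described above, the sketch below cleans a list of scraped records by normalizing prices, rejecting rows with missing or unparseable values, and dropping duplicates. The record layout (`name` and `price` keys) and the rejection rules are assumptions chosen for the example, and it uses only the standard library rather than Pandas.

```python
import math


def parse_price(text):
    """Convert a scraped price string like '$1,200.00' to a float, or None."""
    cleaned = text.strip().replace("$", "").replace(",", "")
    try:
        value = float(cleaned)
    except ValueError:
        return None
    # Reject NaN, infinities, and negative prices as invalid.
    if not math.isfinite(value) or value < 0:
        return None
    return value


def clean_records(records):
    """Split records into (valid, rejected), dropping duplicates by name."""
    seen = set()
    valid, rejected = [], []
    for record in records:
        name = (record.get("name") or "").strip()
        price = parse_price(record.get("price") or "")
        key = name.lower()  # case-insensitive duplicate check
        if not name or price is None or key in seen:
            rejected.append(record)
            continue
        seen.add(key)
        valid.append({"name": name, "price": price})
    return valid, rejected
```

Keeping the rejected rows, rather than silently discarding them, makes it possible to audit how much of the scraped data failed validation and why.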

Understanding the Legal Implications of Web Scraping

As the practice of web scraping becomes increasingly common, understanding its legal implications is critical for practitioners. Various countries have established regulations that affect how data can be collected and used. Familiarity with laws such as the Computer Fraud and Abuse Act (CFAA) in the U.S. and the General Data Protection Regulation (GDPR) in the European Union is crucial to ensure compliance and avoid legal disputes. A thorough review of a website’s terms of service and privacy policy can provide valuable insight into what is permissible.

Moreover, the ongoing debates around data ownership and intellectual property rights present further challenges in the realm of web scraping. This makes it essential for scrapers to stay updated on legal precedents that evolve alongside technology. By taking proactive steps to educate themselves on these issues, data practitioners can mitigate risks and align their web scraping activities with best legal practices.

Best Practices for Ethical and Effective Web Scraping

To maximize the benefits of web scraping while adhering to ethical standards, practitioners should follow established best practices. This includes developing a clear data scraping strategy that defines objectives, tools to be used, and the types of data to be collected. Additionally, maintaining transparency with website owners by contacting them when appropriate can help foster goodwill and prevent misunderstandings surrounding data collection.

Moreover, conducting regular audits of scraping processes and the data collected helps ensure compliance with ethical guidelines and legal requirements. Implementing appropriate measures for data security and privacy protection will not only guard against potential breaches but also enhance the credibility of the practice. By following these best practices, users can leverage web scraping effectively and responsibly.

The Future of Web Scraping in a Data-Driven World

As industries increasingly rely on data for decision-making, the future of web scraping looks promising. Emerging technologies such as AI and machine learning are set to enhance web scraping methods, making data collection more efficient and insightful. Integrating these technologies can lead to smarter scraping solutions that adapt to website changes and offer more advanced data analytics capabilities.

In addition, the expansion of open data initiatives and the availability of public datasets are likely to influence the landscape of web scraping. These developments could encourage more ethical practices and facilitate access to high-quality data for researchers and businesses alike. Overall, as web scraping continues to evolve, its role in supporting data-driven decisions across sectors will only become more significant.

Frequently Asked Questions

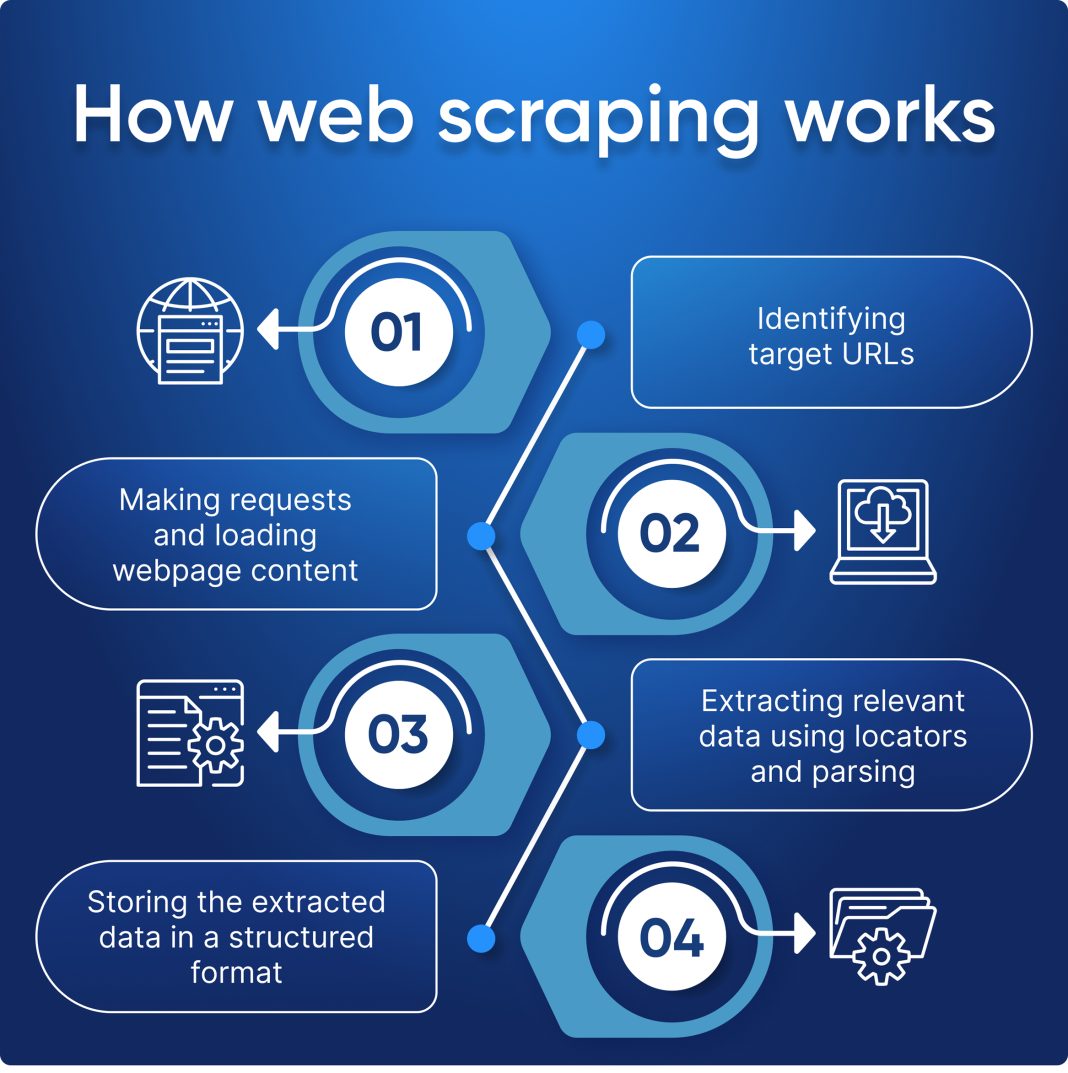

What is web scraping and how does it work?

Web scraping is the process of automatically extracting information from websites. It involves sending requests to web pages, retrieving the HTML content, and parsing it to collect specific data elements. This technique is widely used for data extraction in sectors like e-commerce, finance, and research.
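The parsing step can be sketched with Python's built-in `html.parser` module. To keep the example runnable offline, the request step is replaced by an inline HTML string; the markup and its class names (`product`, `name`, `price`) are hypothetical.

```python
from html.parser import HTMLParser

# Hypothetical page fragment standing in for HTML retrieved from a website.
SAMPLE_HTML = """
<ul>
  <li class="product"><span class="name">Widget</span> <span class="price">$9.99</span></li>
  <li class="product"><span class="name">Gadget</span> <span class="price">$24.50</span></li>
</ul>
"""


class ProductParser(HTMLParser):
    """Collects the name and price text of each product list item."""

    def __init__(self):
        super().__init__()
        self.products = []
        self._field = None  # field currently being read, if any

    def handle_starttag(self, tag, attrs):
        css_class = dict(attrs).get("class") or ""
        if tag == "li" and css_class == "product":
            self.products.append({})
        elif tag == "span" and css_class in ("name", "price") and self.products:
            self._field = css_class

    def handle_data(self, data):
        if self._field:
            self.products[-1][self._field] = data.strip()

    def handle_endtag(self, tag):
        if tag == "span":
            self._field = None


def extract_products(html):
    """Parse an HTML string and return a list of product dictionaries."""
    parser = ProductParser()
    parser.feed(html)
    parser.close()
    return parser.products
```

Libraries such as Beautiful Soup offer a more convenient interface for the same task; the standard-library version is used here only to keep the sketch free of dependencies.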

What are the most common web scraping techniques?

Common web scraping techniques include HTML parsing, DOM interaction in a rendered page, and API usage. HTML parsing extracts data from the page source, while working with the DOM through a browser automation tool allows interaction with elements of the webpage, including content rendered by JavaScript. APIs can also be leveraged to retrieve structured data in a more systematic manner.

What tools are available for ethical web scraping?

Several tools are available for ethical web scraping, including Beautiful Soup, Scrapy, and Puppeteer. These tools help automate data extraction while allowing users to follow best practices, such as respecting robots.txt files and ensuring compliance with legal guidelines.

How can web scraping assist in data analysis?

Web scraping can significantly aid data analysis by enabling users to gather large datasets from various online sources quickly. This data can be aggregated, cleaned, and analyzed to uncover insights, trends, and patterns, particularly in competitive fields like market research or academic investigations.

What are the ethical considerations for web scraping?

Ethical web scraping involves respecting the legal boundaries and guidelines laid out by website owners, such as adhering to the rules stated in robots.txt files. It is also important to avoid overwhelming servers with requests, and to use scraped data responsibly for research or analysis without infringing on copyrights.

Can web scraping be used for market research?

Yes, web scraping is an effective tool for market research. It allows businesses to gather valuable data on competitor pricing, customer reviews, trends, and product availability, helping them make informed decisions and shape their strategies.

Is web scraping legal?

The legality of web scraping varies by jurisdiction and depends on various factors, such as the nature of the data sourced and compliance with website terms of service. It’s important to conduct web scraping with an understanding of legal implications and ethical guidelines.

What are some best practices for conducting web scraping?

Some best practices for conducting web scraping include: respecting robots.txt files, minimizing request rates to avoid server overload, maintaining anonymity through IP rotation, and ensuring compliance with legal policies associated with the data being scraped.

| Key Point | Details |
|---|---|
| Definition | Web scraping is the automated process of extracting data from websites. |
| Purpose | It allows users to quickly gather data for analysis, research, or machine learning. |
| Applications | Commonly used in e-commerce, finance, and academic research for market analysis and dataset creation. |
| Ethical Considerations | Importance of respecting robots.txt, server load, and legal implications of scraping. |

Summary

Web scraping is a vital tool in today’s data-driven world, enabling users to gather significant amounts of information quickly and efficiently. As industries like e-commerce, finance, and academia harness the power of data, the demand for web scraping continues to grow. However, users must operate within ethical boundaries, ensuring that web scraping activities comply with legal standards and respect website protocols. Overall, responsible web scraping practices can yield valuable insights while maintaining integrity in data collection.