Web scraping is a technique for extracting information from websites automatically. As more information moves online, its uses have grown, from collecting data for research to tracking market trends through competitor analysis. Getting these benefits depends on choosing the right web scraping tools and following best practices, such as respecting robots.txt files and throttling requests so that scraping stays ethical. In this guide, we’ll explore what web scraping is, why it matters, and how you can implement effective strategies for gathering data.

Data extraction from online sources, also referred to as web mining or data scraping, has become an essential skill for researchers and marketers alike. It lets them compile insights from many platforms and build an overview of trends and topics of interest. The right scraping techniques speed up information retrieval and make data-driven decisions more accessible, while an understanding of core principles and best practices keeps the data reliable and the work compliant. The sections below cover the main methods and the tools that can streamline data gathering.

What is Web Scraping?

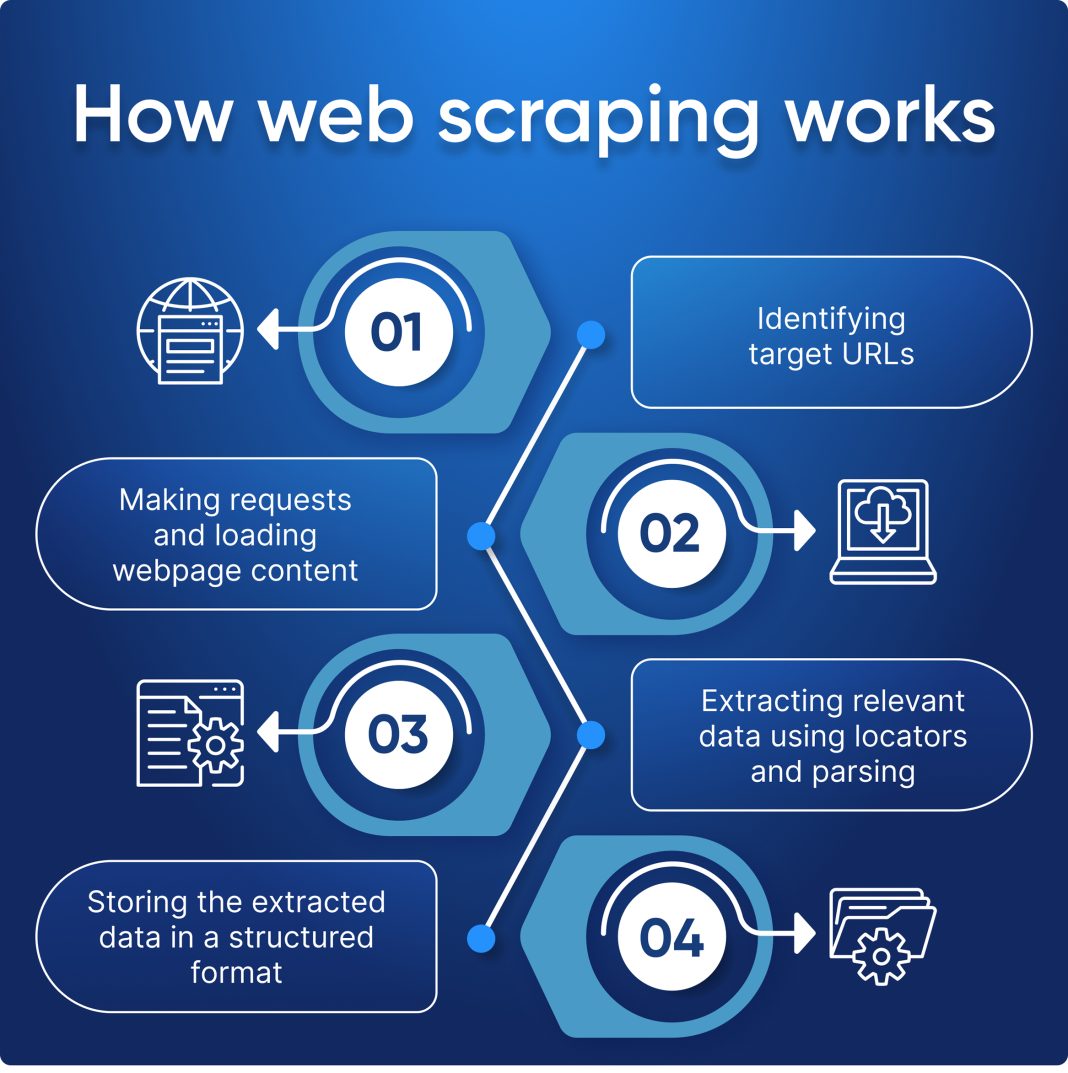

Web scraping is the process of systematically extracting information from websites. It involves using software or scripts to access web pages and retrieve data, which can then be processed and analyzed. This technique can serve various purposes, from gathering statistical data to simplifying the collection of product details for e-commerce platforms. Essentially, web scraping automates the tedious process of data entry, allowing users to efficiently accumulate information without having to do everything manually.
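The access-then-process flow described above can be sketched with nothing but Python's standard library. This is a minimal illustration, not a recommended production setup: the `LinkCollector` class, the `fetch_html` and `extract_links` helpers, the user agent string, and the sample HTML are all hypothetical names chosen for this example. The download function is defined but not called, so the demonstration runs without network access.

```python
from html.parser import HTMLParser
from urllib.request import Request, urlopen


class LinkCollector(HTMLParser):
    """Collects the href value of every <a> tag it encounters."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def extract_links(html):
    """Parse an HTML string and return the link targets it contains."""
    parser = LinkCollector()
    parser.feed(html)
    return parser.links


def fetch_html(url, user_agent="example-scraper/0.1"):
    """Download a page and decode it as text (hypothetical user agent)."""
    request = Request(url, headers={"User-Agent": user_agent})
    with urlopen(request, timeout=10) as response:
        charset = response.headers.get_content_charset() or "utf-8"
        return response.read().decode(charset, errors="replace")


# Hypothetical page content standing in for a downloaded document.
SAMPLE_HTML = (
    '<ul><li><a href="/item/1">One</a></li>'
    '<li><a href="/item/2">Two</a></li></ul>'
)
links = extract_links(SAMPLE_HTML)
print(links)
```

In a real run, the string passed to `extract_links` would come from `fetch_html(url)` rather than from a literal.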

Web scraping has become increasingly relevant as more businesses and individuals look to online information for insights and competitive advantage. Knowing what web scraping is and how it works lets users apply it to support innovation and decision-making. The technology also continues to evolve, with new tools and techniques that make scraping more efficient and easier to use.

Benefits of Web Scraping for Businesses

The benefits of web scraping are manifold, particularly for businesses looking to gain a competitive edge. One prominent advantage is the ability to gather and analyze large datasets from diverse online sources without the substantial time investment that would typically be required for manual data collection. This not only streamlines operations but also ensures that businesses can make informed decisions based on up-to-date information.

Another significant benefit is competitive analysis. By scraping data from competitors’ websites, businesses can monitor their strategies, product offerings, pricing models, and customer engagement techniques. This intelligence helps organizations adjust their operational strategies and stay ahead in the market. With proper web scraping practices, companies can also enhance their marketing efforts and tailor their products or services to meet the evolving demands of their target audience.

Best Practices for Web Scraping

When undertaking web scraping, following best practices is crucial to ensure efficiency and ethical compliance. A fundamental guideline is to always review the website’s robots.txt file before you start scraping. This file states which parts of the site the owner permits crawlers to access. Following it helps maintain goodwill with website owners and lowers the risk of legal disputes, although it does not replace reading the site’s terms of service.
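A robots.txt check can be automated with Python's standard `urllib.robotparser` module. The sketch below is only an illustration: the robots.txt content, the `example-scraper` user agent, and the `example.com` URLs are hypothetical, and the rules are parsed from a string so the example runs offline.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content. A real scraper would instead call
# parser.set_url("https://example.com/robots.txt") followed by parser.read().
SAMPLE_ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 5
"""


def build_parser(robots_txt):
    """Create a parser from the text of a robots.txt file."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


parser = build_parser(SAMPLE_ROBOTS_TXT)

# Ask whether our (hypothetical) user agent may fetch each URL.
allowed_public = parser.can_fetch(
    "example-scraper", "https://example.com/products/"
)
allowed_private = parser.can_fetch(
    "example-scraper", "https://example.com/private/report"
)

# Crawl-delay is optional; crawl_delay() returns None when it is absent.
delay = parser.crawl_delay("example-scraper")
print(allowed_public, allowed_private, delay)
```

Calling `can_fetch` before every request keeps the check close to the code that actually downloads pages.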

Additionally, throttling requests is an essential practice in web scraping. This means introducing delays between the requests sent to a server so that the site is not overwhelmed with traffic. Respecting server load and bandwidth makes it less likely that the scraper will be blocked and upholds ethical standards in data extraction.
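One simple way to throttle is a fixed pause between consecutive requests. The sketch below assumes a hypothetical `fetch_all` helper that takes the download function and the sleep function as parameters, so the demonstration can use stand-ins and run instantly without touching the network. The two-second delay is an arbitrary illustrative value, not a recommendation for any particular site.

```python
import time


def fetch_all(urls, fetch, delay_seconds=1.0, sleep=time.sleep):
    """Call fetch(url) for each URL, pausing between consecutive requests."""
    results = []
    for index, url in enumerate(urls):
        if index > 0:
            sleep(delay_seconds)  # wait before every request after the first
        results.append(fetch(url))
    return results


# Demonstration with stand-ins: the fake fetch returns a string and the
# fake sleep just records how long it was asked to wait.
pauses = []
pages = fetch_all(
    ["https://example.com/a", "https://example.com/b", "https://example.com/c"],
    fetch=lambda url: f"<html>{url}</html>",
    delay_seconds=2.0,
    sleep=pauses.append,
)
print(len(pages), pauses)
```

In real use, the default `sleep=time.sleep` applies and `fetch` would be a function that performs the HTTP request.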

Overview of Popular Web Scraping Tools

Several web scraping tools are available to cater to a wide range of user needs, from novices to seasoned professionals. BeautifulSoup, for instance, is a Python library favored for its simplicity and effectiveness in parsing HTML and XML documents. It allows users to easily navigate and search for data within complex documents, making it ideal for those looking to get started with web scraping.

On the other hand, Scrapy is a more advanced option widely used in the industry. This powerful open-source framework provides a comprehensive suite of features that support scraping, data extraction, and storage. For those who prefer a no-code solution, Octoparse offers a user-friendly interface that enables users to scrape data without requiring any programming knowledge, broadening the accessibility of web scraping to a larger audience.

Advanced Web Scraping Techniques

As web scraping has evolved, so have the techniques associated with it. Advanced web scraping techniques include using APIs, which can provide structured data without the need for scraping. Engaging directly with a website’s API can yield more reliable data compared to scraping public-facing web pages, particularly for complex datasets.
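To show why an API can be simpler than scraping rendered pages, here is a small sketch of building a request URL and reading a JSON response. The endpoint `https://api.example.com/v1/products`, its query parameters, and the response shape are all hypothetical; a real API documents its own. The response is a literal string so the example runs without a network call.

```python
import json
from urllib.parse import urlencode


def build_api_url(base_url, **params):
    """Append query parameters to an API endpoint."""
    return f"{base_url}?{urlencode(params)}"


def parse_products(payload):
    """Turn a JSON response body into a list of (name, price) tuples."""
    data = json.loads(payload)
    return [(item["name"], item["price"]) for item in data["products"]]


# Hypothetical endpoint and response shape, for illustration only.
url = build_api_url(
    "https://api.example.com/v1/products", category="books", page=1
)
sample_payload = (
    '{"products": [{"name": "Widget", "price": 9.99}, '
    '{"name": "Gadget", "price": 24.5}]}'
)
products = parse_products(sample_payload)
print(url)
print(products)
```

Because the response is already structured, no HTML parsing or selector maintenance is needed.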

Another technique is the use of headless browsers, which run a real browser engine without a visible window and can be driven by a script. This method is particularly useful for scraping dynamic sites that load content via JavaScript, because the browser renders the page as a user would see it, so content that is missing from the initial HTML can still be captured. Such techniques extend scraping to sites that plain HTTP requests cannot handle.

Ethical Considerations in Web Scraping

Ethical considerations are paramount in the practice of web scraping. While the technique opens numerous doors for data collection, it must be conducted with respect for both the law and the rights of website owners. Understanding copyright laws and adhering to data privacy regulations, such as GDPR, is crucial to avoid potential legal issues.

Moreover, ethical scraping involves maintaining transparency with users and stakeholders about how their data is being handled. Developing clear data usage policies and following ethical guidelines when utilizing scraped data not only fosters trust but also contributes to the responsible use of digital resources.

Impact of Web Scraping on Data-Driven Decisions

The impact of web scraping on organizations’ data-driven decisions is profound. By harnessing data sourced from the web, businesses gain critical insights into market trends, customer preferences, and competitive dynamics. This capability enhances their strategic planning, allowing for tailored approaches that cater to specific audience segments or niches.

Furthermore, the integration of web scraping into data analysis processes facilitates a more comprehensive understanding of complex data landscapes. By combining scraped data with internal metrics, organizations can create robust dashboards and reporting systems that drive informed decision-making and foster innovation across departments.

Web Scraping in Academic Research

Web scraping has carved a valuable niche in the realm of academic research. Researchers often rely on vast amounts of online data to inform their studies, and web scraping provides a viable means to collect this information efficiently. From analyzing social trends to gathering statistical data for experimental purposes, the technique expands the scope of research.

Moreover, web scraping allows researchers to access unstructured data that might otherwise be inaccessible. This capability enables scholars to develop new hypotheses and insights that contribute to a richer understanding of various fields. As academic institutions continue to embrace the digital age, the role of web scraping in research will likely expand further.

Future Trends in Web Scraping

Looking ahead, several trends are emerging in the field of web scraping that promise to reshape its landscape. One notable trend is the growing use of artificial intelligence and machine learning to enhance scraping capabilities. These technologies can automate data extraction processes, improve accuracy, and enable better handling of challenges like CAPTCHAs and complex web structures.

Additionally, the rise of ethical scraping practices will likely shape future developments. As awareness of data protection laws increases, businesses and individuals are expected to adopt more responsible and compliant scraping practices to align with global ethical standards. This shift will ensure that web scraping remains a legitimate and beneficial tool in the digital economy.

Frequently Asked Questions

What is web scraping and how does it work?

Web scraping is a technique used to extract data from websites automatically. It works by making HTTP requests to a web server, retrieving the HTML content of the page, and then parsing that content to extract the desired information using various web scraping tools like BeautifulSoup and Scrapy.

What are the benefits of web scraping for businesses?

The benefits of web scraping for businesses include data collection for market analysis, competitive intelligence to monitor rivals, and aggregating content for research purposes. This technique allows businesses to gather insights quickly and efficiently, leading to informed decision-making.

What are some popular web scraping tools available?

Some popular web scraping tools include BeautifulSoup for parsing HTML, Scrapy, which is a flexible web scraping framework, and Octoparse, a no-code solution ideal for beginners. Each of these tools offers different features tailored to various web scraping needs.

What are the best practices for web scraping?

The best practices for web scraping include respecting the robots.txt file of websites to ensure compliance, throttling requests to avoid overwhelming servers, and having a plan for data storage (e.g., CSV, JSON, or databases) to organize the scraped data effectively.
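As a rough illustration of the storage step, the sketch below serializes the same records to CSV and to JSON using Python's standard library. The records and the `to_csv` and `to_json` helper names are hypothetical, and the output is written to in-memory strings rather than files so the example has no side effects.

```python
import csv
import io
import json

# Hypothetical scraped records.
records = [
    {"name": "Widget", "price": 9.99},
    {"name": "Gadget", "price": 24.5},
]


def to_csv(rows, fieldnames):
    """Serialize a list of dicts as CSV text with a header row."""
    buffer = io.StringIO(newline="")
    writer = csv.DictWriter(buffer, fieldnames=fieldnames)
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


def to_json(rows):
    """Serialize a list of dicts as indented JSON text."""
    return json.dumps(rows, indent=2)


csv_text = to_csv(records, ["name", "price"])
json_text = to_json(records)
print(csv_text)
print(json_text)
```

CSV suits flat, tabular records, while JSON preserves nesting and value types; a database becomes worthwhile once the data needs querying or frequent updates.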

How can web scraping be used for research purposes?

Web scraping can be used for research by collecting data from multiple sources, allowing researchers to analyze trends and patterns. This method provides a large dataset that can enhance academic studies or market research without the need for manual data entry.

What are the legal considerations for web scraping?

Legal considerations for web scraping include respecting copyright law and each website’s terms of service. Check the robots.txt file and make sure your scraping does not violate any agreement you have accepted, as a violation could have legal consequences.

| Key Points | Details |
|---|---|
| Benefits of Web Scraping | Data Collection: gather large amounts of data efficiently; Competitive Analysis: monitor competitors by analyzing their content; Research: use data for academic and market research. |
| Tools for Web Scraping | BeautifulSoup: parses HTML/XML; Scrapy: a flexible web scraping framework; Octoparse: user-friendly no-code tool. |
| Best Practices for Web Scraping | Respect robots.txt: ensure scraping is allowed; Throttle Requests: avoid overwhelming servers; Data Storage: plan the storage format (CSV, JSON, database). |

Summary

Web scraping is a powerful technique for extracting valuable data from the internet. By using the right tools and following best practices, individuals and organizations can efficiently collect information for research, analysis, and business intelligence. It remains crucial to follow ethical guidelines and respect legal restrictions so that the data is used responsibly.