Web scraping is an essential technique for data extraction that enables users to gather vast amounts of information from the web quickly and efficiently. By employing various web scraping techniques, individuals and businesses can harness valuable insights that drive decision-making and strategic planning. Whether you’re following a BeautifulSoup tutorial or utilizing a Scrapy guide, the power of automated data collection can unlock numerous opportunities for analytics and market research. However, as the landscape of data collection evolves, it’s imperative to consider ethical web scraping practices to ensure compliance with legal standards. This introduction to web scraping outlines the importance of this skill in today’s data-driven world.

The process of gathering online data, often referred to as web harvesting or web scraping, plays a pivotal role in modern data analytics. This method of automated information retrieval allows users to capture and use data from many sources across the internet. With tools and frameworks like BeautifulSoup and Scrapy at one’s disposal, extracting relevant information becomes a streamlined process. Yet, for all the convenience of this approach, one must not overlook the principles of ethical data collection, so as to avoid potential privacy infringements. Understanding the nuances of web data extraction is crucial for anyone aiming to leverage the wealth of information available online.

Understanding Web Scraping Techniques

Web scraping techniques are essential for anyone looking to collect meaningful data from the plethora of information available online. There are various methods to perform web scraping, including the use of automated tools and libraries that simplify the process. Techniques like DOM parsing and regular expressions play vital roles in extracting data from HTML and XML documents. Understanding these foundational scraping techniques is crucial for effectively gathering data that can drive decisions and strategies.
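To make the contrast between regular expressions and parser-based extraction concrete, here is a minimal sketch using only Python's standard library. The sample HTML and the function names are invented for illustration, and the event-based `html.parser` module stands in for a full DOM parser.

```python
import re
from html.parser import HTMLParser

# Invented sample markup; note the second link uses single quotes.
HTML = """
<ul>
  <li><a href="/docs/intro">Intro</a></li>
  <li><a class="ext" href='https://example.com/faq'>FAQ</a></li>
</ul>
"""


def links_with_regex(html: str) -> list[str]:
    """Quick pattern match: only finds href values wrapped in double quotes."""
    return re.findall(r'href="([^"]+)"', html)


class LinkCollector(HTMLParser):
    """Collects href values from <a> tags as the parser walks the markup."""

    def __init__(self) -> None:
        super().__init__()
        self.links: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def links_with_parser(html: str) -> list[str]:
    collector = LinkCollector()
    collector.feed(html)
    return collector.links


print(links_with_regex(HTML))   # misses the single-quoted link
print(links_with_parser(HTML))  # finds both links
```

The regular expression is shorter but silently skips markup that deviates from the exact pattern, which is why parser-based extraction tends to be the safer default for HTML.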

Two of the most popular web scraping tools are BeautifulSoup and Scrapy. BeautifulSoup is a Python library that makes it easy to navigate, search, and modify HTML or XML documents. Scrapy, by contrast, is a full framework built for large-scale scraping projects: users write spiders that crawl websites and extract the desired information efficiently. Learning these tools not only enhances one’s data extraction skills but also opens doors to applications in fields such as marketing and research.

Getting Started with BeautifulSoup

If you’re new to web scraping, a BeautifulSoup tutorial is an excellent place to start. BeautifulSoup simplifies web scraping by giving Python developers tools to parse HTML and extract the relevant data. The library builds a parse tree from an HTML or XML document, which makes it easy to navigate the document and search it by tag and attribute. This makes it well suited to people who are not yet familiar with the complexities of web scraping.

To install BeautifulSoup, use pip, the Python package manager; the package is published under the name beautifulsoup4 (pip install beautifulsoup4). A simple script can demonstrate how to fetch a web page and extract specific data points, such as titles, paragraphs, or links. By the end of a well-structured BeautifulSoup tutorial, you’ll not only be able to scrape data but also understand how to handle the different HTML structures that can arise during your projects.
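As a rough illustration of extracting titles, paragraphs, and links, the sketch below parses an inline HTML string with BeautifulSoup. The sample markup and the `extract_page_data` helper are invented for this example. In a real script the HTML would come from an HTTP client such as requests rather than a hard-coded string.

```python
from bs4 import BeautifulSoup

# Invented sample page; a real scraper would download this HTML first.
HTML = """
<html>
  <head><title>Sample Catalog</title></head>
  <body>
    <h1>Featured Books</h1>
    <p class="intro">Hand-picked titles for this week.</p>
    <ul>
      <li><a href="/books/1">Clean Data</a></li>
      <li><a href="/books/2">Parsing in Practice</a></li>
    </ul>
  </body>
</html>
"""


def extract_page_data(html: str) -> dict:
    """Return the page title, paragraph texts, and (text, href) link pairs."""
    soup = BeautifulSoup(html, "html.parser")
    return {
        "title": soup.title.string if soup.title else None,
        "paragraphs": [p.get_text(strip=True) for p in soup.find_all("p")],
        "links": [
            (a.get_text(strip=True), a["href"])
            for a in soup.find_all("a", href=True)
        ],
    }


data = extract_page_data(HTML)
print(data["title"])
print(data["paragraphs"])
print(data["links"])
```

The `href=True` filter skips anchor tags without an `href` attribute, one small example of handling markup that does not match the ideal structure.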

Exploring the Scrapy Guide for Advanced Users

For those looking to dive deeper into web scraping, the Scrapy guide offers invaluable insights. Scrapy is a Python framework specifically designed for extracting data from websites quickly and efficiently. It allows users to write flexible and powerful spiders, which can crawl multiple pages, handle requests concurrently, and manage large datasets with ease. This framework is particularly useful for professional data scientists and analysts who require a comprehensive solution for web scraping.

In addition to its crawling capabilities, Scrapy’s built-in support for exporting data to structured formats such as JSON, CSV, and XML makes it a favorite among data engineers. A Scrapy guide typically covers topics like setting up a Scrapy project, creating items and pipelines, and handling web page responses. By following such a guide, advanced users can tailor their web scraping projects to specific challenges and scale their data extraction processes efficiently.

Ethical Web Scraping Practices

Ethical web scraping is a critical aspect that anyone engaging in data extraction should prioritize. While web scraping can provide significant benefits, it is essential to understand the legal and ethical implications associated with this practice. Websites often have terms of service that restrict how their data can be used or extracted. Respecting these guidelines not only keeps your scraping activities within legal limits but also maintains a good relationship with website owners.

Ethical web scraping practices include honoring robots.txt files, which tell crawlers which parts of a site they may and may not request. Rate-limiting your requests is equally important, so that your scraper does not overload a website’s server and degrade service for other users. By adhering to ethical standards, you’re not only safeguarding your reputation but also contributing to a more sustainable and respectful web scraping ecosystem.
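The two practices above, checking robots.txt and spacing out requests, can be sketched with Python's standard library. The robots.txt content, the bot name, and the helper functions are hypothetical. A real crawler would download robots.txt from the site root instead of using an inline string.

```python
import time
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, inlined so the example needs no network.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 2
"""

USER_AGENT = "example-research-bot"  # hypothetical bot name


def load_rules(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text into a rule set that can be queried."""
    rules = RobotFileParser()
    rules.parse(robots_txt.splitlines())
    return rules


def allowed_urls(rules: RobotFileParser, urls: list[str]) -> list[str]:
    """Keep only the URLs that robots.txt permits for our user agent."""
    return [url for url in urls if rules.can_fetch(USER_AGENT, url)]


def polite_fetch(rules, urls, fetch, default_delay=1.0):
    """Fetch permitted URLs one by one, sleeping between requests."""
    delay = rules.crawl_delay(USER_AGENT) or default_delay
    results = []
    for index, url in enumerate(allowed_urls(rules, urls)):
        if index > 0:
            time.sleep(delay)  # wait before every request after the first
        results.append(fetch(url))
    return results


rules = load_rules(ROBOTS_TXT)
candidates = [
    "https://example.com/products",
    "https://example.com/private/report",
]
print(allowed_urls(rules, candidates))
```

Here `fetch` is any callable that downloads a URL. Passing it in keeps the throttling logic separate from the choice of HTTP client.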

Advantages of Using Web Scraping for Data Extraction

Web scraping offers numerous advantages for data extraction that can significantly enhance business intelligence and research efforts. One of the primary benefits is the ability to gather large volumes of data from multiple sources quickly and accurately. By automating this process, organizations can save valuable time and resources, allowing them to focus on analyzing data rather than spending hours manually collecting it.

Furthermore, web scraping provides the flexibility to extract data from various formats and websites, which can be tailored to meet specific analytical needs. Whether it’s product pricing data for e-commerce, consumer reviews for market research, or content for competitive analysis, web scraping enables businesses to adapt and collect information that drives strategic decision-making. As a result, web scraping serves as a vital tool for staying competitive in today’s data-driven marketplace.

Navigating Limitations in Web Scraping

While web scraping presents incredible opportunities for data extraction, it also comes with certain limitations that practitioners need to navigate. Websites frequently change their layouts, which can break existing scraping scripts and require ongoing maintenance to ensure consistent data extraction. Additionally, some sites implement anti-scraping measures such as CAPTCHAs or IP blocking, posing challenges that can hinder effective scraping efforts.

Due to these limitations, it’s important for web scrapers to continually adapt and improve their techniques. Engaging with community forums, continuously learning about the latest scraping tools, and respecting website policies can minimize issues while maximizing efficiency. By addressing these challenges proactively, individuals and businesses can maintain an edge in their web scraping endeavors.
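One way to soften the impact of layout changes is to try several selectors in order instead of relying on a single one. The sketch below assumes BeautifulSoup, and the selectors and sample markup are invented for illustration. It returns `None` when nothing matches, so a broken page is reported rather than silently mis-parsed.

```python
from bs4 import BeautifulSoup

# Hypothetical selectors, ordered from the current layout to older or
# alternative layouts the site has used.
PRICE_SELECTORS = ["span.price", "div.product-price", "[data-price]"]


def extract_price(html: str):
    """Return the first price found by any known selector, else None."""
    soup = BeautifulSoup(html, "html.parser")
    for selector in PRICE_SELECTORS:
        node = soup.select_one(selector)
        if node is not None:
            # Prefer a machine-readable attribute when the layout has one.
            return node.get("data-price") or node.get_text(strip=True)
    return None


old_layout = '<div><span class="price">$10.00</span></div>'
new_layout = '<div class="offer" data-price="12.50">Now 12.50</div>'
unknown_layout = "<div><em>Call for pricing</em></div>"

print(extract_price(old_layout))
print(extract_price(new_layout))
print(extract_price(unknown_layout))
```

Logging the `None` case gives an early signal that a site has changed and the selector list needs maintenance.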

The Role of APIs vs Web Scraping

When collecting data from the web, many prefer to use APIs (Application Programming Interfaces) as a structured alternative to web scraping. APIs allow for easier access to data since they are specifically designed for sharing information in a controlled manner. Utilizing APIs can lead to cleaner, more organized data that is often presented in formats like JSON or XML, making data integration and analysis simpler.

However, web scraping remains a valuable method, particularly when APIs are not available. Many websites do not offer APIs, and when they do, the available data may be limited or outdated. In such cases, web scraping becomes a necessary skill for data enthusiasts and professionals seeking to extract relevant information. Understanding the balance between using APIs and web scraping is key for efficiently obtaining data.
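The difference in effort can be illustrated by reading the same product data from a JSON payload, as an API might return it, and from an HTML table. Both inputs and all names below are invented for this sketch, which uses only the standard library.

```python
import json
from html.parser import HTMLParser

# Invented API payload and an HTML page carrying the same information.
API_RESPONSE = (
    '{"products": [{"name": "Kettle", "price": 24.99},'
    ' {"name": "Toaster", "price": 31.5}]}'
)

HTML_PAGE = """
<table>
  <tr><td class="name">Kettle</td><td class="price">$24.99</td></tr>
  <tr><td class="name">Toaster</td><td class="price">$31.50</td></tr>
</table>
"""


def products_from_api(payload: str) -> list[dict]:
    """Structured data: one call turns the payload into Python objects."""
    return json.loads(payload)["products"]


class ProductTableParser(HTMLParser):
    """Rebuilds product records from table cells, keyed by CSS class."""

    def __init__(self) -> None:
        super().__init__()
        self.products: list[dict] = []
        self._field = None

    def handle_starttag(self, tag, attrs):
        if tag == "tr":
            self.products.append({})  # each row starts a new record
        elif tag == "td":
            self._field = dict(attrs).get("class")

    def handle_data(self, data):
        text = data.strip()
        if self._field and text:
            if self._field == "price":
                text = float(text.lstrip("$"))  # strip currency symbol
            self.products[-1][self._field] = text

    def handle_endtag(self, tag):
        if tag == "td":
            self._field = None


def products_from_html(html: str) -> list[dict]:
    parser = ProductTableParser()
    parser.feed(html)
    return parser.products


print(products_from_api(API_RESPONSE))
print(products_from_html(HTML_PAGE))
```

Both functions produce the same records, but the HTML route depends on markup details such as class names and currency formatting, which are exactly what tends to change over time.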

Common Challenges in Automated Data Extraction

Automated data extraction through web scraping comes with its own set of challenges that users must be prepared to face. One of the most common obstacles is handling dynamic pages, which load content asynchronously via JavaScript after the initial HTML arrives. A scraper that only downloads and parses that initial HTML never sees the dynamically generated content, so such pages usually call for a browser automation tool such as Selenium.
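The sketch below illustrates the dynamic-page problem with two invented HTML snippets: one as a plain HTTP request might return it, and one as the page might look after a browser has executed its JavaScript. It assumes BeautifulSoup. The markup, the script path, and the `extract_reviews` helper are hypothetical.

```python
from bs4 import BeautifulSoup

# Hypothetical HTML as returned by a plain HTTP request: the container
# is empty because a script fills it in later.
INITIAL_HTML = """
<html><body>
  <div id="reviews"></div>
  <script src="/static/load-reviews.js"></script>
</body></html>
"""

# Hypothetical DOM after a browser has run the script.
RENDERED_HTML = """
<html><body>
  <div id="reviews">
    <p class="review">Great value.</p>
    <p class="review">Arrived late.</p>
  </div>
</body></html>
"""


def extract_reviews(html: str) -> list[str]:
    """Return the text of every review paragraph inside #reviews."""
    soup = BeautifulSoup(html, "html.parser")
    return [p.get_text(strip=True) for p in soup.select("#reviews p.review")]


print(extract_reviews(INITIAL_HTML))   # nothing to extract yet
print(extract_reviews(RENDERED_HTML))  # reviews are present
```

The parsing code is identical in both cases. What changes is how the HTML is obtained, which is where a tool that drives a real browser comes in.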

Another significant challenge is ensuring data accuracy and quality. As websites frequently update their structures, scrapers must be vigilant to avoid harvesting incorrect or outdated information. Implementing robust validation processes can help ensure that the data extracted is reliable and useful. By being aware of these challenges, users can develop targeted strategies to enhance their web scraping effectiveness.
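A validation step can be as simple as checking each scraped record against a few rules before it enters a dataset. The field names and rules below are assumptions chosen for illustration. Real projects would adapt them to their own data.

```python
def validate_record(record: dict) -> list[str]:
    """Return a list of problems found in one scraped record."""
    problems = []

    name = record.get("name")
    if not isinstance(name, str) or not name.strip():
        problems.append("name is missing or empty")

    price = record.get("price")
    if isinstance(price, bool) or not isinstance(price, (int, float)):
        problems.append("price is not numeric")
    elif price <= 0:
        problems.append("price must be positive")

    url = record.get("url", "")
    if not isinstance(url, str) or not url.startswith(("http://", "https://")):
        problems.append("url is not absolute")

    return problems


def split_valid(records: list[dict]):
    """Separate clean records from rejected ones, keeping the reasons."""
    valid, rejected = [], []
    for record in records:
        problems = validate_record(record)
        if problems:
            rejected.append((record, problems))
        else:
            valid.append(record)
    return valid, rejected


# Invented sample output from a scraper: one clean record, one broken.
scraped = [
    {"name": "Kettle", "price": 24.99, "url": "https://example.com/kettle"},
    {"name": "", "price": "N/A", "url": "/toaster"},
]

valid, rejected = split_valid(scraped)
print(valid)
print(rejected)
```

A sudden rise in rejected records is often the first visible sign that a site's structure has changed.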

Future of Web Scraping in a Data-Driven World

The future of web scraping is bright, especially as the world becomes more data-driven. As businesses increasingly rely on data to inform their strategies, the demand for effective web scraping tools and techniques will continue to grow. Machine learning and artificial intelligence are poised to transform the web scraping landscape, allowing for more sophisticated data analysis and processing capabilities.

Moreover, ethical considerations in web scraping will likely lead to more robust regulatory frameworks that govern data extraction practices. Professionals in this field will need to stay informed on these changes and adapt accordingly to ensure compliance. The ongoing evolution of web scraping technology and methodology suggests that this area will remain a vital component of data collection efforts for the foreseeable future.

Frequently Asked Questions

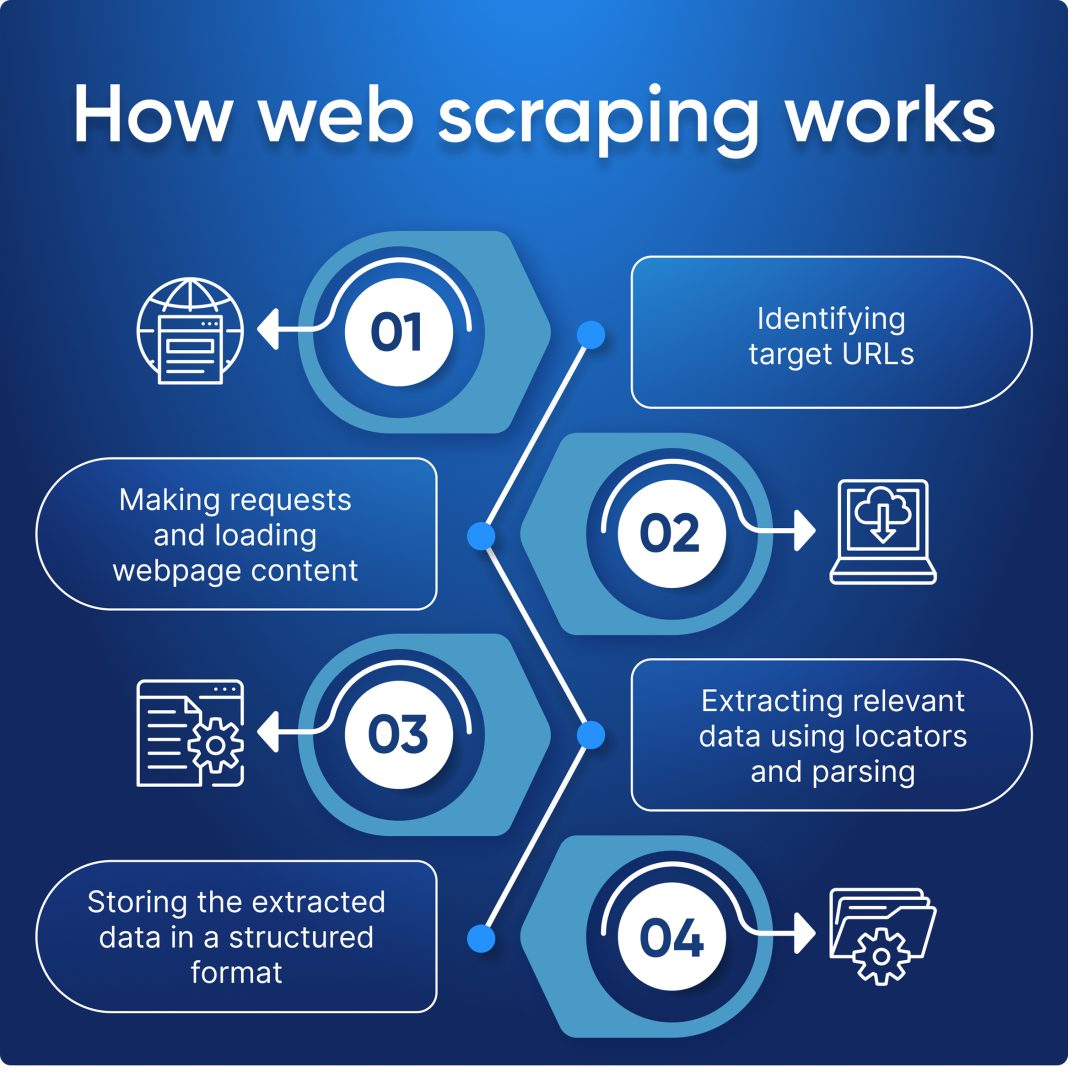

What is web scraping and how does it work?

Web scraping is the automated process of extracting data from websites. It involves fetching a webpage and then parsing the HTML content to retrieve desired information. Typically, web scraping utilizes programming languages like Python with libraries such as BeautifulSoup or Scrapy to facilitate data extraction.

What are some common web scraping techniques?

Common web scraping techniques include using HTML parsers like BeautifulSoup for data extraction, employing Scrapy for managing large scraping tasks, and utilizing browser automation tools like Selenium for dynamic content. Each technique has its strengths depending on the complexity of the website being scraped.

How do I get started with a BeautifulSoup tutorial for web scraping?

To start with a BeautifulSoup tutorial for web scraping, first install the beautifulsoup4 and requests packages with pip. Then follow tutorials that guide you through fetching web pages and parsing HTML documents to extract data. Numerous online resources provide step-by-step instructions for beginners.

What is a Scrapy guide and why should I use it for web scraping?

A Scrapy guide provides comprehensive instructions on utilizing the Scrapy framework for web scraping. Scrapy is ideal for large-scale web scraping projects because it efficiently handles requests and data extraction. Following a Scrapy guide can help you set up spiders and pipelines to automate and optimize your data extraction tasks.

Is ethical web scraping important and what should I consider?

Ethical web scraping is crucial as it ensures compliance with a website’s terms of service and respects user privacy. When engaging in web scraping, consider factors like copyright laws, request rates to avoid server overload, and the use of data in compliance with data protection regulations to maintain ethical standards.

How can businesses benefit from web scraping?

Businesses can benefit from web scraping by acquiring competitive intelligence, market research data, and insights into consumer trends. By extracting valuable information from various online sources, companies can enhance their products, optimize services, and make informed decisions based on real-time data analysis.

What are the advantages of web scraping compared to APIs?

Web scraping offers advantages over APIs, particularly when APIs are not available or limited. It allows for the extraction of data from virtually any website, providing access to unstructured data. Additionally, web scraping can yield a larger volume of data specific to user needs, which APIs may not provide.

What should I know about legal considerations in web scraping?

Legal considerations in web scraping include adhering to copyright laws, respecting the site’s terms of service, and complying with applicable data protection regulations. A website’s robots.txt file is a convention rather than a law, but honoring it is widely treated as a baseline for responsible scraping. It’s important to verify that your web scraping activities do not infringe on legal restrictions or the intellectual property rights of data owners.

Can web scraping skills lead to job opportunities?

Yes, web scraping skills can open up job opportunities in data analysis, data science, and research. Companies often seek individuals with the ability to extract and analyze data efficiently for decision-making and market strategies, making web scraping a valuable asset in the tech industry.

What tools are recommended for ethical web scraping?

Recommended tools for ethical web scraping include BeautifulSoup for HTML parsing, Scrapy for structured scraping tasks, and Selenium for dynamic web content. Additionally, tools like Octoparse offer user-friendly interfaces for those who prefer not to code.

| Key Points | Details |
|---|---|
| Definition | Web scraping is the process of extracting data from websites using automated tools. |
| Techniques | Common libraries for web scraping include BeautifulSoup and Scrapy in Python. |
| Uses | Used for collecting data for analytical purposes, market research, and competitive intelligence. |
| Legal Considerations | Important to adhere to legal and ethical standards, such as website terms of service. |
| Alternatives | APIs provide a more structured way to retrieve data when available. |
| Importance | A crucial skill for tech enthusiasts and professionals to leverage data on the web. |

Summary

Web scraping is an essential technique for anyone interested in extracting valuable insights from online data. By automating the process of collecting information from websites, businesses and individuals can effectively gather analytical data for market research and improve their offerings. While it presents immense opportunities for insight and intelligence, it is critical to remain aware of the legal boundaries associated with this practice. As web scraping evolves alongside technologies like APIs, it remains an indispensable skill for data-driven decision-making.