Web scraping is the automated collection of data from websites. With scraping tools and some programming, businesses and researchers can extract information for analysis, market research, and competitive intelligence. Because it gathers data so efficiently, web scraping is a valuable skill, but it also raises ethical questions, and understanding the legal framework is essential before you extract data from someone else's site. This overview walks through the ins and outs of this data-gathering method.

Web scraping, also known as web harvesting or data scraping, systematically retrieves data from online sources and organizes it for later use. It is closely related to web crawling, which discovers pages by following links; many projects combine the two. Automating collection this way is faster and less error-prone than gathering information by hand, and as the volume of published data has grown, so has the value of doing it well. Developers typically implement scrapers in languages such as Python and JavaScript, relying on libraries that handle much of the extraction work. The sections below cover the methods, tools, and ethical considerations involved.

Understanding the Basics of Web Scraping

Web scraping allows individuals and organizations to collect large amounts of data efficiently. A scraper fetches a web page and extracts the information needed for applications such as market research, data analysis, and competitor price tracking. Because scrapers are written in general-purpose languages like Python or R, they can be tailored to the goals of each data-extraction project.

At its core, web scraping turns loosely structured web pages, written for human readers, into structured data such as rows and fields. By automating this collection, businesses save time and resources, and by collecting and analyzing data regularly, they can make informed decisions quickly and respond to market changes.
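
As a minimal sketch of that transformation, the Python code below uses only the standard library's `html.parser` to turn a simple HTML table into a list of dictionaries. It assumes the table's first row holds the column names; the sample markup and the `table_to_records` helper are hypothetical, and real-world pages are usually messier than this.

```python
from html.parser import HTMLParser


class TableParser(HTMLParser):
    """Collects the text of each <th>/<td> cell, grouped by <tr> row."""

    def __init__(self):
        super().__init__()
        self.rows = []     # finished rows, each a list of cell strings
        self._row = None   # cells of the row being read, or None
        self._cell = None  # text fragments of the cell being read, or None

    def handle_starttag(self, tag, attrs):
        if tag == "tr":
            self._row = []
        elif tag in ("td", "th") and self._row is not None:
            self._cell = []

    def handle_endtag(self, tag):
        if tag in ("td", "th") and self._cell is not None and self._row is not None:
            self._row.append("".join(self._cell).strip())
            self._cell = None
        elif tag == "tr" and self._row is not None:
            self.rows.append(self._row)
            self._row = None

    def handle_data(self, data):
        if self._cell is not None:
            self._cell.append(data)


def table_to_records(html):
    """Use the first table row as keys and turn each later row into a dict."""
    parser = TableParser()
    parser.feed(html)
    parser.close()
    if not parser.rows:
        return []
    header, *body = parser.rows
    return [dict(zip(header, row)) for row in body]


# Hypothetical sample markup standing in for a fetched page.
SAMPLE = """
<table>
  <tr><th>Product</th><th>Price</th></tr>
  <tr><td>Widget</td><td>9.99</td></tr>
  <tr><td>Gadget</td><td>24.50</td></tr>
</table>
"""

print(table_to_records(SAMPLE))
# [{'Product': 'Widget', 'Price': '9.99'}, {'Product': 'Gadget', 'Price': '24.50'}]
```

The values stay strings; converting "9.99" to a number is a separate cleaning step.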

Popular Programming Languages for Web Scraping

Several programming languages stand out for web scraping because of their ease of use and mature tooling. Python is widely regarded as the most popular choice among hobbyists and professionals alike, largely because of libraries such as BeautifulSoup (HTML parsing), Scrapy (a full crawling framework), and Selenium (browser automation). These tools provide ready-made building blocks for fetching pages, parsing HTML, and storing results.

JavaScript also plays a significant role, especially for Single Page Applications (SPAs) that load content dynamically. Puppeteer, for example, controls a headless Chrome or Chromium browser, letting developers simulate user interactions and extract data after the page's scripts have run. R, meanwhile, is popular with statisticians and data analysts for its data-manipulation capabilities, and packages such as rvest make web data extraction straightforward.

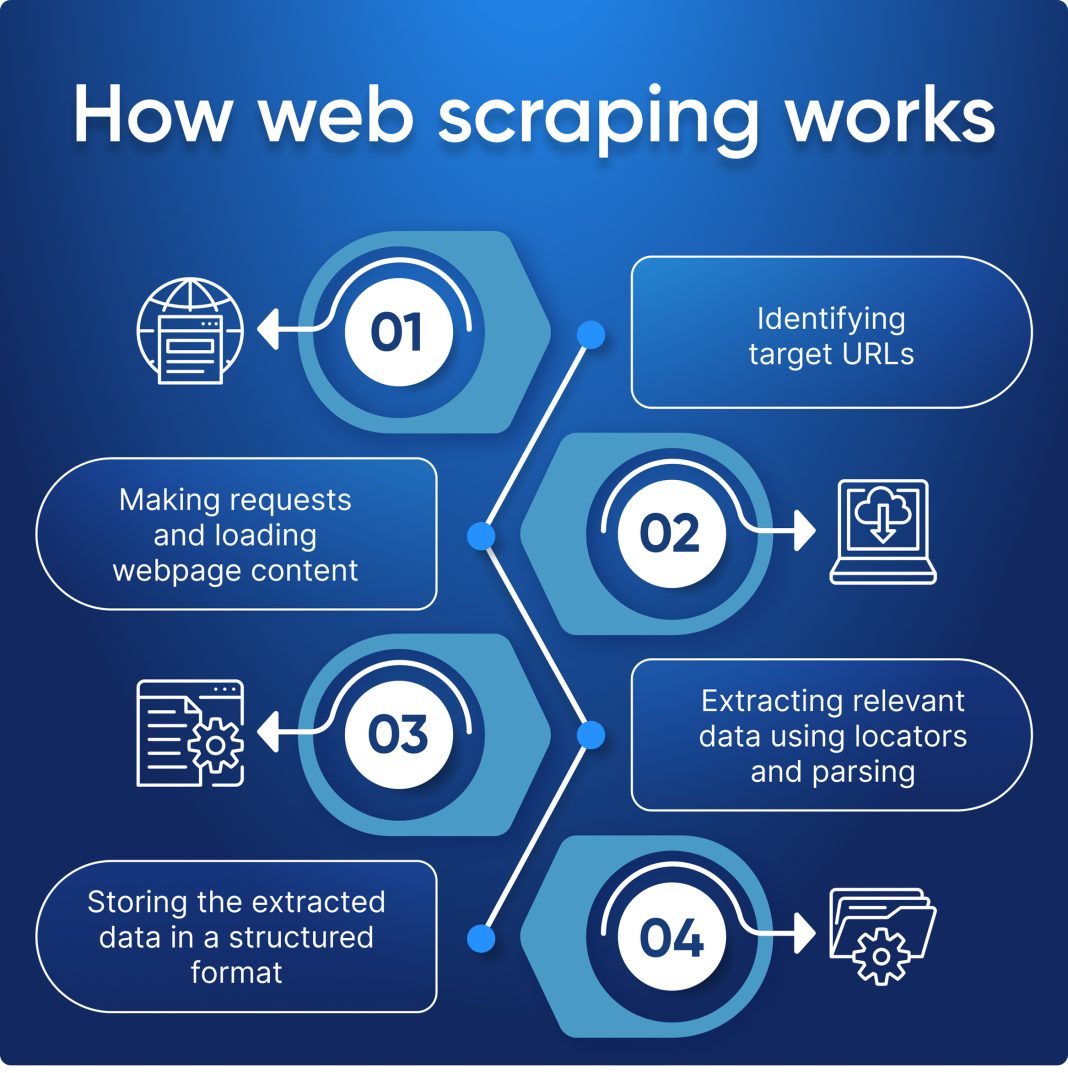

Essential Steps to Perform Web Scraping

Web scraping follows a sequence of steps that determine how accurate and reliable the collected data will be. First, identify the data source: the specific website or page that holds the information you need. Next, inspect the page with your browser's developer tools to understand its HTML structure; this tells you which elements your script must target.

With the layout understood, write a script in your chosen programming language to fetch the page content, then parse the HTML to extract the relevant fields. Finally, store the scraped data in a format suited to further analysis or processing, such as JSON, CSV, or a dedicated database.
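
The fetch, parse, and store steps can be sketched in Python with only the standard library. This is a minimal illustration, not a production scraper: it assumes the target data is the text of `<h2>` headings, and the User-Agent string, the example.com contact address, and the helper names are hypothetical. The demo at the bottom parses a literal HTML string instead of calling `fetch`, so it runs offline.

```python
import csv
import io
import json
import urllib.request
from html.parser import HTMLParser


def fetch(url, user_agent="example-scraper/0.1 (contact@example.com)"):
    """Step 3: download a page and decode it as text (UTF-8 assumed)."""
    request = urllib.request.Request(url, headers={"User-Agent": user_agent})
    with urllib.request.urlopen(request, timeout=10) as response:
        return response.read().decode("utf-8", errors="replace")


class HeadingParser(HTMLParser):
    """Step 4: collect the text inside every <h2> element."""

    def __init__(self):
        super().__init__()
        self.headings = []
        self._in_h2 = False

    def handle_starttag(self, tag, attrs):
        if tag == "h2":
            self._in_h2 = True
            self.headings.append("")

    def handle_endtag(self, tag):
        if tag == "h2":
            self._in_h2 = False

    def handle_data(self, data):
        if self._in_h2:
            self.headings[-1] += data


def parse(html):
    """Turn the page's <h2> headings into a list of records."""
    parser = HeadingParser()
    parser.feed(html)
    parser.close()
    return [{"title": text.strip()} for text in parser.headings]


def to_json(records):
    """Step 5a: serialize records as a JSON string."""
    return json.dumps(records, indent=2)


def to_csv(records, fieldnames):
    """Step 5b: serialize records as CSV text."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=fieldnames)
    writer.writeheader()
    writer.writerows(records)
    return buffer.getvalue()


# Offline demo: a literal string stands in for fetch("https://example.com/").
html = "<h2>First post</h2><p>Intro text</p><h2>Second post</h2>"
records = parse(html)
print(to_json(records))
print(to_csv(records, fieldnames=["title"]))
```

In a real run, the output of `to_json` or `to_csv` would be written to a file or loaded into a database rather than printed.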

Legal and Ethical Considerations in Web Scraping

Understanding the legal landscape is essential for staying compliant and avoiding repercussions. Ethical web scraping starts with the website's robots.txt file, which, under the Robots Exclusion Protocol, tells automated clients which paths they may and may not access. The file is a convention rather than an access-control mechanism, so honoring it is up to the scraper; doing so is a basic mark of responsible scraping.

Beyond robots.txt, review and comply with the website's terms of service regarding data usage. Responsible scrapers also avoid sending so many requests that they overload the server or get their IP address blocked. By following these guidelines and respecting copyright law, data collectors can gather valuable insights while keeping their operations above board.
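
Python's standard library includes `urllib.robotparser` for checking robots.txt rules before requesting a page. The sketch below parses an inline, hypothetical robots.txt so it runs offline; against a live site you would call `set_url()` and `read()` instead. The agent name `example-scraper` is also an assumption.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content. For a live site you would instead call
# parser.set_url("https://example.com/robots.txt") and then parser.read().
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 5
"""

AGENT = "example-scraper"  # hypothetical User-Agent name for our scraper

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

for url in ("https://example.com/products", "https://example.com/private/report"):
    verdict = "allowed" if parser.can_fetch(AGENT, url) else "disallowed"
    print(f"{url}: {verdict}")

# Crawl-delay for this agent in seconds, or None if the file sets none.
print("crawl delay:", parser.crawl_delay(AGENT))
```

A `False` from `can_fetch` means the site has asked crawlers to stay away from that path; it says nothing about what the terms of service allow, so both still need checking.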

Automation in Data Collection: Tools and Techniques

Much of web scraping's value comes from automation. Platforms such as Octoparse, ParseHub, and Apify automate data collection, and most offer visual interfaces that require little coding knowledge. They let users set up scraping tasks, schedule extractions, and manage large volumes of data.

For more customized or intricate jobs, writing code offers more control. Python's Scrapy framework, for example, lets developers build spiders that follow links across a site and send extracted items through pipelines for cleaning and storage. Scrapy does not execute JavaScript by itself, so JavaScript-rendered content requires an add-on such as scrapy-playwright. As with any automation, these scrapers should follow the ethical guidelines discussed in this article.
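
Scrapy handles link following, deduplication, and scheduling for you, but the core idea behind a spider fits in a few lines. The sketch below is plain Python, not Scrapy's API: a breadth-first crawl that stays on one host. The `fetch` callable and the in-memory `SITE` dictionary are hypothetical stand-ins for real HTTP requests, which in practice should pass through robots.txt and rate-limit checks.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urldefrag, urljoin, urlparse


class LinkParser(HTMLParser):
    """Collects href values from <a> tags."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(href)


def crawl(start_url, fetch, max_pages=50):
    """Breadth-first crawl that stays on start_url's host.

    `fetch` is any callable mapping a URL to HTML text (an assumption).
    Returns {url: html} for the visited pages, in visiting order.
    """
    host = urlparse(start_url).netloc
    queue = deque([start_url])
    seen = {start_url}
    pages = {}
    while queue and len(pages) < max_pages:
        url = queue.popleft()
        html = fetch(url)
        pages[url] = html
        parser = LinkParser()
        parser.feed(html)
        for href in parser.links:
            # Resolve relative links and drop #fragments so duplicates collapse.
            absolute, _ = urldefrag(urljoin(url, href))
            if urlparse(absolute).netloc == host and absolute not in seen:
                seen.add(absolute)
                queue.append(absolute)
    return pages


# A tiny in-memory "site" (hypothetical) stands in for real HTTP requests.
SITE = {
    "https://example.com/": '<a href="/a">A</a> <a href="https://other.org/">ext</a>',
    "https://example.com/a": '<a href="/b#top">B</a> <a href="/">home</a>',
    "https://example.com/b": "<p>leaf</p>",
}

visited = crawl("https://example.com/", lambda url: SITE.get(url, ""))
print(list(visited))
# ['https://example.com/', 'https://example.com/a', 'https://example.com/b']
```

Breadth-first order visits pages closest to the start URL first, and the `max_pages` cap keeps a misconfigured crawl from running away.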

The Impact of Web Scraping on Business Strategy

Web scraping can shape business strategy by giving organizations timely data for decision-making. By collecting and analyzing competitors' public data, businesses can identify market trends, understand pricing strategies, and adjust their own offerings to stay competitive. This data-driven approach lets them respond quickly to customer needs and market fluctuations.

Scraping also makes it possible to monitor customer sentiment across review sites and social platforms. Businesses can use these insights to adjust marketing strategies promptly and target campaigns at current consumer preferences. Integrated into regular operations, web scraping supports both strategic planning and execution by turning data into actionable insights.

Ethical Guidelines for Responsible Web Scraping

As web scraping grows in popularity, ethical guidelines matter more. Ethical web scraping means obtaining data without infringing on user rights or compromising the website's integrity. Key practices include being transparent about your intentions, for example by identifying your scraper with a descriptive User-Agent string that includes contact details, and ensuring that any data you use respects privacy laws and user agreements.

Ethics also covers the technical side of scraping. Avoid placing excessive load on a website's server, which can amount to an unintentional denial-of-service attack and degrade the site for other visitors. By prioritizing ethical scraping practices, organizations can build trust with both customers and website owners, fostering a collaborative rather than adversarial relationship.
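
One simple way to keep load low is to enforce a minimum interval between requests, for example the `Crawl-delay` a site's robots.txt specifies. The class below is a minimal sketch of that idea; the name `Throttle` and its parameters are our own, and the clock and sleep functions are injectable so the logic can be tested without real waiting.

```python
import time


class Throttle:
    """Enforces a minimum interval between consecutive requests.

    By default it uses time.monotonic and time.sleep; tests can pass
    fake versions of both.
    """

    def __init__(self, min_interval, clock=time.monotonic, sleep=time.sleep):
        self.min_interval = min_interval  # seconds between requests
        self.clock = clock
        self.sleep = sleep
        self._last = None  # time of the previous request, if any

    def wait(self):
        """Block until min_interval seconds have passed since the last call."""
        now = self.clock()
        if self._last is not None:
            remaining = self.min_interval - (now - self._last)
            if remaining > 0:
                self.sleep(remaining)
                now = self.clock()
        self._last = now
```

In a scraper loop you would call `throttle.wait()` immediately before each request to the same site. Keeping one throttle per host is a straightforward extension when crawling several sites at once.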

Best Practices for Efficient Data Extraction

Efficient data extraction starts with choosing tools that match the project's requirements and the level of coding expertise available. Established libraries and tools save development time and tend to produce cleaner data than hand-rolled solutions.

Scraping scripts should also balance speed with reliability. That means handling errors gracefully (for example, retrying transient network failures), rate-limiting requests so you neither overload the server nor get blocked, and parsing the extracted data consistently. Following these practices yields reliable data without compromising efficiency or compliance.
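
Error handling and rate limiting often meet in a retry helper: transient failures are retried after a growing delay instead of immediately hitting the server again. The function below is a minimal sketch using exponential backoff around any zero-argument callable; the set of status codes treated as retryable is an assumption you should adapt to the site you are working with.

```python
import time
import urllib.error

# Status codes that often indicate a temporary condition (an assumption).
RETRYABLE_STATUS = {429, 500, 502, 503, 504}


def with_retries(operation, attempts=4, base_delay=1.0, sleep=time.sleep):
    """Call operation(), retrying transient failures with exponential backoff.

    Waits base_delay, 2*base_delay, 4*base_delay, ... between attempts and
    re-raises the last error once all attempts are used up.
    """
    for attempt in range(attempts):
        try:
            return operation()
        except urllib.error.HTTPError as error:
            # HTTPError subclasses URLError, so it must be handled first.
            # Errors such as 404 will not fix themselves, so fail immediately.
            if error.code not in RETRYABLE_STATUS or attempt == attempts - 1:
                raise
        except (urllib.error.URLError, TimeoutError):
            # Network-level problems such as DNS failures or timeouts.
            if attempt == attempts - 1:
                raise
        sleep(base_delay * 2 ** attempt)
```

For example, `with_retries(lambda: fetch(url))` would wrap a hypothetical `fetch` helper. Client errors such as 404 are raised at once, since repeating the request will not change the answer.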

Leveraging Data for Enhanced Business Insights

Web scraping pays off when raw data becomes meaningful insight. Once data is collected and processed, organizations can apply analytics and visualization tools to identify patterns, forecast trends, and support strategic initiatives.

By monitoring sources continuously, businesses can also stay attuned to industry shifts, customer preferences, and emerging market opportunities. Used this way, scraped data strengthens competitive advantage and can guide product development and marketing strategies toward what customers expect.
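
Continuous monitoring usually means comparing each new scrape with the previous one. As a minimal sketch, assuming each run produces a hypothetical `{item_id: price}` dictionary, the function below reports which items appeared, disappeared, or changed price between two runs.

```python
def diff_snapshots(previous, current):
    """Compare two {item_id: price} snapshots taken on different runs.

    Returns a dict of items that were added, removed, or changed price.
    """
    added = {k: current[k] for k in current.keys() - previous.keys()}
    removed = {k: previous[k] for k in previous.keys() - current.keys()}
    changed = {
        k: (previous[k], current[k])
        for k in previous.keys() & current.keys()
        if previous[k] != current[k]
    }
    return {"added": added, "removed": removed, "changed": changed}


# Hypothetical prices from two scraping runs of a competitor's catalog.
monday = {"widget": 9.99, "gadget": 24.50, "gizmo": 5.00}
tuesday = {"widget": 8.99, "gadget": 24.50, "doohickey": 12.00}

print(diff_snapshots(monday, tuesday))
# {'added': {'doohickey': 12.0}, 'removed': {'gizmo': 5.0}, 'changed': {'widget': (9.99, 8.99)}}
```

Storing each run's snapshot (for example as JSON) lets the comparison run automatically after every scrape.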

Future Trends in Web Scraping Technology

As technology advances, so will the tools and methods used in web scraping. Artificial intelligence and machine learning are likely to play a growing role, for example by making parsers more robust to complex or frequently changing page structures. Such advances could let scrapers process larger datasets with less manual maintenance, improving the business insights drawn from them.

Moreover, the conversation around ethical web scraping will continue to evolve, prompting the development of more sophisticated compliance tools and practices. As regulations surrounding data privacy grow tighter, the integration of ethical guidelines with scraping technology will become increasingly necessary to ensure that organizations can adapt while still conducting effective data collection.

Frequently Asked Questions

What is web scraping and how is it used for data extraction?

Web scraping refers to the automated process of obtaining data from websites. It is widely used for data extraction across various applications such as market research, data analysis, and price monitoring. By fetching web pages and parsing their content, businesses and individuals can gather valuable information efficiently.

Which programming languages are best for web scraping?

Python is the most popular programming language for web scraping thanks to its simplicity and powerful libraries like BeautifulSoup and Scrapy. JavaScript tools such as Puppeteer are well suited to scraping Single Page Applications (SPAs), and R is also effective, especially when the scraped data feeds directly into analysis with the rvest package.

What are the main steps in performing web scraping?

To successfully perform web scraping, follow these key steps: 1) Identify your data source, 2) Inspect the webpage using browser tools, 3) Fetch the page content with your chosen programming language, 4) Parse the HTML to extract the relevant data, and 5) Store the data in an appropriate format like JSON or CSV for future analysis.

What ethical considerations should I keep in mind while web scraping?

When engaging in web scraping, it’s crucial to adhere to ethical considerations such as respecting the site’s `robots.txt` file, complying with the terms of service, and avoiding excessive requests that could overload servers. Ethical web scraping ensures responsible data extraction without compromising website performance.

How do I choose the right web scraping tools?

Selecting the right web scraping tools depends on your project requirements and technical skill level. Popular web scraping tools include Python libraries like BeautifulSoup and Scrapy, JavaScript tools like Puppeteer, and browser extensions like Web Scraper. Consider factors like ease of use, community support, and feature set when choosing your tools.

Is automated data collection legal and safe?

Automated data collection via web scraping can be legal, but legality depends on the jurisdiction, the kind of data collected, and how it is used. Always check the site's `robots.txt` file and terms of service as a first step toward compliance. Ethical web scraping practices minimize legal risks and foster good relationships with website owners.

Can I perform web scraping on any website?

While you can technically scrape data from many websites, check each site's `robots.txt` file and terms of service to see what the site permits. Some sites explicitly prohibit scraping, while others allow it under certain conditions.

What tools can help me write better web scrapers?

Useful libraries include BeautifulSoup and Scrapy for Python, Puppeteer for JavaScript, and rvest for R. Full frameworks such as Scrapy also provide built-in features for data handling and export that streamline larger projects.

| Key Points | Details |
|---|---|
| Understanding Web Scraping | Automated process of extracting data from websites for applications such as data analysis, price monitoring, and market research. |
| Popular Programming Languages | 1. Python: easy to use, with libraries like BeautifulSoup, Scrapy, and Selenium. 2. JavaScript: useful for SPAs, with tools like Puppeteer. 3. R: suited to analysis, with packages like rvest. |
| Steps to Perform Web Scraping | 1. Identify the data source. 2. Inspect the page structure using developer tools. 3. Fetch the page content using a script. 4. Parse the HTML to extract data. 5. Store the data in formats like JSON, CSV, or a database. |
| Legal and Ethical Considerations | 1. Respect `robots.txt` files. 2. Follow terms of service for data usage. 3. Avoid excessive server requests. |

Summary

Web scraping is a powerful technique for data extraction that automates the gathering of information from online sources. By choosing suitable languages and tools, following best practices, and respecting legal and ethical boundaries, anyone can use web scraping to gain valuable insights and make better-informed decisions.