Web scraping is the automated extraction of data from websites, used by businesses and individuals alike. It lets users gather information efficiently for tasks such as market research and competitive analysis. As data-driven decision-making grows in importance, understanding what web scraping entails becomes essential. Scraping techniques and tools turn unstructured web content into structured data, which makes extracting insight more manageable. It is important, however, to follow ethical web scraping practices by complying with applicable rules and respecting website policies.

Collecting information from the internet, also called data harvesting or web data extraction, has gained significant traction in recent years. It enables organizations to compile large amounts of online data for analysis and informed decision-making. Businesses choose the methods and scraping tools that suit their specific needs. Responsible execution matters: an ethical approach to data gathering includes respecting site guidelines and avoiding unnecessary strain on web services. Understanding the nuances of information collection can strengthen a company’s strategy.

What is Web Scraping?

Web scraping is the automated collection of data from websites. It lets users extract large volumes of data that are otherwise available only in human-readable form. Using programming languages and libraries, businesses and developers can automate the extraction process, transforming unstructured web content into structured data that can be analyzed and used for strategic decision-making.

The significance of web scraping lies in its ability to offer insights into consumer behavior, market trends, and competitive analysis. For instance, companies can gather price information, customer reviews, and inventory details across multiple e-commerce platforms to refine their own strategies. Consequently, understanding what web scraping is unlocks a multitude of opportunities for businesses looking to leverage data-driven strategies.

Web Scraping Techniques

Several techniques can be used in web scraping, depending on the project and its objectives. Among the most popular are HTML parsing, DOM parsing, and XPath queries. Each has its own advantages and is chosen based on the complexity of the website’s structure and the requirements of the extraction task. For example, HTML parsing, often performed with libraries such as BeautifulSoup or lxml, extracts data efficiently from simpler, mostly static pages, while DOM parsing, which works on the document tree as a browser builds it, is better suited to pages with deeply nested or script-generated structure.
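
As a rough illustration of HTML parsing, the sketch below pulls product names and prices out of a small page fragment. It uses Python's built-in `html.parser` module rather than BeautifulSoup or lxml so that it runs without extra installs. The markup, the class names `product`, `name`, and `price`, and the helper `parse_products` are all assumptions made up for this example.

```python
from html.parser import HTMLParser

# Hypothetical page fragment; real markup differs from site to site.
SAMPLE_HTML = """
<ul>
  <li class="product"><span class="name">Kettle</span><span class="price">24.99</span></li>
  <li class="product"><span class="name">Toaster</span><span class="price">31.50</span></li>
</ul>
"""


class ProductParser(HTMLParser):
    """Collects the text of <span class="name"> and <span class="price"> tags."""

    def __init__(self):
        super().__init__()
        self.products = []   # finished rows, one dict per product
        self._row = {}       # row currently being filled
        self._field = None   # field whose text is being read, if any

    def handle_starttag(self, tag, attrs):
        css_class = dict(attrs).get("class")
        if tag == "li" and css_class == "product":
            self._row = {}
        elif tag == "span" and css_class in ("name", "price"):
            self._field = css_class

    def handle_data(self, data):
        # Only keep text that appears inside a span we care about.
        if self._field:
            self._row[self._field] = data.strip()

    def handle_endtag(self, tag):
        if tag == "span":
            self._field = None
        elif tag == "li" and self._row:
            self.products.append(self._row)
            self._row = {}


def parse_products(html):
    """Return a list of {"name": ..., "price": ...} dicts found in the HTML."""
    parser = ProductParser()
    parser.feed(html)
    return parser.products


if __name__ == "__main__":
    for product in parse_products(SAMPLE_HTML):
        print(product)
```

With BeautifulSoup or lxml the same extraction is usually shorter, since those libraries offer selector-style lookups; the event-driven approach here is simply the dependency-free variant.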

Developers may also opt for headless browsers or API-based scraping, particularly when dealing with JavaScript-heavy websites. A headless browser simulates a full browser environment, enabling a scraper to render dynamic pages, such as those of social media platforms, before extracting data. API-based scraping instead requests structured data, often JSON, directly from the endpoints a site exposes. Understanding the various web scraping techniques is crucial for selecting the right approach for efficient and effective data collection.
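
When a site returns its data through an API, the scraper's job is mostly to reshape the response. The sketch below assumes a JSON body with an `items` list whose entries carry `title` and a nested `price` object. That shape, the sample payload, and the helper `extract_items` are hypothetical; a real endpoint will have its own structure and access rules.

```python
import json

# Hypothetical response body from a JSON endpoint.
SAMPLE_RESPONSE = """
{
  "items": [
    {"title": "Kettle", "price": {"amount": 24.99, "currency": "USD"}},
    {"title": "Toaster", "price": {"amount": 31.5, "currency": "USD"}}
  ]
}
"""


def extract_items(body):
    """Flatten the assumed payload into a list of simple row dicts."""
    payload = json.loads(body)
    rows = []
    for item in payload.get("items", []):
        price = item.get("price", {})
        rows.append(
            {
                "title": item.get("title"),
                "amount": price.get("amount"),
                "currency": price.get("currency"),
            }
        )
    return rows


if __name__ == "__main__":
    for row in extract_items(SAMPLE_RESPONSE):
        print(row)
```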

Ethical Web Scraping Practices

While web scraping can unlock valuable data, it is important to follow ethical web scraping practices to maintain legal compliance and foster goodwill with website owners. Key considerations include respecting the ‘robots.txt’ file, which tells automated clients which paths they may and may not access. Responsible scrapers should also avoid overwhelming a website’s server with excessive requests, which can disrupt the service and may get the scraper blocked from the site.
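
A scraper can check ‘robots.txt’ rules programmatically before requesting a page. The sketch below uses Python's standard `urllib.robotparser` module on an inline sample file so that it runs without network access. The rules, the `example-bot` user agent, and the `example.com` URLs are made up for illustration.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; a real scraper would download the site's own file.
SAMPLE_ROBOTS = """\
User-agent: *
Disallow: /private/
Crawl-delay: 5
"""


def build_parser(robots_text):
    """Parse robots.txt text into a RobotFileParser."""
    parser = RobotFileParser()
    parser.parse(robots_text.splitlines())
    return parser


def is_allowed(parser, user_agent, url):
    """Return True if the parsed rules allow user_agent to fetch url."""
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    rules = build_parser(SAMPLE_ROBOTS)
    for url in (
        "https://example.com/products",
        "https://example.com/private/report",
    ):
        print(url, is_allowed(rules, "example-bot", url))
    # Requested delay between requests, if the file declares one.
    print("crawl delay:", rules.crawl_delay("example-bot"))
```

Against a live site, `RobotFileParser.set_url()` followed by `read()` fetches the file directly instead of parsing a string.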

It is also essential to be aware of the laws governing data usage in different jurisdictions. Some websites permit or even encourage reuse of their data, while others explicitly prohibit scraping, so developers should familiarize themselves with each site’s terms of service and with copyright law. By adhering to ethical web scraping practices, businesses can ensure sustainable and responsible data extraction.

Essential Web Scraping Tools

The right tools can make data extraction considerably more efficient. Popular web scraping tools such as Octoparse, ParseHub, and Import.io provide user-friendly interfaces that let users scrape data without extensive programming knowledge. These tools often include features like point-and-click data extraction, scheduling, and cloud storage options.

For more technically inclined users, programming libraries such as BeautifulSoup, Scrapy, and Selenium offer advanced functionalities for custom web scraping solutions. These libraries provide developers with the flexibility to create robust scraping scripts tailored to specific needs, including handling complex site structures and managing sessions. Learning about these essential web scraping tools can empower businesses to leverage data in a competitive marketplace.

Data Extraction for Business Intelligence

Data extraction through web scraping is invaluable for business intelligence, providing insights that drive strategic decision-making. By collecting vast amounts of external data, organizations can analyze trends in customer preferences, monitor competitors’ pricing strategies, and identify emerging market demands. This rich data set enables companies to make informed decisions based on empirical evidence rather than conjecture.

Furthermore, integrating scraped data into business intelligence tools can enhance visualization and reporting capabilities, allowing for more sophisticated analyses. By harnessing the power of data extraction and analysis, businesses can stay agile and responsive to market changes, thereby maintaining a competitive edge in their respective industries. This transformation of raw data into actionable insights emphasizes the importance of web scraping in modern business practices.

Frequently Asked Questions

What is web scraping and how is it used?

Web scraping is the automated process of extracting data from websites. It is widely used for gathering information for analysis, market research, and competitive intelligence, allowing businesses to make data-driven decisions.

What are some common web scraping techniques?

Common web scraping techniques include HTML parsing, DOM parsing, XPath queries, and API integration. Using libraries like BeautifulSoup or Scrapy, users can efficiently parse HTML content to extract relevant data.

Is ethical web scraping possible?

Yes, ethical web scraping is achievable by following guidelines such as respecting ‘robots.txt’ files, avoiding excessive requests, and understanding the legal implications of data extraction. Responsible scraping practices are crucial for staying compliant and keeping the goodwill of website owners.

What tools are best for web scraping?

Popular web scraping tools include the libraries BeautifulSoup, Scrapy, and Selenium, as well as no-code tools such as Octoparse. They cover a range of functionality, from simple HTML parsing to automated browsing and data extraction.

How do I ensure my web scraping practices are legal?

To ensure legality in web scraping, make sure to review a website’s terms of service, comply with ‘robots.txt’ rules, and avoid scraping sensitive or copyrighted data. Understanding copyright laws and data privacy regulations is essential.

What types of data can I extract through web scraping?

Web scraping can be used to extract various types of data, including product details, prices, user reviews, and market trends from e-commerce websites, social media platforms, and public databases.

Can web scraping impact website performance?

Yes, if not conducted responsibly, web scraping can overload a website’s server, leading to performance issues. It’s essential to implement rate limiting and respect request limits to avoid putting undue strain on a site.
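
One simple form of rate limiting is to enforce a minimum gap between consecutive requests. The sketch below is one possible approach, not a standard API: the `RateLimiter` class, its `min_interval` parameter, and the two-second value are assumptions chosen for illustration. The clock and sleep functions can be swapped out, which makes the limiter easy to test.

```python
import time


class RateLimiter:
    """Blocks so that successive wait() calls are at least min_interval seconds apart."""

    def __init__(self, min_interval, clock=time.monotonic, sleep=time.sleep):
        self.min_interval = min_interval
        self._clock = clock
        self._sleep = sleep
        self._last = None  # time of the previous call, None before the first

    def wait(self):
        now = self._clock()
        if self._last is not None:
            remaining = self.min_interval - (now - self._last)
            if remaining > 0:
                self._sleep(remaining)
                now = self._clock()
        self._last = now


if __name__ == "__main__":
    limiter = RateLimiter(min_interval=2.0)
    for page in range(3):
        limiter.wait()  # call this before each request
        print("would fetch page", page)
```

If a site's ‘robots.txt’ declares a crawl delay, that value is a sensible lower bound for `min_interval`.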

What programming languages are commonly used for web scraping?

Python is the most popular programming language for web scraping, largely because of libraries like BeautifulSoup and Scrapy. Other languages, such as JavaScript and Ruby, are also used.

How can I store data after scraping it from the web?

Scraped data can be stored in file formats like CSV or JSON, or written directly to a database, either a SQL database (such as SQLite, MySQL, or PostgreSQL) or a document store such as MongoDB, depending on the needs and scale of the project.
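
The sketch below shows the same rows written as CSV, as JSON, and into a SQLite table, using only Python's standard library. The sample rows, the `products` table, and the helper names are made up for illustration. SQLite stands in for a SQL database here because it needs no server.

```python
import csv
import io
import json
import sqlite3

# Hypothetical scraped rows.
ROWS = [
    {"name": "Kettle", "price": 24.99},
    {"name": "Toaster", "price": 31.50},
]


def to_csv(rows):
    """Serialize rows to CSV text with a header line."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=["name", "price"])
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


def to_json(rows):
    """Serialize rows to a JSON array."""
    return json.dumps(rows, indent=2)


def to_sqlite(rows, connection):
    """Insert rows into a 'products' table, creating it if needed."""
    connection.execute(
        "CREATE TABLE IF NOT EXISTS products (name TEXT, price REAL)"
    )
    connection.executemany(
        "INSERT INTO products (name, price) VALUES (:name, :price)", rows
    )
    connection.commit()


if __name__ == "__main__":
    print(to_csv(ROWS))
    print(to_json(ROWS))
    conn = sqlite3.connect(":memory:")  # use a file path to persist the data
    to_sqlite(ROWS, conn)
    print(conn.execute("SELECT name, price FROM products").fetchall())
```

Flat files are convenient for small, one-off jobs; a database tends to pay off once the data is updated repeatedly or has to be queried.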

What are the challenges associated with web scraping?

Challenges of web scraping include dealing with anti-scraping technologies, web page structure changes, and legal issues. It requires adaptability and continuous learning to overcome these obstacles effectively.

| Key Point | Description |

|---|---|

| Definition | Web scraping is the automated process of collecting information from websites. |

| Purpose | It allows businesses to gather data for analysis, market research, and competitive intelligence. |

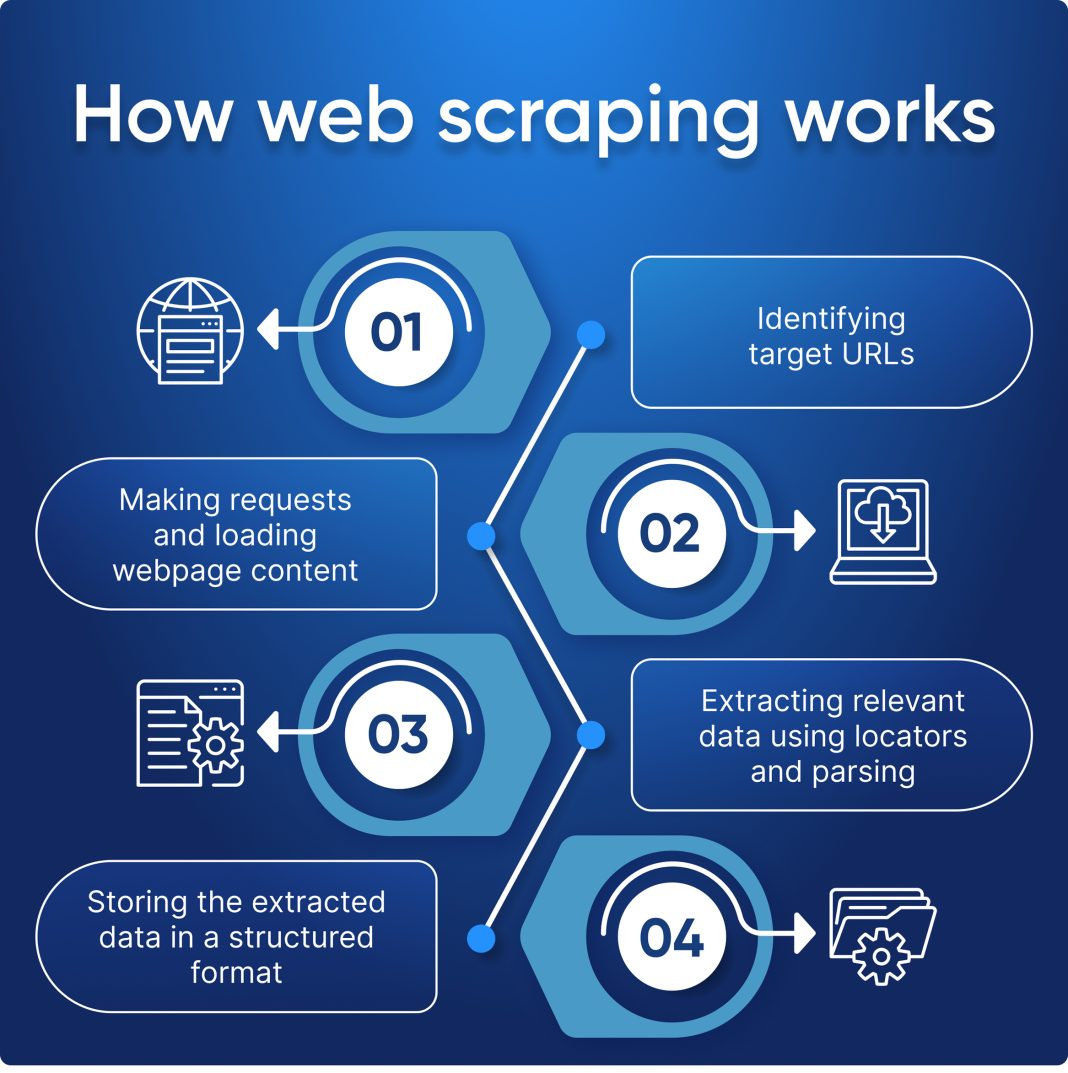

| Process | Involves requesting a webpage, parsing its HTML content for data extraction, and storing the data in a preferred format. |

| Best Practices | Respecting robots.txt, avoiding server overload, and being aware of legal implications are crucial for ethical scraping. |

Summary

Web scraping is a vital tool for businesses and organizations looking to extract valuable information from the web effectively. By understanding the processes involved and adhering to best practices, companies can harness the power of web scraping to enhance their data-driven decision-making.