Web scraping is a technique for extracting data from websites automatically. With dedicated scraping tools, users can retrieve information from many web pages at once, automating work that would otherwise be tedious to do by hand. Whether the goal is data analysis, competitive intelligence, or market research, scraping can make information gathering significantly more efficient. Many practitioners favor Python libraries such as BeautifulSoup and Scrapy for their ease of use and capabilities. In this article, we explore common web scraping techniques and tools, along with the ethical considerations to keep in mind before starting a scraping project.

Extracting data from the web, often called web harvesting or web data mining, plays a central role in today’s data-driven landscape. Automated scripts navigate websites and compile datasets for analysis or other applications. Python has become a go-to language for these tasks, thanks to its rich ecosystem of scraping libraries. Ethical considerations matter just as much: scrapers should respect website policies while applying these techniques. In the sections below, we look at responsible data acquisition methods and the tools that support efficient data retrieval.

Understanding Web Scraping Basics

Web scraping is a systematic way of extracting data from web pages. Using automated bots or software, users can navigate websites and gather relevant information efficiently. This kind of automated extraction supports tasks such as market analysis, competitive research, and big data projects. As decision-making becomes increasingly data-driven, web scraping has become a valuable skill.

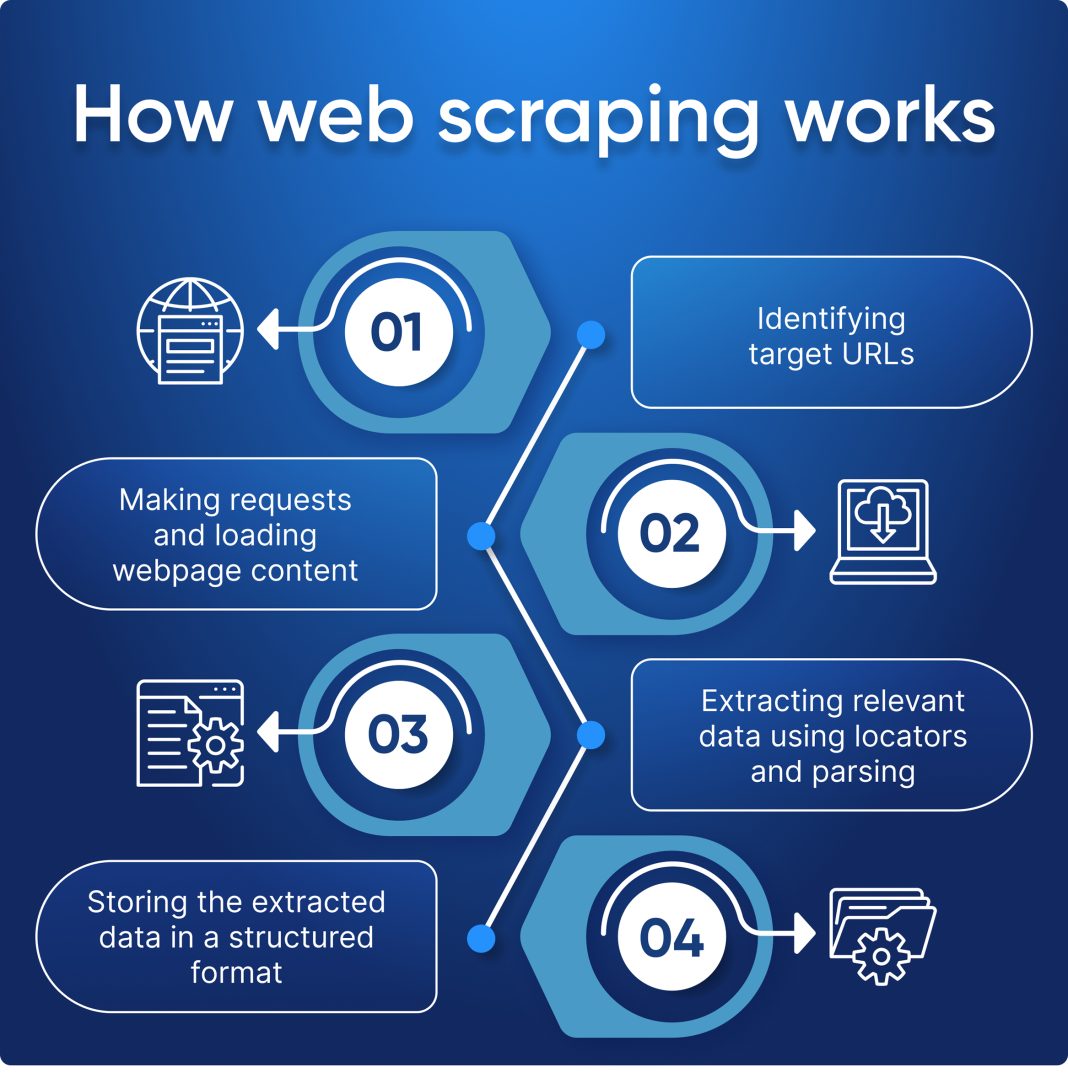

Web scraping techniques range from simple single-page extraction to complex crawls that follow links across many pages. At its core, though, every scraper performs the same three steps: send an HTTP request to a web page, retrieve its HTML content, and parse that HTML to capture the desired data. These steps turn unstructured web content into a structured format suitable for analysis or reporting.
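The three steps can be sketched with nothing but Python’s standard library. This is a minimal illustration under stated assumptions, not a production scraper: `fetch_html`, `LinkParser`, `parse_links`, and `to_csv` are hypothetical helper names, the User-Agent value is a placeholder, and the example extracts links only because almost every page has them.

```python
from __future__ import annotations

import csv
import io
import urllib.request
from html.parser import HTMLParser


def fetch_html(url: str, timeout: float = 10.0) -> str:
    """Steps 1 and 2: send an HTTP GET request and return the page's HTML as text."""
    # Hypothetical placeholder User-Agent; identify your scraper honestly.
    request = urllib.request.Request(url, headers={"User-Agent": "example-scraper/0.1"})
    with urllib.request.urlopen(request, timeout=timeout) as response:
        charset = response.headers.get_content_charset() or "utf-8"
        return response.read().decode(charset, errors="replace")


class LinkParser(HTMLParser):
    """Step 3: parse the HTML and collect (link text, href) pairs from <a> tags."""

    def __init__(self) -> None:
        super().__init__()
        self.links: list[tuple[str, str]] = []
        self._href: str | None = None  # href of the <a> element currently open, if any
        self._text: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._href = dict(attrs).get("href")
            self._text = []

    def handle_data(self, data):
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            self.links.append(("".join(self._text).strip(), self._href))
            self._href = None


def parse_links(html: str) -> list[tuple[str, str]]:
    parser = LinkParser()
    parser.feed(html)
    parser.close()
    return parser.links


def to_csv(rows: list[tuple[str, str]]) -> str:
    """Write the extracted pairs as CSV, a structured format ready for analysis."""
    buffer = io.StringIO()
    writer = csv.writer(buffer)
    writer.writerow(["text", "href"])
    writer.writerows(rows)
    return buffer.getvalue()
```

Against a live page, the pieces chain together as `to_csv(parse_links(fetch_html("https://example.com/")))`. In practice, Requests and BeautifulSoup cover the first and third steps with less code.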

Popular Web Scraping Tools

When it comes to web scraping, several tools and libraries stand out, particularly in the Python ecosystem, which owes its popularity to the language’s simplicity and the robustness of its libraries. BeautifulSoup and Scrapy are both well documented and widely used in industry. BeautifulSoup is an HTML and XML parsing library, usually paired with an HTTP client such as Requests, and works well for smaller scrapes of relatively simple HTML structures. Scrapy is a complete crawling framework that handles requests, link following, and data pipelines, which makes it better suited to larger projects that crawl many pages or sites efficiently.
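A minimal BeautifulSoup sketch, assuming the `beautifulsoup4` package is installed. The markup, the `product` and `price` class names, and the `extract_products` helper are hypothetical stand-ins for a real page; on a live site the HTML would usually come from an HTTP client, for example `requests.get(url).text`.

```python
from __future__ import annotations

from bs4 import BeautifulSoup

# Hypothetical product-listing markup standing in for a downloaded page.
HTML = """
<div class="product"><h2>Desk Lamp</h2><span class="price">$24.99</span>
  <a href="/p/lamp">details</a></div>
<div class="product"><h2>Office Chair</h2><span class="price">$129.00</span>
  <a href="/p/chair">details</a></div>
"""


def extract_products(html: str) -> list[dict]:
    """Turn each product card into a dictionary of name, numeric price, and link."""
    soup = BeautifulSoup(html, "html.parser")  # built-in parser backend
    products = []
    for card in soup.select("div.product"):  # CSS selector matching each card
        price_text = card.select_one("span.price").get_text(strip=True)
        products.append({
            "name": card.h2.get_text(strip=True),
            "price": float(price_text.lstrip("$")),
            "url": card.a["href"],
        })
    return products
```

`select()` accepts CSS selectors and `get_text(strip=True)` trims surrounding whitespace. The built-in `"html.parser"` backend avoids an extra dependency, while `"lxml"` is faster if it is installed.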

Selenium takes a different approach: it automates a real web browser, so it can scrape JavaScript-heavy sites whose content is rendered in the browser and which traditional HTTP-plus-parser libraries may struggle with. Because it can click, scroll, and fill in forms the way a user would, it is well suited to modern web applications. Choosing the right tool depends on the project requirements, the type of data to be scraped, and the complexity of the web pages involved.

Leveraging Python for Web Scraping

Python has become the go-to language for web scraping because of its simple syntax and powerful libraries. Requests fetches web pages over HTTP and BeautifulSoup parses the returned HTML, so a working scraper can take only a few lines of code. That readability suits beginners and experienced developers alike, and strong community support means plenty of documentation and examples are available.

Python also handles the other data formats scrapers often encounter, such as JSON and XML, through the `json` and `xml.etree.ElementTree` modules in its standard library. Combined with data-manipulation libraries such as Pandas, this makes it straightforward to feed scraped data into analytical workflows. In short, mastering Python for web scraping opens up many possibilities for data acquisition and analysis.
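As a sketch of that versatility, the snippet below normalizes the same data arriving as JSON and as XML into one list of dictionaries, using only `json` and `xml.etree.ElementTree`. The payload shapes and the function names are hypothetical.

```python
from __future__ import annotations

import json
import xml.etree.ElementTree as ET

# Hypothetical payloads: the same two items delivered as JSON and as XML.
JSON_PAYLOAD = '{"items": [{"id": 1, "title": "First"}, {"id": 2, "title": "Second"}]}'
XML_PAYLOAD = """<items>
  <item id="1"><title>First</title></item>
  <item id="2"><title>Second</title></item>
</items>"""


def records_from_json(text: str) -> list[dict]:
    """Decode JSON and keep only the fields we care about."""
    data = json.loads(text)
    return [{"id": int(item["id"]), "title": item["title"]} for item in data["items"]]


def records_from_xml(text: str) -> list[dict]:
    """Parse XML; ids come from attributes, titles from child elements."""
    root = ET.fromstring(text)
    return [
        {"id": int(item.get("id")), "title": item.findtext("title")}
        for item in root.iter("item")
    ]
```

Either list can be handed to `pandas.DataFrame(records)` if Pandas is installed. For XML from untrusted sources, note that the Python documentation warns `xml.etree` is not secure against maliciously constructed data.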

Ethical Web Scraping Practices

While web scraping provides valuable insights, it is essential to scrape ethically, respecting the rights of website owners and staying within the law. Ethical scraping starts with following a site’s robots.txt file, which tells automated clients which paths they may and may not crawl. The file is advisory rather than technically enforced, but ignoring it can lead to undesirable consequences, from blocked access to legal action.
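Python’s standard library can check robots.txt rules through `urllib.robotparser`. The sketch below parses a hypothetical robots.txt string directly so it runs offline; against a real site you would call `set_url()` with the site’s robots.txt URL and then `read()`. The agent name, URLs, and `make_checker` helper are placeholders.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content for illustration.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 2
"""


def make_checker(robots_txt: str) -> RobotFileParser:
    """Build a parser from robots.txt text instead of downloading it."""
    rp = RobotFileParser()
    rp.parse(robots_txt.splitlines())
    return rp


rp = make_checker(ROBOTS_TXT)
agent = "example-scraper"  # hypothetical user-agent name
for url in ["https://example.com/products", "https://example.com/private/report"]:
    print(url, "allowed" if rp.can_fetch(agent, url) else "disallowed")
print("crawl delay:", rp.crawl_delay(agent))
```

Running it reports the first URL as allowed, the `/private/` URL as disallowed, and a crawl delay of 2 seconds. Python’s parser uses simple prefix matching on paths, so it does not interpret wildcard patterns that some sites place inside `Disallow` rules.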

Ethical scraping also means not overwhelming websites with excessive requests, which can slow down or crash their servers. Throttling, that is, spacing requests out over time, keeps the load you place on a server manageable. Scraping responsibly not only protects you legally but also helps preserve the reputation of the web scraping community.
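A minimal throttling sketch: the hypothetical `Throttle` class enforces a minimum gap between consecutive requests, and the hypothetical `fetch_politely` wraps any fetch function with it. The one-second default is an assumption; a robots.txt `Crawl-delay` value, when present, is a sensible source for it.

```python
from __future__ import annotations

import time
from typing import Callable, Iterable, TypeVar

T = TypeVar("T")


class Throttle:
    """Enforce a minimum interval between consecutive requests to one site."""

    def __init__(self, min_interval: float) -> None:
        self.min_interval = min_interval
        self._last: float | None = None  # monotonic time of the previous request

    def wait(self) -> None:
        """Sleep just long enough that min_interval has passed since the last call."""
        if self._last is not None:
            remaining = self.min_interval - (time.monotonic() - self._last)
            if remaining > 0:
                time.sleep(remaining)
        self._last = time.monotonic()


def fetch_politely(urls: Iterable[str], fetch: Callable[[str], T],
                   min_interval: float = 1.0) -> list[T]:
    """Call fetch(url) for each URL, keeping requests at least min_interval seconds apart."""
    throttle = Throttle(min_interval)
    results: list[T] = []
    for url in urls:
        throttle.wait()
        results.append(fetch(url))
    return results
```

Using `time.monotonic()` rather than wall-clock time keeps the interval correct even if the system clock is adjusted while the scraper runs.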

Legal Considerations in Web Scraping

Navigating the legal landscape of web scraping is vital for ensuring compliance and avoiding legal pitfalls. Different countries have varying laws regarding data extraction and usage, making it essential for scrapers to familiarize themselves with local regulations. For example, in the United States, the Computer Fraud and Abuse Act may come into play regarding unauthorized access to systems.

Additionally, many websites have terms of service that explicitly outline their policies regarding automated data collection. Ignoring these agreements can lead to serious legal consequences, including potential lawsuits. Therefore, it’s imperative for anyone engaging in web scraping to conduct thorough research and ensure that their practices are both ethical and legally sound.

Web Scraping Techniques for Effective Data Gathering

There are several techniques employed in web scraping that cater to different needs and complexities of data extraction. One fundamental approach is DOM parsing, where scrapers retrieve and navigate the Document Object Model (DOM) of web pages to extract specific elements and attributes. This technique is foundational for those starting in web scraping as it provides a clear understanding of how HTML structures data.
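To make DOM navigation concrete, the sketch below builds a simplified element tree with the standard library’s `html.parser` and then walks it to find elements by tag and attribute. It is a teaching aid, not a real DOM implementation: `Node`, `TreeBuilder`, and `build_dom` are hypothetical names, and malformed HTML is handled only loosely. In practice, BeautifulSoup or lxml build this tree for you.

```python
from __future__ import annotations

from html.parser import HTMLParser
from typing import Iterator

# HTML elements that never take a closing tag.
VOID_TAGS = {"area", "base", "br", "col", "embed", "hr", "img", "input",
             "link", "meta", "source", "track", "wbr"}


class Node:
    """One element in a simplified DOM tree."""

    def __init__(self, tag: str, attrs: dict[str, str | None],
                 parent: Node | None = None) -> None:
        self.tag = tag
        self.attrs = attrs
        self.parent = parent
        self.children: list[Node] = []
        self.text = ""  # text appearing directly inside this element

    def walk(self) -> Iterator[Node]:
        """Depth-first traversal over this node and all of its descendants."""
        yield self
        for child in self.children:
            yield from child.walk()

    def find_all(self, tag: str, attrs: dict[str, str] | None = None) -> list[Node]:
        """Nodes with the given tag whose listed attributes match exactly."""
        wanted = attrs or {}
        return [n for n in self.walk()
                if n.tag == tag and all(n.attrs.get(k) == v for k, v in wanted.items())]


class TreeBuilder(HTMLParser):
    """Turns the parser's start/end tag events into a Node tree."""

    def __init__(self) -> None:
        super().__init__()
        self.root = Node("#document", {})
        self.current = self.root

    def handle_starttag(self, tag, attrs):
        node = Node(tag, dict(attrs), self.current)
        self.current.children.append(node)
        if tag not in VOID_TAGS:
            self.current = node  # following content belongs inside this element

    def handle_endtag(self, tag):
        # Climb to the nearest open element with this tag; ignore stray end tags.
        node = self.current
        while node is not self.root and node.tag != tag:
            node = node.parent
        if node is not self.root:
            self.current = node.parent

    def handle_data(self, data):
        self.current.text += data


def build_dom(html: str) -> Node:
    builder = TreeBuilder()
    builder.feed(html)
    builder.close()
    return builder.root
```

For example, `[[td.text for td in row.find_all("td")] for row in build_dom(html).find_all("tr")]` turns every table row into a list of cell texts. Because the tree reflects the raw HTML, it lacks elements such as `<tbody>` that browsers insert automatically, a common reason a selector copied from browser developer tools fails in a scraper.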

Another technique is to use API endpoints: publicly available interfaces that a site provides specifically for data exchange. APIs usually return structured data such as JSON, which is more reliable than parsing HTML that can change without notice, and because they are a sanctioned access route, requests to them are less likely to be blocked. Understanding these techniques and their trade-offs is key to building effective web scraping solutions.
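Calling an API typically means sending a request with query parameters and decoding a JSON response, often across several pages. The sketch below assumes a hypothetical endpoint (`https://api.example.com/v1/items`) whose responses look like `{"items": [...], "next_page": 2}`, with `next_page` set to `null` on the last page; real APIs differ in URL layout, authentication, and pagination scheme, so check the provider’s documentation.

```python
from __future__ import annotations

import json
import urllib.parse
import urllib.request
from typing import Callable

# Hypothetical endpoint used only for illustration.
API_URL = "https://api.example.com/v1/items"


def fetch_json(url: str, params: dict[str, str | int], timeout: float = 10.0) -> dict:
    """Send GET url?params and decode the JSON response body."""
    full_url = f"{url}?{urllib.parse.urlencode(params)}"
    request = urllib.request.Request(full_url, headers={"Accept": "application/json"})
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.load(response)


def collect_all(fetch_page: Callable[[int], dict]) -> list[dict]:
    """Follow page numbers until the (assumed) API reports no next page."""
    items: list[dict] = []
    page: int | None = 1
    while page is not None:
        payload = fetch_page(page)
        items.extend(payload["items"])
        page = payload.get("next_page")
    return items
```

Against the assumed endpoint, `collect_all(lambda page: fetch_json(API_URL, {"page": page}))` would gather every item. Passing the page-fetching function in as a parameter keeps the pagination loop testable without network access.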

Challenges in Data Scraping and Solutions

Despite its advantages, web scraping presents various challenges, especially with the increasing complexity of web pages and the implementation of anti-scraping technologies. Many websites now employ measures such as CAPTCHA, session verification, and dynamic content rendering, which can hinder standard scraping methods. As a result, scrapers must adapt and devise innovative strategies to overcome these obstacles.

One effective solution is to use headless browsers, driven by tools like Selenium, which execute JavaScript and expose the fully rendered page. Rotating IP addresses and varying the User-Agent header can also make scraping traffic resemble ordinary browsing and reduce the risk of IP bans, although these tactics should be weighed against the site policies and terms of service discussed earlier. By staying informed about new anti-scraping measures and the ways to handle them, you can keep your scrapers working reliably.

The Future of Web Scraping

Looking ahead, the future of web scraping appears promising, particularly with advancements in artificial intelligence and machine learning. These technologies have the potential to streamline the scraping process further and improve data extraction accuracy. As more businesses recognize the value of data-driven strategies, the demand for efficient and ethical web scraping solutions will continue to grow.

Furthermore, as regulations surrounding data privacy evolve, web scraping practices must adapt to ensure compliance. This ongoing evolution will likely lead to the development of more sophisticated tools that integrate ethical considerations while still providing valuable insights from the web. Staying abreast of these trends will be crucial for anyone aiming to leverage web scraping effectively in their operations.

Frequently Asked Questions

What is web scraping and how is it used?

Web scraping is an automated technique to extract information from websites. It’s commonly used for data analysis, market research, and competitive intelligence. By employing web scraping tools, users can efficiently gather large amounts of data from various web pages.

What are the best web scraping tools available?

Widely used options in Python include BeautifulSoup for parsing HTML, Scrapy for crawling entire sites, and Selenium for automating a browser on JavaScript-heavy pages. Together they automate the data extraction process, making it easier to retrieve and parse information from HTML documents.

Why is Python popular for web scraping?

Python is popular for web scraping due to its robust libraries like BeautifulSoup and Scrapy, which simplify the process of extracting data from websites. These libraries provide powerful functions for parsing HTML and navigating web pages, making Python a top choice for data scraping.

What are ethical considerations in web scraping?

Ethical web scraping involves respecting the site’s robots.txt file and adhering to the website’s terms of service. It’s important to ensure that web scraping does not violate any legal guidelines or lead to site blocking, ensuring that your data extraction methods are responsible.

What are some common web scraping techniques?

Common web scraping techniques include using Python libraries like BeautifulSoup for parsing HTML, employing Scrapy for comprehensive site crawling, and utilizing tools like Selenium for dynamic web pages that require interaction. These techniques enable effective data extraction.

| Key Point | Description |
|---|---|
| Definition | Web scraping is a technique for extracting information from websites using automated programs. |
| Purpose | Used for data analysis, market research, and competitive intelligence. |
| Popular Language | Python is widely used for web scraping thanks to libraries such as BeautifulSoup and Scrapy, plus Selenium for browser automation. |
| Using BeautifulSoup | Python’s BeautifulSoup parses a page’s HTML so that specific elements and their data can be extracted. |
| Legal Considerations | Check the site’s robots.txt file and adhere to its policies and terms of service to avoid legal issues. |

Summary

Web scraping is an essential technique for gathering valuable data from the web. It enables users to extract information efficiently from various sources, making it a powerful asset for businesses and researchers alike. When done ethically and within legal boundaries, web scraping can unlock insights that drive better decision-making. By utilizing programming tools such as Python and being mindful of site-specific regulations, users can navigate the intricacies of web scraping to harness its full potential.