Web scraping is the automated extraction of data from web pages. Scraping tools download page content and pull the relevant fields out of the HTML structure, which saves time and avoids the transcription errors of manual collection, making the technique useful for businesses and researchers alike. Ethical web scraping practices are essential for staying within legal standards and website terms of service. From academic research to price comparison websites, the range of data scraping applications continues to grow.

Web scraping, also called web data extraction or web harvesting, covers the automated methods used to collect information online. It is sometimes loosely described as data mining, although data mining more precisely refers to analyzing datasets that have already been collected. The process involves systematically working through HTML content to pull out the fields of interest. Sectors ranging from research to e-commerce use the resulting datasets to support decision-making. It is crucial to follow ethical web scraping guidelines to protect intellectual property and adhere to website regulations.

Understanding Web Scraping Basics

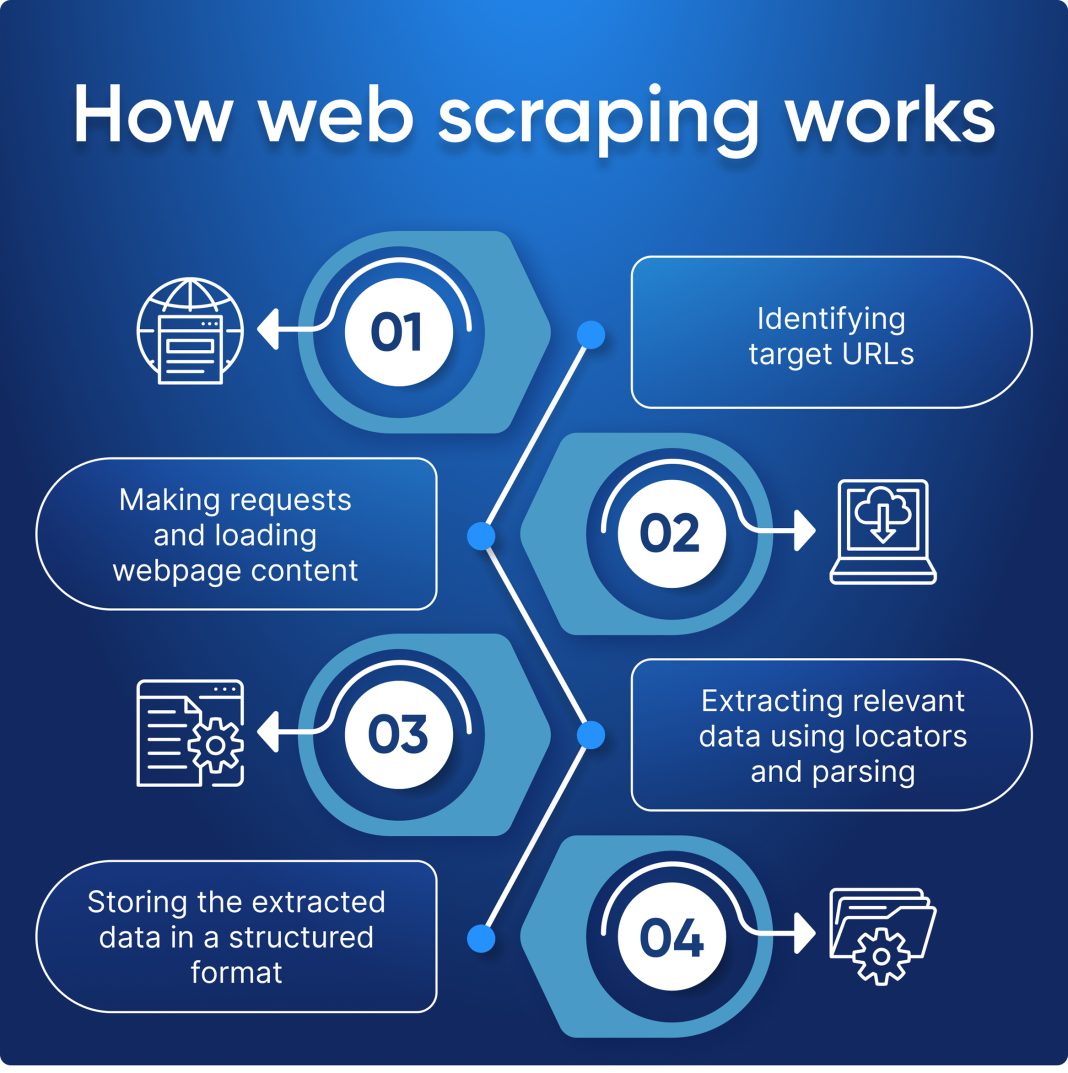

Web scraping is a powerful technique that allows users to extract data from webpages in an automated manner, offering a strategic advantage for various applications. By employing web scraping tools, users can gather data efficiently without manual input, thus saving time and increasing productivity. To understand web scraping thoroughly, one must be aware of its core functionalities, which include downloading webpage content and parsing the HTML structure to locate the desired information.
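The parsing step can be sketched with Python's standard library alone. The example below is a minimal illustration, not a production scraper: `SAMPLE_HTML`, `LinkCollector`, and `extract_links` are hypothetical names introduced here, and the HTML is an inline string standing in for a downloaded page (in practice the string might come from something like `urllib.request.urlopen`).

```python
from html.parser import HTMLParser


class LinkCollector(HTMLParser):
    """Collect (href, text) pairs for every <a href=...> element."""

    def __init__(self):
        super().__init__()
        self.links = []      # finished (href, text) pairs
        self._href = None    # href of the <a> currently open, if any
        self._text = []      # text fragments seen inside that <a>

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._href = dict(attrs).get("href")
            self._text = []

    def handle_data(self, data):
        # Only keep text that appears inside an open link.
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            self.links.append((self._href, "".join(self._text).strip()))
            self._href = None


def extract_links(html):
    """Parse an HTML string and return the links it contains."""
    parser = LinkCollector()
    parser.feed(html)
    parser.close()
    return parser.links


# Hypothetical page content used in place of a real download.
SAMPLE_HTML = """
<html><body>
  <h1>Catalog</h1>
  <a href="/items/1">First item</a>
  <a href="/items/2">Second <b>item</b></a>
</body></html>
"""

links = extract_links(SAMPLE_HTML)
```

Libraries such as Beautiful Soup wrap this kind of tree walking behind a friendlier search interface, which is why they are usually preferred over hand-written parser subclasses.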

As web developers harness web scraping for various tasks, it’s essential to explore the underlying technologies. Tools like Beautiful Soup and Scrapy make it easier to navigate the complexities of HTML data parsing. By leveraging these frameworks, developers can create scripts that automate the collection of vast datasets, which has become increasingly valuable in fields like data analysis and digital marketing.

The Importance of Ethical Web Scraping

While web scraping opens doors to immense data resources, it is paramount to engage in ethical web scraping practices. Understanding the legalities and ethical considerations surrounding data extraction helps maintain a respectful relationship with website owners. For instance, adhering to the directives in the robots.txt file is crucial, as it outlines the specific areas of the website that can be crawled. Ignoring these rules can lead to unwarranted legal complications and potential bans from the site.
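As a rough sketch of a robots.txt check, Python's standard `urllib.robotparser` module can evaluate a URL against a set of rules. The robots.txt content, the user agent name, and the URLs below are all made up for illustration; a real scraper would load the file from the target site instead of an inline string.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, inlined so the example needs no network.
SAMPLE_ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Allow: /
"""

# Hypothetical user agent string for the scraper.
USER_AGENT = "example-research-bot"


def build_robot_rules(robots_txt):
    """Parse robots.txt text into a RobotFileParser."""
    rules = RobotFileParser()
    rules.parse(robots_txt.splitlines())
    return rules


rules = build_robot_rules(SAMPLE_ROBOTS_TXT)

# Ask before fetching: is this URL permitted for our user agent?
can_fetch_public = rules.can_fetch(USER_AGENT, "https://example.com/products/1")
can_fetch_private = rules.can_fetch(USER_AGENT, "https://example.com/private/report")
```

Calling `can_fetch` before every request keeps the check close to the code that actually downloads pages, so a new URL pattern cannot slip past it unnoticed.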

Moreover, responsible web scraping also includes the ethical practice of not overwhelming the web server with excessive requests. Employing techniques such as rate limiting helps ensure that scraping activities do not disrupt the normal functionality of the website. By prioritizing ethical practices, users can harness the benefits of web scraping while respecting the digital landscape and legal regulations.
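One simple form of rate limiting is to enforce a minimum delay between consecutive requests. The `Throttle` class below is a hypothetical helper written for this illustration; the clock and sleep functions are injectable only so the behavior can be checked without real waiting.

```python
import time


class Throttle:
    """Enforce a minimum interval between consecutive requests."""

    def __init__(self, min_interval, clock=time.monotonic, sleep=time.sleep):
        self.min_interval = min_interval
        self._clock = clock
        self._sleep = sleep
        self._last_request = None  # time of the previous request, if any

    def wait(self):
        """Sleep if the previous call was too recent; return seconds slept."""
        waited = 0.0
        if self._last_request is not None:
            elapsed = self._clock() - self._last_request
            remaining = self.min_interval - elapsed
            if remaining > 0:
                self._sleep(remaining)
                waited = remaining
        self._last_request = self._clock()
        return waited
```

A scraper would call `throttle.wait()` immediately before each request, for example with `Throttle(min_interval=2.0)` to leave at least two seconds between requests. The right interval depends on the site, so the value here is only a placeholder.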

Effective Data Extraction Techniques

To achieve successful web scraping outcomes, one must use data extraction techniques that can handle the obstacles encountered along the way. First, selecting the right scraping method is critical; tools or libraries designed for robust HTML data parsing can significantly improve data retrieval accuracy. For dynamic websites, developers often use a browser automation tool such as Selenium, which can interact with pages that rely heavily on JavaScript for rendering content.

Additionally, adjustments in web scraping strategies may be necessary when faced with changing website structures. Websites frequently update their layouts, which can render existing scraping scripts ineffective. To counter this, continuous monitoring and maintenance of scraping workflows are essential, ensuring that defined extraction methods remain operational despite external modifications.

Utilizing Advanced Web Scraping Tools

The landscape of web scraping tools is continuously evolving, offering a plethora of options for data extraction tailored to diverse needs. Popular tools such as Scrapy and Beautiful Soup enable developers to efficiently gather and process information while managing complex web frameworks. These tools provide comprehensive documentation that can aid beginners in navigating the intricacies of web scraping.

Moreover, each tool comes with unique features that cater to specific data scraping applications. For instance, while Scrapy is known for its powerful spider capabilities, Beautiful Soup excels in ease of use for simple HTML parsing tasks. By evaluating the strengths of various scraping tools, users can make informed decisions that optimize the efficiency and accuracy of their data extraction processes.

Challenges of HTML Data Parsing

HTML data parsing poses its own set of challenges that can hinder the effectiveness of web scraping efforts. Variations in design and markup across different websites can lead to issues with data retrieval, as scripts built for one structure may not work uniformly for another. Scrapers must implement flexible parsing strategies that can adapt to these changes, ensuring resilience and reliability in the scraping process.
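One way to make parsing more flexible is to try several candidate locations for the same field, in order, and use the first one that matches. The sketch below assumes a price that sits in an element with class `price` on one layout and `product-price` on another; both class names, the sample markup, and the helper names are hypothetical.

```python
from html.parser import HTMLParser

# Elements that never have a closing tag, so they must not affect nesting depth.
VOID_TAGS = {"br", "img", "hr", "input", "meta", "link"}


class ClassTextExtractor(HTMLParser):
    """Capture the text of the first element carrying a given class name."""

    def __init__(self, class_name):
        super().__init__()
        self.class_name = class_name
        self.result = None
        self._depth = 0     # greater than 0 while inside the matching element
        self._parts = []

    def handle_starttag(self, tag, attrs):
        if tag in VOID_TAGS:
            return
        if self._depth:
            self._depth += 1
        elif self.result is None:
            classes = (dict(attrs).get("class") or "").split()
            if self.class_name in classes:
                self._depth = 1

    def handle_endtag(self, tag):
        if tag in VOID_TAGS or not self._depth:
            return
        self._depth -= 1
        if self._depth == 0:
            self.result = "".join(self._parts).strip()

    def handle_data(self, data):
        if self._depth:
            self._parts.append(data)


def extract_first(html, candidate_classes):
    """Try each class name in order; return (class_name, text) of the first hit."""
    for class_name in candidate_classes:
        parser = ClassTextExtractor(class_name)
        parser.feed(html)
        parser.close()
        if parser.result:
            return class_name, parser.result
    return None, None


# Two hypothetical layouts for the same field.
OLD_LAYOUT = '<div><span class="price">19.99</span></div>'
NEW_LAYOUT = '<div><p class="product-price highlighted">24.50 <small>USD</small></p></div>'
PRICE_CLASSES = ["price", "product-price"]

old_match = extract_first(OLD_LAYOUT, PRICE_CLASSES)
new_match = extract_first(NEW_LAYOUT, PRICE_CLASSES)
```

Returning the class name that matched, along with the text, makes it easy to notice when a site has switched layouts, since the fallback starts being used.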

Furthermore, some websites deploy measures such as CAPTCHAs to deter automated access, while others load content dynamically with JavaScript, so a plain HTTP request does not return the data that a browser would display. Consequently, developers need to remain up-to-date on the latest challenges in HTML data parsing, continuously adapting their techniques to ensure successful data extraction, while also prioritizing ethical considerations in their approaches.

Applications of Data Scraping

Data scraping finds diverse applications across various industries, making it an invaluable tool for businesses and researchers alike. For instance, market analysts often rely on data scraping to monitor competitors, track pricing trends, and assess consumer behavior, enabling them to make informed decisions that drive strategic planning. Similarly, academic researchers utilize web scraping to gather data for studies, enriching their analyses with real-time information.

Moreover, targeted data scraping applications extend to fields such as real estate, where agents collect listings data for comparative analysis, and e-commerce, where sellers track product prices across multiple platforms. As the demand for large datasets grows, the versatility and practicality of data scraping continue to demonstrate its importance in a data-driven world.

The Future of Web Scraping

As technology progresses, the future of web scraping looks promising, with advancements in AI and machine learning playing a significant role. The integration of smart algorithms can enhance the efficiency of data extraction tasks by automating decision-making processes and improving accuracy in data collection. Furthermore, advancements in natural language processing may lead to more nuanced scraping techniques that can glean insights from textual data.

Moreover, the continuous evolution of data privacy laws and regulations will likely have a profound impact on web scraping practices. Developers must remain vigilant about adhering to legal requirements while leveraging new technologies for data extraction. The balance between innovation and compliance will define the trajectory of web scraping, shaping it into a more sustainable and ethical domain for years to come.

Incorporating API Access in Web Scraping

In addition to traditional web scraping methods, utilizing API access presents a streamlined approach for data extraction when available. APIs provide users with structured data formats that are easier to handle compared to scraping HTML web pages. By leveraging APIs, developers can bypass the complexities of data parsing and focus on retrieving relevant information efficiently.

Furthermore, working with APIs often means accessing up-to-date data without the overhead of parsing and restructuring HTML documents. This not only saves time but also enhances the accuracy of the data collected. For organizations seeking reliable datasets for analytics or integration into their workflows, opting to use available APIs can substantially simplify their data acquisition processes.
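To illustrate why structured API responses are easier to handle, the sketch below reads a JSON payload with Python's standard `json` module. The payload shape (`products`, `id`, `name`, `price`) is invented for this example and does not describe any particular API.

```python
import json

# Hypothetical API response body; a real one would come from an HTTP request.
SAMPLE_API_RESPONSE = """
{
  "products": [
    {"id": 1, "name": "Widget", "price": 19.99},
    {"id": 2, "name": "Gadget", "price": 24.5}
  ]
}
"""


def parse_products(payload):
    """Turn the JSON payload into a list of (id, name, price) tuples."""
    data = json.loads(payload)
    return [(item["id"], item["name"], item["price"]) for item in data["products"]]


products = parse_products(SAMPLE_API_RESPONSE)
```

Each field is addressed by name and already has a type, so there is no need to locate elements in markup or convert price strings by hand.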

Legal Aspects of Web Scraping

Navigating the legal landscape surrounding web scraping is paramount for developers and businesses alike. Understanding the boundaries set by legal frameworks is crucial to avoid potential disputes and maintain compliance. Various jurisdictions have different regulations concerning data scraping, creating a complex environment that requires careful consideration before executing scraping operations.

Additionally, addressing concerns surrounding intellectual property rights is critical, as scraping protected content without permission can lead to legal challenges. By engaging in transparent practices, seeking permissions where necessary, and adhering to guidelines, entities can responsibly leverage the benefits of web scraping while minimizing risks associated with legal ramifications.

Enhancing Data Quality through Scraping Techniques

Ensuring data quality during scraping procedures is fundamental for achieving accurate and reliable results. Implementing validation checks during the extraction process helps identify and correct discrepancies, enhancing the overall integrity of the gathered datasets. For instance, data cleaning techniques can be applied post-scraping to remove duplicates, fix formatting issues, and standardize data attributes.
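A post-scraping cleaning pass might look like the sketch below, which normalizes whitespace, converts price strings to numbers, drops rows that fail validation, and removes duplicates. The record layout (`name` and `price` as strings) and the sample rows are assumptions made for this illustration.

```python
def clean_records(raw_records):
    """Standardize scraped rows and drop invalid or duplicate entries."""
    cleaned = []
    seen = set()
    for record in raw_records:
        # Collapse runs of whitespace and newlines inside the name.
        name = " ".join(record.get("name", "").split())
        # Strip currency symbols and thousands separators before converting.
        price_text = record.get("price", "").replace("$", "").replace(",", "").strip()
        try:
            price = float(price_text)
        except ValueError:
            continue  # validation check: skip rows with an unparseable price
        if not name:
            continue  # validation check: skip rows without a name
        key = (name.lower(), price)
        if key in seen:
            continue  # duplicate of a row already kept
        seen.add(key)
        cleaned.append({"name": name, "price": price})
    return cleaned


# Hypothetical scraped rows showing typical formatting problems.
RAW_RECORDS = [
    {"name": "  Widget  ", "price": "$19.99"},
    {"name": "widget", "price": "19.99"},
    {"name": "Gadget\n Pro", "price": "1,024.50"},
    {"name": "Broken", "price": "N/A"},
]

cleaned_records = clean_records(RAW_RECORDS)
```

Here duplicates are detected case-insensitively on the name plus the price. Whether that is the right notion of "duplicate" depends on the dataset, so the key is a design choice rather than a rule.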

Moreover, maintaining detailed logs of scraping activities can provide insights into the data acquisition processes, allowing for better management of errors and improvements over time. By focusing on these quality assurance practices, developers can maximize the utility of the data collected, leading to more impactful analyses and informed decision-making.

Frequently Asked Questions

What is web scraping and how does it work?

Web scraping is an automated technique used to extract data from websites. It involves downloading the contents of web pages and analyzing their HTML structure to retrieve specific information. This process is typically facilitated by web scraping tools such as Beautiful Soup and Scrapy, which help in parsing the HTML and organizing the extracted data.

What are some common web scraping tools available for data extraction?

There are several popular web scraping tools designed for data extraction, including Beautiful Soup, Scrapy, and Selenium. These tools simplify the web scraping process by providing features for HTML data parsing, allowing users to efficiently collect and organize information from multiple web sources.

What is ethical web scraping and why is it important?

Ethical web scraping refers to the practice of collecting data from websites while respecting the site’s terms of service and legal guidelines. This includes adhering to the robots.txt file, which determines permissible areas for crawling, as well as being mindful not to overload a website’s server. Ethical practices protect both the web scraper and the website being scraped.

How do I parse HTML data in web scraping?

HTML data parsing in web scraping involves using tools and libraries that can interpret and navigate the HTML structure of web pages. Libraries like Beautiful Soup allow users to search for specific data elements and extract them efficiently, making the parsing process straightforward and effective for data extraction.

What are the applications of web scraping in various industries?

Web scraping has diverse applications across multiple industries. It is widely used in market research to gather competitive data, in academic studies for collecting datasets, and in price comparison websites to aggregate prices from different retailers. These applications leverage the capabilities of web scraping to analyze large datasets for informed decision-making.

Why should I consider using APIs instead of web scraping for data extraction?

Using APIs for data extraction is often preferable when available, as APIs provide structured data formats that are easier to work with than raw HTML. They offer a more organized way to access specific data without the complexities of web scraping, such as parsing markup or repairing scripts after a layout change. APIs still come with their own terms of use and rate limits, which must be respected.

What precautions should I take while conducting web scraping?

When conducting web scraping, it’s essential to follow best practices such as checking the website’s robots.txt file, implementing rate limits to avoid server overload, and ensuring compliance with legal regulations. Additionally, be prepared to handle changes in the website’s layout that could affect scraping scripts.

| Key Point | Details |
|---|---|
| Definition | Web scraping is the automated process of gathering data from websites. |
| Tools Used | Commonly used Python tools include Beautiful Soup, Scrapy, and Selenium. |
| Robots.txt | Respect the robots.txt file to identify which parts of a site are crawlable. |
| Server Load Limits | Scraping should be done thoughtfully to avoid overloading servers, following rate limits. |
| Use of APIs | APIs provide structured data access, which is often easier than scraping HTML. |
| Application Areas | Applications include academic research, market analysis, and price comparisons. |
| Ethical Considerations | Maintain ethical practices and comply with legal standards when scraping data. |

Summary

Web scraping is an invaluable tool for data extraction, allowing users to efficiently gather information from various online sources. This process not only facilitates diverse applications such as market research and academic studies but also demands a careful approach to ethical and legal considerations. By using the right tools and respecting site rules, web scraping can yield significant insights while protecting both the scraper and the source.