Web scraping is the automated extraction of information from websites. It lets users gather large amounts of data for applications such as market research and price monitoring. Anyone using automated scraping tools also needs to be aware of the legal issues involved, including compliance with each website’s terms of service. This guide covers the main applications of web scraping, its benefits, and its best practices, from data extraction methods to legal considerations.

Also known as data harvesting or web data extraction, web scraping is increasingly common. It uses software to retrieve and compile information from online sources automatically. Users apply it to data analysis, competitive research, and content compilation. As these collection methods evolve, the ethical implications and regulations tied to automated data acquisition matter more. Understanding them helps reduce legal risk and makes scraping more useful across industries.

Understanding the Basics of Web Scraping

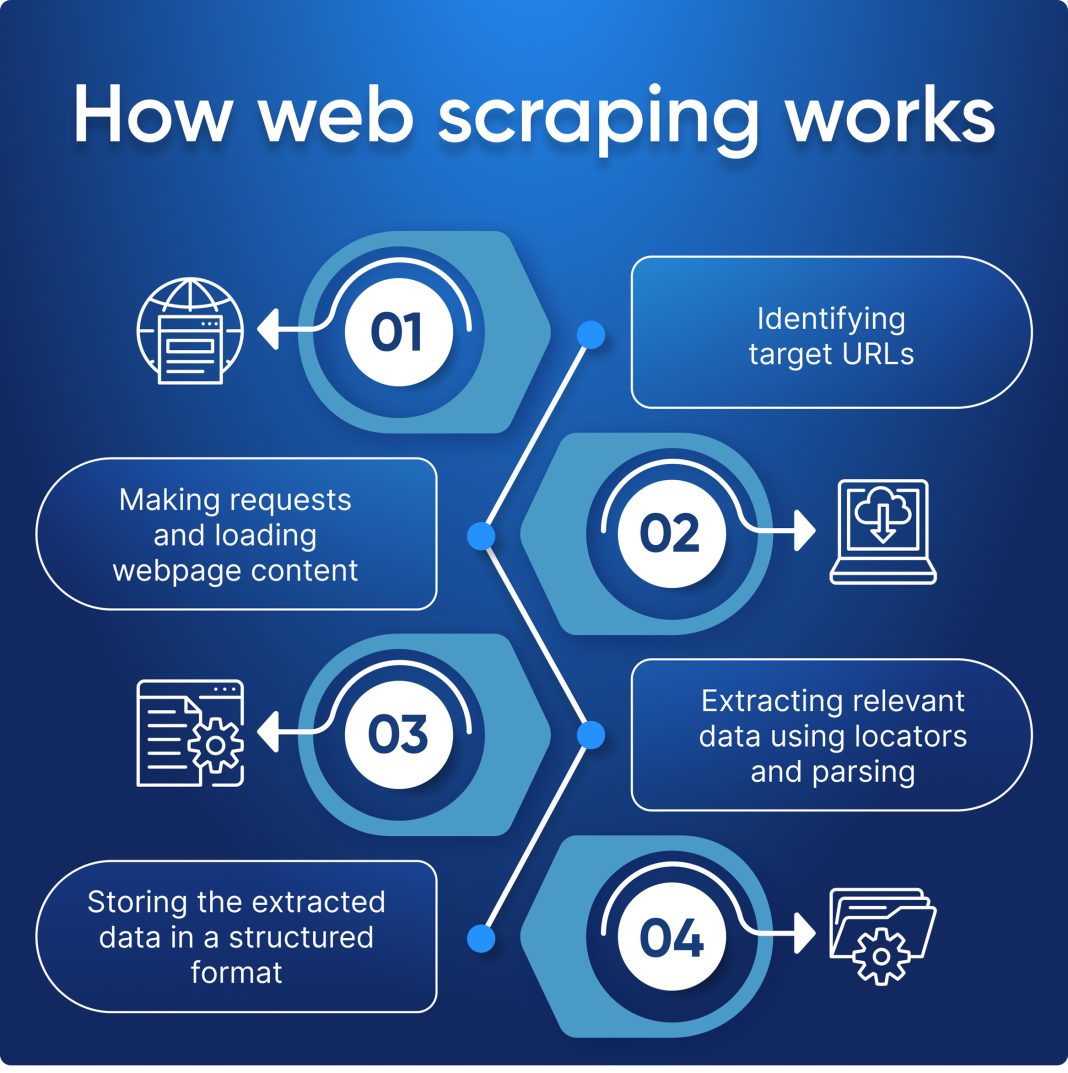

Web scraping lets users gather and analyze data from online sources efficiently. At its core, it uses scripts to automate the retrieval of content from websites. Compared with manual data entry, this saves time and reduces transcription errors. Systematic extraction allows businesses and researchers to work with the large amounts of information available on the internet.

The foundational techniques of web scraping revolve around understanding the structure of web pages, typically delivered in HTML format. By parsing this HTML, web scrapers can identify and pull specific data points into a usable format. Methods such as XPath or CSS selectors are frequently used in this extraction process, facilitating targeted data retrieval. Consequently, mastering these basics is essential for those looking to develop advanced scraping capabilities.
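As a rough illustration of parsing HTML to pull out specific data points, the sketch below collects the text of `<span>` elements by class name. It uses Python's standard-library `html.parser` rather than XPath or CSS selectors, so it approximates selector-based extraction instead of demonstrating it directly. The sample markup, the class names, and the `SpanCollector` helper are all assumptions made up for this example.

```python
from html.parser import HTMLParser

# Hypothetical page fragment; a real scraper would download this HTML first.
SAMPLE_HTML = """
<html><body>
  <ul>
    <li class="product"><span class="name">Kettle</span><span class="price">24.99</span></li>
    <li class="product"><span class="name">Toaster</span><span class="price">31.50</span></li>
  </ul>
</body></html>
"""


class SpanCollector(HTMLParser):
    """Collect the text of every <span> whose class equals `target_class`."""

    def __init__(self, target_class):
        super().__init__()
        self.target_class = target_class
        self.values = []
        self._capturing = False

    def handle_starttag(self, tag, attrs):
        # attrs arrives as a list of (name, value) pairs.
        if tag == "span" and dict(attrs).get("class") == self.target_class:
            self._capturing = True

    def handle_data(self, data):
        if self._capturing:
            self.values.append(data.strip())

    def handle_endtag(self, tag):
        if tag == "span":
            self._capturing = False


def extract(html, target_class):
    """Return the text of all matching spans, in document order."""
    parser = SpanCollector(target_class)
    parser.feed(html)
    return parser.values


names = extract(SAMPLE_HTML, "name")
prices = [float(p) for p in extract(SAMPLE_HTML, "price")]
print(list(zip(names, prices)))
```

A selector-based library would express the same lookup more compactly, but the underlying idea is the same: locate elements by their position or attributes in the HTML tree, then read their text.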

Exploring Applications of Web Scraping

Web scraping serves numerous applications across various industries, making it an invaluable resource for organizations looking to leverage data for competitive advantage. For example, e-commerce companies frequently deploy scraping techniques to monitor pricing trends across their competitors, enabling them to adjust their pricing strategies dynamically. This practice not only helps businesses stay competitive but also provides insights into market positioning.

Beyond e-commerce, web scraping plays a significant role in market research where businesses collect qualitative and quantitative data on consumer behavior and industry trends. News aggregation platforms similarly benefit from web scraping by consolidating information from different news outlets, providing users with a comprehensive view of current events in one place. Moreover, academic researchers are increasingly utilizing scraping methods to gather data for studies, demonstrating the versatility of this tool across sectors.

Web Scraping Techniques You Should Know

Effective web scraping techniques are essential for automating data collection. A common approach is to use Python libraries such as Scrapy, a crawling framework, or Beautiful Soup, an HTML parsing library, which streamline data extraction. With these tools, users can write scripts that navigate through a website, access HTML elements, and save the necessary data in structured formats such as CSV or JSON.
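The final step described above, saving extracted data in a structured format, can be sketched with Python's standard library alone. The records and field names here are hypothetical stand-ins for whatever a scraper actually extracts, and the output goes to in-memory strings rather than files to keep the example self-contained.

```python
import csv
import io
import json

# Hypothetical records, standing in for whatever a scraper extracted.
records = [
    {"name": "Kettle", "price": 24.99},
    {"name": "Toaster", "price": 31.50},
]


def to_csv_text(rows, fieldnames):
    """Serialize a list of dicts to CSV text with a header row."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=fieldnames, lineterminator="\n")
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


def to_json_text(rows):
    """Serialize the same rows to pretty-printed JSON."""
    return json.dumps(rows, indent=2)


csv_text = to_csv_text(records, ["name", "price"])
json_text = to_json_text(records)
print(csv_text)
print(json_text)
```

To write to disk instead, the same `csv.DictWriter` can be pointed at a file opened with `open(path, "w", newline="")`.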

Another important technique is to use an API where a website provides one, since APIs offer cleaner, more efficient data access. This is often regarded as a best practice because the data arrives in a structured, documented form through a channel the site owner has sanctioned, which lowers the risk of violating the website’s terms of service compared with scraping its HTML pages. APIs still come with their own terms of use and rate limits, so those need to be checked as well. Overall, mastering these techniques lets users get the most out of web scraping.
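To show why API access tends to be cleaner than parsing HTML, here is a minimal sketch that reads a JSON payload. The payload shape (`products`, `name`, `price`) is an assumption invented for illustration, since every real API defines its own schema. `fetch_json` shows how a download might look with `urllib.request`, but it is not called here so the example runs without network access.

```python
import json
import urllib.request


def fetch_json(url, timeout=10):
    """Download and decode a JSON document (defined but not called in this sketch)."""
    request = urllib.request.Request(url, headers={"Accept": "application/json"})
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))


def parse_products(payload):
    """Turn the assumed payload shape into (name, price) tuples."""
    return [(item["name"], float(item["price"])) for item in payload["products"]]


# Hypothetical response body; a real API documents its own fields.
sample_payload = json.loads(
    '{"products": [{"name": "Kettle", "price": "24.99"},'
    ' {"name": "Toaster", "price": "31.50"}]}'
)
products = parse_products(sample_payload)
print(products)
```

No tag matching or selector logic is needed: the fields are addressed by name, which is also why API-based collectors tend to break less often when a site changes its page layout.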

Automated Web Scraping Tools Overview

The rise of automated web scraping tools has transformed the landscape of data extraction, making it accessible to users without extensive programming knowledge. Tools like ParseHub and Octoparse offer user-friendly interfaces that allow individuals to visually select data points to be scraped. This simplicity ensures that even beginners can gather data efficiently without needing to write complex code.

Moreover, advanced tools provide features such as scheduled scrapes and real-time data handling. For instance, Selenium automates a real browser, which makes it possible to scrape dynamic pages that rely on JavaScript to render their content and so broadens the scope of available data. These automated solutions improve the efficiency of the scraping process and reduce manual errors.

Legal Issues Surrounding Web Scraping

Navigating the legal landscape surrounding web scraping is paramount for any organization engaged in this practice. Many websites have terms of service that explicitly prohibit scraping, and violating these terms can lead to significant legal ramifications. It is essential to conduct due diligence before scraping any website, which includes reviewing its robots.txt file and terms of service to understand the restrictions in place.
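Part of this due diligence, checking robots.txt, can be automated. The sketch below uses Python's standard `urllib.robotparser` on an inline robots.txt so that it runs offline. The rules, the `example-bot` user agent, and the example.com URLs are hypothetical. Passing a robots.txt check says nothing about a site's terms of service, which still have to be read separately.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; a real check would load the site's own file.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 5
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

USER_AGENT = "example-bot"  # assumed name for this sketch

allowed_public = parser.can_fetch(USER_AGENT, "https://example.com/products/")
allowed_private = parser.can_fetch(USER_AGENT, "https://example.com/private/data")
delay = parser.crawl_delay(USER_AGENT)

print("public allowed:", allowed_public)
print("private allowed:", allowed_private)
print("requested crawl delay (seconds):", delay)
```

Against a live site, `parser.set_url(...)` followed by `parser.read()` loads the file over HTTP instead of parsing an inline string.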

In recent years, court cases concerning web scraping have underscored the need for a careful approach. For example, lawsuits over data ownership and intellectual property are increasingly common, and they test where acceptable scraping ends. Businesses should therefore understand the potential legal implications and consider consulting legal professionals before engaging in web scraping activities.

Future Trends in Web Scraping Technology

As technology continues to advance, the future of web scraping promises to be both exciting and complex. Emerging technologies, such as artificial intelligence and machine learning, are beginning to influence web scraping methodologies. These technologies can enable more accurate data extraction by intelligently determining which data is most relevant for specific needs, thereby enhancing the efficiency of the scraping process.

Additionally, as regulations surrounding data scraping evolve, tools and techniques must adapt to comply with new legal frameworks efficiently. Future developments may lead to more sophisticated tools that not only respect website policies but also navigate complex legal landscapes intelligently. This evolution will likely carve out a niche for ethical scraping practices that balance data utilization with respect for copyright and intellectual property rights.

Best Practices for Effective Web Scraping

Following a few best practices makes scraping more reliable and less risky. First, always respect the website’s robots.txt file, which tells automated clients which paths the site owner does not want crawled. Honoring it follows ethical guidelines and reduces the risk of legal repercussions, although it does not replace reviewing the terms of service. Second, space out your requests with polite intervals to reduce the load on the website’s server, so that your scraping does not disrupt its operations.
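One possible way to enforce polite intervals is a small throttle that guarantees a minimum gap between consecutive requests. The `Throttle` class below is a hypothetical helper written for this example, not part of any scraping library, and the example.com URLs are placeholders. The demo uses a very short interval so it finishes quickly. A real scraper would usually wait a second or more, or follow the site's stated crawl delay.

```python
import time


class Throttle:
    """Enforce a minimum delay between consecutive requests."""

    def __init__(self, min_interval, clock=time.monotonic, sleep=time.sleep):
        self.min_interval = min_interval
        self._clock = clock
        self._sleep = sleep
        self._last = None  # time of the previous request, if any

    def wait(self):
        """Sleep just long enough to keep the minimum gap; return seconds slept."""
        slept = 0.0
        if self._last is not None:
            remaining = self.min_interval - (self._clock() - self._last)
            if remaining > 0:
                self._sleep(remaining)
                slept = remaining
        self._last = self._clock()
        return slept


# Demo with a tiny interval; the fetch itself is left out on purpose.
throttle = Throttle(min_interval=0.01)
for url in ["https://example.com/page/1", "https://example.com/page/2"]:
    throttle.wait()
    print("would fetch", url)
```

The clock and sleep functions are injectable so the timing logic can be tested without actually waiting.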

Another best practice involves regularly updating your scraping scripts and methods. Websites frequently redesign their pages, which can break existing scrapers. By maintaining flexibility and updating your technology accordingly, you can ensure continued success in data extraction endeavors. Additionally, having a contingency plan for handling changes in scraping permissions or website structures can safeguard against future setbacks.
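A simple way to notice that a redesign has broken a scraper is to validate each batch of extracted records before using it. The sketch below is one assumed approach: `find_problems` and the field names are hypothetical, and the checks (a minimum record count and non-empty required fields) are only a starting point.

```python
def find_problems(records, required_fields, min_records=1):
    """Return human-readable problems that suggest the page layout changed."""
    problems = []
    if len(records) < min_records:
        problems.append(
            f"expected at least {min_records} records, got {len(records)}"
        )
    for index, record in enumerate(records):
        for field in required_fields:
            # Treat absent keys, None, and empty strings as missing values.
            if record.get(field) in (None, ""):
                problems.append(f"record {index}: missing '{field}'")
    return problems


# Hypothetical batches: one healthy, one where the price selector stopped matching.
healthy = [{"name": "Kettle", "price": "24.99"}]
broken = [{"name": "Kettle", "price": ""}]

print(find_problems(healthy, ["name", "price"]))
print(find_problems(broken, ["name", "price"]))
print(find_problems([], ["name", "price"]))
```

Running a check like this after every scrape turns a silent failure, such as empty or partial data, into an explicit signal that the script needs updating.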

Combining Web Scraping with Data Analysis

Web scraping, when combined with data analysis, can yield profound insights and drive business decisions effectively. After extracting data from various web sources, organizations can apply data analysis techniques to uncover trends, correlations, and actionable insights. This synergy allows businesses to tap into previously inaccessible information and use it to enhance strategic planning and marketing efforts.
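As a small illustration of turning scraped data into something analyzable, the sketch below summarizes prices per product with Python's `statistics` module. The observations, shop names, and the `summarize` helper are invented for this example. Real analysis would typically work on far more data and track it over time.

```python
from statistics import mean, median

# Hypothetical scraped observations: (shop, product, price).
observations = [
    ("shop-a", "kettle", 24.99),
    ("shop-b", "kettle", 22.50),
    ("shop-c", "kettle", 27.00),
    ("shop-a", "toaster", 31.50),
    ("shop-b", "toaster", 29.00),
]


def summarize(rows):
    """Group prices by product and compute min, median, and mean."""
    by_product = {}
    for _shop, product, price in rows:
        by_product.setdefault(product, []).append(price)
    return {
        product: {
            "min": min(prices),
            "median": median(prices),
            "mean": round(mean(prices), 2),
        }
        for product, prices in by_product.items()
    }


summary = summarize(observations)
for product, stats in summary.items():
    print(product, stats)
```

Summaries like these can then be compared across scraping runs to spot trends, such as a competitor steadily lowering its price on one product.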

Employing analytical tools alongside scraping not only enriches data quality but also transforms raw information into meaningful narratives that support decision-making. By integrating web scraping with visualization tools like Tableau or data analytics platforms, organizations can present findings in an easily digestible format, making them more compelling and actionable for stakeholders.

The Importance of Web Scraping in Big Data

In the era of big data, web scraping has become an integral practice for acquiring unstructured data from the web, which vastly increases knowledge bases across industries. By systematically gathering data from diverse online sources, organizations can enhance their datasets, enabling richer analytics and insights that drive innovation and growth. This collection method plays a critical role in supporting data-driven decision-making.

Moreover, as the volume of data from online sources continues to soar, web scraping techniques will likely become more sophisticated, incorporating advanced technologies. This evolution is essential to handle vast amounts of information efficiently, ensuring that businesses can stay ahead of their competition by leveraging actionable data extracted from the web.

Frequently Asked Questions

What are the most effective web scraping techniques available?

The most effective web scraping techniques include using automated tools like Beautiful Soup and Scrapy, employing browser automation with Selenium, and utilizing APIs for structured data access. These techniques help streamline data extraction processes, ensuring efficiency and accuracy when gathering information from websites.

What are the key legal issues surrounding web scraping?

Key legal issues surrounding web scraping involve violating a website’s terms of service, potential copyright infringement, and data privacy concerns. It’s crucial to review the legal status of the website being scraped and to follow ethical guidelines to avoid any legal repercussions.

What are the common applications of web scraping?

Common applications of web scraping include price monitoring for e-commerce, market research to analyze trends, competitive analysis, and content aggregation from various online sources. Businesses leverage web scraping to gather valuable insights and enhance their data-driven decision-making.

What are some popular data extraction methods used in web scraping?

Popular data extraction methods in web scraping include manual copying, automated scraping using tools like Scrapy or Beautiful Soup, and fetching data via APIs. Each method has its advantages, with automated tools providing efficiency and speed.

What are some effective automated web scraping tools?

Effective automated web scraping tools include Beautiful Soup for parsing HTML, Scrapy for building web crawlers, and Selenium for dynamic websites. These tools simplify the web scraping process, making it accessible for users with varying levels of programming knowledge.

| Key Point | Description |
|---|---|
| Web Scraping Definition | The automated process of extracting information from websites, enabling systematic data gathering from the internet. |
| Applications | Includes price monitoring, market research, and content aggregation. |
| Methods | Involves manual copying, automated tools (like Beautiful Soup and Scrapy), and APIs for simpler access. |
| Legal Considerations | Understanding the terms of service of websites is essential to avoid legal issues from unauthorized scraping. |

Summary

Web scraping is an essential digital tool for extracting valuable data from online sources. As explored in this guide, web scraping can be efficiently applied in various fields such as market research and competitive analysis. However, it is crucial to employ proper methods and remain aware of the legal boundaries to ensure responsible scraping practices.