Web scraping is a technique for automated data collection in which a program retrieves web pages and extracts specific information from them. By fetching pages programmatically, individuals and businesses can gather data such as product prices and customer reviews far more quickly than by copying it by hand. Beyond supporting research, web scraping powers applications like price comparison scraping, which helps consumers find the best deals. In this article, we cover the essentials of web scraping, the tools commonly used to streamline it, and how it can support your approach to data analysis.

Web scraping, also called data harvesting or web data extraction, systematically collects information from online sources. As big data analytics has grown, so has the demand for data aggregation, and a range of tools now exists to automate the collection. With these tools, businesses can gather information ranging from market trends to competitors’ pricing. The sections below cover the fundamentals of the approach and its applications across industries.

Understanding the Fundamentals of Web Scraping

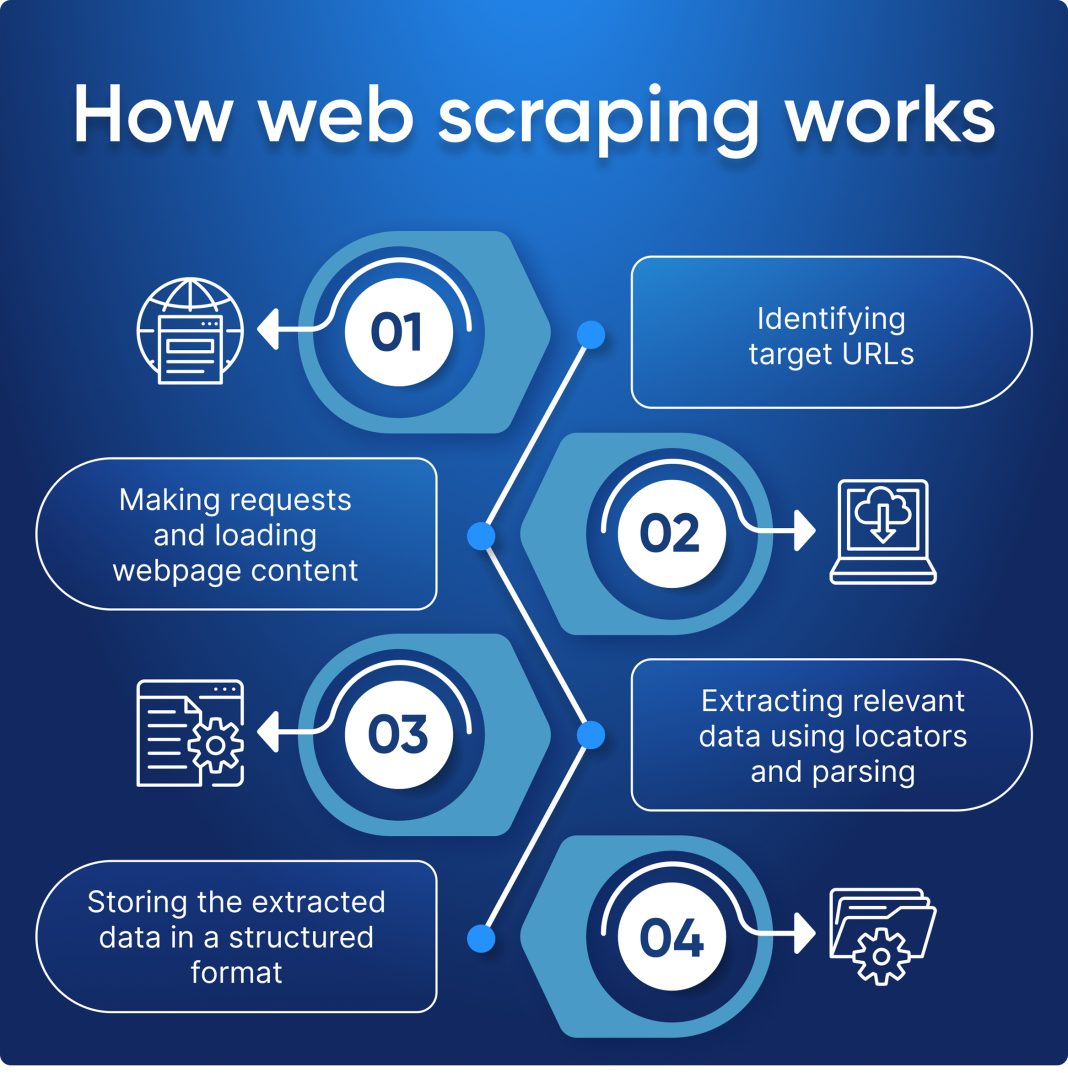

Web scraping is the technique of retrieving data from web pages programmatically. A scraper sends an HTTP request to a web server, receives the HTML of the page, and parses that HTML to extract the information it needs. This requires an understanding of HTML and page structure, because you must identify the specific elements that contain the data. Automating this procedure saves businesses significant time and resources compared with manual collection and delivers the data in a form ready for decision-making.
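To make the request-and-parse cycle concrete, here is a minimal sketch using only Python’s standard library (`urllib.request` and `html.parser`) rather than a dedicated scraping library. The helper names `fetch_html` and `extract`, and the `example-scraper/0.1` User-Agent string, are hypothetical choices for illustration; the parser simply collects the page title and link targets.

```python
from html.parser import HTMLParser
from typing import List, Tuple
from urllib.request import Request, urlopen


def fetch_html(url: str, timeout: float = 10.0) -> str:
    """Send an HTTP GET request and return the response body as text."""
    # Identify the scraper to the server; this name is a placeholder.
    request = Request(url, headers={"User-Agent": "example-scraper/0.1"})
    with urlopen(request, timeout=timeout) as response:
        charset = response.headers.get_content_charset() or "utf-8"
        return response.read().decode(charset, errors="replace")


class TitleAndLinkParser(HTMLParser):
    """Collect the page <title> text and the href of every <a> element."""

    def __init__(self) -> None:
        super().__init__()
        self.title = ""
        self.links: List[str] = []
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        if tag == "title":
            self._in_title = True
        elif tag == "a":
            href = dict(attrs).get("href")
            if href:  # skip anchors without a target
                self.links.append(href)

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def extract(html: str) -> Tuple[str, List[str]]:
    """Parse HTML and return (title, links)."""
    parser = TitleAndLinkParser()
    parser.feed(html)
    parser.close()
    return parser.title.strip(), parser.links
```

On a live site you would call something like `extract(fetch_html("https://example.com/"))`; real pages usually need more targeted selection logic than collecting every link.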

Modern web scraping relies on libraries and tools that streamline the process, including fetching and parsing many pages in a single run. This kind of automated data collection is now common across industries, whether for tracking changes to a website or gathering competitors’ pricing, and it often supplies the raw data for downstream analytics.

Applications of Web Scraping in Business

Web scraping applies to many business models, improving both operational efficiency and market understanding. For instance, companies can scrape reviews and customer feedback from social media and e-commerce platforms and analyze them at scale. The extracted data helps them identify market trends, understand consumer behavior, and tailor their products or services to specific needs.

Price comparison scraping is one of the most prominent applications in the retail sector. E-commerce businesses use it to stay competitive by aggregating pricing information from competitors’ sites. By refreshing their databases with current prices on a regular schedule, they can adjust their own pricing to attract customers while maintaining profit margins.
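The core of a price comparison is turning the price text scraped from each competitor into numbers that can be compared. The sketch below assumes US-style price strings (comma as thousands separator, dot as decimal point) and a hypothetical dictionary mapping competitor names to the raw text scraped from their product pages; other locales would need different parsing, and the function names are illustrative only.

```python
import re
from decimal import Decimal
from typing import Dict, Optional, Tuple

# Assumption: US-style prices such as "$1,299.99" (comma = thousands separator).
PRICE_PATTERN = re.compile(r"\d[\d,]*(?:\.\d+)?")


def parse_price(text: str) -> Optional[Decimal]:
    """Return the first price-like number in text, or None if there is none."""
    match = PRICE_PATTERN.search(text)
    if match is None:
        return None
    return Decimal(match.group().replace(",", ""))


def cheapest(offers: Dict[str, str]) -> Optional[Tuple[str, Decimal]]:
    """Given {competitor: scraped price text}, return (competitor, price)
    for the lowest parsable price, ignoring entries without a price."""
    prices = {}
    for competitor, raw in offers.items():
        price = parse_price(raw)
        if price is not None:
            prices[competitor] = price
    if not prices:
        return None
    best = min(prices, key=prices.__getitem__)
    return best, prices[best]
```

`Decimal` is used instead of `float` so that monetary values are not subject to binary rounding errors.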

Popular Web Scraping Tools and Technologies

Several tools stand out for executing web scraping efficiently. Beautiful Soup is a Python library for parsing HTML and XML documents: it builds a parse tree from the markup and provides simple methods for navigating, searching, and modifying that tree. This simplicity makes it a go-to choice for many developers.
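With Beautiful Soup, selecting elements typically looks like `soup.find_all("span", class_="price")` followed by `get_text()` on each result. To keep the example free of third-party dependencies, the sketch below approximates that kind of search with the standard library’s `html.parser`. The class `ClassTextExtractor` and helper `find_texts` are hypothetical, and the approach lacks Beautiful Soup’s tree navigation and choice of parsers.

```python
from html.parser import HTMLParser
from typing import List


class ClassTextExtractor(HTMLParser):
    """Collect the text inside every <tag> element whose class list
    contains css_class (a rough analogue of find_all(tag, class_=...))."""

    def __init__(self, tag: str, css_class: str) -> None:
        super().__init__()
        self.tag = tag
        self.css_class = css_class
        self.results: List[str] = []
        self._depth = 0  # > 0 while inside a matching element
        self._chunks: List[str] = []

    def handle_starttag(self, tag, attrs):
        if self._depth:
            # Track nested elements of the same tag so we close correctly.
            if tag == self.tag:
                self._depth += 1
            return
        classes = (dict(attrs).get("class") or "").split()
        if tag == self.tag and self.css_class in classes:
            self._depth = 1
            self._chunks = []

    def handle_endtag(self, tag):
        if self._depth and tag == self.tag:
            self._depth -= 1
            if self._depth == 0:
                self.results.append("".join(self._chunks).strip())

    def handle_data(self, data):
        if self._depth:
            self._chunks.append(data)


def find_texts(html: str, tag: str, css_class: str) -> List[str]:
    """Return the stripped text of each matching element, in document order."""
    parser = ClassTextExtractor(tag, css_class)
    parser.feed(html)
    parser.close()
    return parser.results
```

For example, `find_texts(page_html, "span", "price")` would return the text of every `<span>` carrying the `price` class, including elements with additional classes.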

Another powerful tool is Scrapy, an open-source web crawling framework for Python. It is built for large-scale scraping and provides selectors (CSS and XPath) for extracting data, built-in handling of requests and responses, and export of scraped items to formats such as JSON and CSV. Its extensible architecture lets developers write custom spiders, classes that define how to crawl a site and what to extract from each page, to harvest information from many different sites.
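A spider’s central job is to start from one or more URLs, extract links from each page, and schedule the unvisited ones for crawling. The standard-library sketch below illustrates only that idea; it is not Scrapy’s API, and it omits the concurrency, throttling, and middleware a framework provides. The `fetch` parameter is an assumption: any function that maps a URL to its HTML, injected so the crawler can be tested offline.

```python
from collections import deque
from html.parser import HTMLParser
from typing import Callable, Dict, List
from urllib.parse import urldefrag, urljoin, urlparse


class LinkParser(HTMLParser):
    """Collect href values from <a> tags."""

    def __init__(self) -> None:
        super().__init__()
        self.hrefs: List[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.hrefs.append(href)


def crawl(start_url: str, fetch: Callable[[str], str],
          max_pages: int = 50) -> Dict[str, str]:
    """Breadth-first crawl that stays on start_url's host.
    Returns {url: html} for every page fetched successfully."""
    host = urlparse(start_url).netloc
    queue = deque([start_url])
    seen = {start_url}
    pages: Dict[str, str] = {}
    while queue and len(pages) < max_pages:
        url = queue.popleft()
        try:
            html = fetch(url)
        except OSError:
            continue  # network/HTTP errors: skip this page, keep crawling
        pages[url] = html
        parser = LinkParser()
        parser.feed(html)
        for href in parser.hrefs:
            # Resolve relative links and drop "#fragment" parts so the
            # same page is not queued twice.
            absolute, _fragment = urldefrag(urljoin(url, href))
            if urlparse(absolute).netloc == host and absolute not in seen:
                seen.add(absolute)
                queue.append(absolute)
    return pages
```

Restricting the crawl to the starting host and capping `max_pages` keeps an experiment from wandering across the web.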

Comparative Analysis of Scraping Techniques

Which scraping technique to use depends on the complexity of the target website and the type of data required. The traditional method is static scraping, in which the scraper retrieves and parses only the HTML returned by the server. However, many modern pages use JavaScript to load content after the initial HTML arrives, so that content never appears in the raw response. For these pages, headless browser scraping becomes necessary: tools like Selenium drive a real browser (optionally without a visible window), letting developers interact with pages as a normal user would and extract the dynamically generated content.
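A quick way to decide between static and headless-browser scraping is to check whether the data you want is present in the raw HTML the server returns. The heuristic below is a rough sketch under that assumption: the function name `choose_strategy` is hypothetical, and it treats text that appears only inside `<script>` blocks as dynamic, even though such embedded data can sometimes be parsed without a browser.

```python
import re

# Matches whole <script>...</script> blocks, case-insensitively, across lines.
SCRIPT_BLOCK = re.compile(r"<script\b.*?</script\s*>", re.IGNORECASE | re.DOTALL)


def choose_strategy(raw_html: str, expected_text: str) -> str:
    """Return "static" if expected_text appears in the server-rendered markup,
    otherwise "headless-browser" (the content is probably added by JavaScript)."""
    markup_only = SCRIPT_BLOCK.sub("", raw_html)
    return "static" if expected_text in markup_only else "headless-browser"
```

In practice you would fetch `raw_html` with a plain HTTP request and use as `expected_text` a value you can see on the page in a normal browser.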

Furthermore, targeted methods such as API scraping obtain data directly from a website’s API where one is offered. APIs return structured data (commonly JSON) that does not break when the page layout changes, and because they are designed to serve such requests efficiently, they typically place less load on the server than downloading and parsing full pages. Understanding when to apply each technique is crucial for optimizing extraction speed and minimizing the chance of being blocked by web servers.
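When an API is available, the scraper swaps HTML parsing for JSON decoding. The sketch below assumes a hypothetical endpoint returning a payload shaped like `{"products": [{"name": ..., "price": ...}]}`; real APIs differ in schema, authentication, and rate limits, so check the provider’s documentation before relying on any structure.

```python
import json
from typing import Any, Dict
from urllib.request import Request, urlopen


def fetch_json(url: str, timeout: float = 10.0) -> Any:
    """GET a JSON API endpoint and return the decoded payload."""
    request = Request(url, headers={"Accept": "application/json",
                                    "User-Agent": "example-scraper/0.1"})
    with urlopen(request, timeout=timeout) as response:
        return json.load(response)


def prices_from_payload(payload: Dict[str, Any]) -> Dict[str, float]:
    """Map product name -> price for a payload shaped like
    {"products": [{"name": ..., "price": ...}, ...]} (hypothetical schema)."""
    return {item["name"]: float(item["price"])
            for item in payload.get("products", [])}
```

Usage against a live service would look like `prices_from_payload(fetch_json(api_url))`, where `api_url` is whatever endpoint the provider documents.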

Legal and Ethical Considerations for Web Scraping

While web scraping offers many advantages, it’s vital to navigate the legal landscape carefully. Websites often have terms of service that prohibit unauthorized data extraction, and scraping in violation of these terms can lead to IP bans, lawsuits, or other legal consequences. It’s therefore essential to review these conditions and ensure compliance to avoid conflicts with site owners.

Moreover, ethical scraping practices can enhance a business’s reputation. These include being transparent about how data is used, respecting robots.txt files, which indicate the parts of a site that web crawlers should not access, and ensuring that scraped data is used responsibly. By adhering to ethical guidelines, businesses can avoid backlash and foster long-term relationships with clients and data sources.
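Python’s standard library includes `urllib.robotparser` for reading robots.txt rules. The sketch below parses a sample file held in a string so it runs offline; the rules, the `shop.test` URLs, and the `example-scraper` user-agent name are all hypothetical. Against a live site you would instead pass the robots.txt URL to `RobotFileParser` and call `read()`.

```python
from urllib.robotparser import RobotFileParser

USER_AGENT = "example-scraper"  # hypothetical crawler name

# Hypothetical robots.txt content; a real crawler would fetch the site's file
# with RobotFileParser("https://example.com/robots.txt").read().
SAMPLE_ROBOTS = """\
User-agent: *
Disallow: /checkout/
Crawl-delay: 2
"""

robots = RobotFileParser()
robots.parse(SAMPLE_ROBOTS.splitlines())

for url in ("https://shop.test/products/1", "https://shop.test/checkout/cart"):
    verdict = "allowed" if robots.can_fetch(USER_AGENT, url) else "disallowed"
    print(url, verdict)

# Crawl-delay is a widely used but non-standard directive; None if absent.
print("crawl delay:", robots.crawl_delay(USER_AGENT))
```

With these sample rules, the product page is reported as allowed, the checkout page as disallowed, and the crawl delay as 2 seconds.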

Optimizing Web Scraping Processes for Efficiency

To maximize the efficiency of web scraping, it’s crucial to implement optimization strategies. Because a scraper spends most of its time waiting for network responses, multi-threading lets several threads fetch different pages concurrently, which can speed up extraction significantly. Error handling matters just as much: retrying requests that fail because of network errors or timeouts, and recording pages that still fail, lets the crawler keep running without losing the data it has already collected.
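The sketch below combines both ideas, using `concurrent.futures.ThreadPoolExecutor` for concurrency and a simple retry loop with exponential backoff. It assumes network failures surface as `OSError` (as `urllib` errors and socket timeouts do); the function names are hypothetical, and `fetch` is any function mapping a URL to page content, for example a thin wrapper around `urllib.request.urlopen`.

```python
import time
from concurrent.futures import ThreadPoolExecutor, as_completed
from typing import Callable, Dict, Iterable, Tuple

Fetch = Callable[[str], str]


def fetch_with_retry(fetch: Fetch, url: str, attempts: int = 3,
                     backoff: float = 0.5) -> str:
    """Call fetch(url); on OSError wait backoff * 2**n seconds and retry.
    The last error is re-raised once all attempts are used."""
    for attempt in range(attempts):
        try:
            return fetch(url)
        except OSError:
            if attempt == attempts - 1:
                raise
            time.sleep(backoff * 2 ** attempt)
    raise ValueError("attempts must be at least 1")


def scrape_all(urls: Iterable[str], fetch: Fetch, max_workers: int = 4,
               attempts: int = 3, backoff: float = 0.5
               ) -> Tuple[Dict[str, str], Dict[str, str]]:
    """Fetch URLs concurrently. Returns (results, failures) so that pages
    which keep failing are recorded without discarding successful ones."""
    results: Dict[str, str] = {}
    failures: Dict[str, str] = {}
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = {pool.submit(fetch_with_retry, fetch, url, attempts, backoff): url
                   for url in urls}
        for future in as_completed(futures):
            url = futures[future]
            try:
                results[url] = future.result()
            except OSError as exc:
                failures[url] = repr(exc)
    return results, failures
```

Keep `max_workers` modest: more threads mean more simultaneous load on the target server, which works against the politeness practices described elsewhere in this article.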

Caching can also play a vital role in optimizing scraping tasks. By storing previously fetched pages, businesses can avoid redundant requests to web servers, which both speeds up scraping and limits bandwidth consumption. With these strategies in place, organizations can achieve efficient and reliable data extraction.
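A minimal in-memory cache can be sketched as a wrapper around any fetch function. The time-to-live (`ttl`) is an assumption worth tuning: scraped data such as prices goes stale, so cached pages should expire rather than be reused forever. The class name `CachedFetcher` is hypothetical, and the injectable `clock` parameter exists only to make the behavior easy to test.

```python
import time
from typing import Callable, Dict, Tuple


class CachedFetcher:
    """Wrap fetch(url) with an in-memory cache; entries expire after ttl seconds."""

    def __init__(self, fetch: Callable[[str], str], ttl: float = 3600.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._fetch = fetch
        self._ttl = ttl
        self._clock = clock
        self._cache: Dict[str, Tuple[float, str]] = {}  # url -> (fetched_at, body)

    def get(self, url: str) -> str:
        now = self._clock()
        hit = self._cache.get(url)
        if hit is not None and now - hit[0] < self._ttl:
            return hit[1]  # fresh cached copy: no network request
        body = self._fetch(url)
        self._cache[url] = (now, body)
        return body
```

In a multi-threaded scraper, two threads that miss on the same URL at the same moment may both fetch it; guarding `get` with a lock would prevent that at the cost of some concurrency.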

Choosing the Right Web Scraping Tool for Your Needs

The choice of a web scraping tool often depends on the specific requirements of the project. For users who need simple data extraction and have little coding experience, tools like ParseHub or Octoparse provide visual, point-and-click interfaces that make data gathering possible without programming. Some of these tools also offer pre-built templates for common tasks like price comparison scraping.

On the other hand, for developers looking for more control and customization, programming libraries such as Scrapy or Beautiful Soup provide the flexibility to build tailored scraping solutions. These solutions can adapt to varying website structures and can be integrated into larger applications, offering the versatility required for intricate data extraction tasks.

The Future of Web Scraping: Trends and Innovations

As technology continues to evolve, so do the tools and methods of web scraping. One of the key trends is the shift towards AI-driven scraping solutions. These advanced tools leverage machine learning algorithms to improve data extraction accuracy, automate the identification of data points, and adapt to changes in web layouts without manual updates. This not only streamlines the scraping process but also enhances the quality of the gathered data.

Additionally, with the increasing focus on privacy and data protection, innovations that prioritize ethical scraping practices will likely gain traction. Companies are exploring consent-based scraping, where data is collected with the website owner’s permission, and systems that promote transparency in data use. This shift may redefine the standards and practices surrounding web scraping, leading to more responsible data collection methods in the future.

Getting Started with Web Scraping: Best Practices

For those new to web scraping, it’s essential to start with best practices. Begin by studying the website’s structure and identifying exactly which data you aim to collect. Choose tools suited to the site, for example a parsing library for static HTML or a browser automation tool for JavaScript-heavy pages. Conduct small test runs on a few pages before launching a full scraping operation to uncover potential barriers or errors in the process.

Another important practice is to respect the limits set by websites. This includes honoring any crawl-delay setting in the robots.txt file and avoiding bursts of requests that could overwhelm the server. Scraping judiciously reduces your risk of being blocked and helps keep online resources available for everyone.
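Honoring a crawl delay amounts to spacing requests at least a fixed number of seconds apart. The sketch below is a hypothetical single-threaded throttle; the delay would come from the site’s robots.txt (for example via `RobotFileParser.crawl_delay`) or from your own conservative default, and the `clock` and `sleep` parameters are injectable only for testing.

```python
import time
from typing import Callable, Optional


class PoliteThrottle:
    """Ensure at least `delay` seconds pass between consecutive requests."""

    def __init__(self, delay: float,
                 clock: Callable[[], float] = time.monotonic,
                 sleep: Callable[[float], None] = time.sleep) -> None:
        self.delay = delay
        self._clock = clock
        self._sleep = sleep
        self._last: Optional[float] = None  # time of the previous request

    def wait(self) -> None:
        """Block until the next request is allowed, then record its time."""
        now = self._clock()
        if self._last is not None:
            remaining = self.delay - (now - self._last)
            if remaining > 0:
                self._sleep(remaining)
                now = self._clock()
        self._last = now
```

Call `throttle.wait()` immediately before each request; the first call returns at once, and later calls sleep only for whatever part of the delay has not already elapsed.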

Frequently Asked Questions

What is web scraping and how does it work?

Web scraping is the automated process of extracting data from websites. By using web scraping tools, users can programmatically access web pages and retrieve information such as text, images, and links. Typically, it involves making HTTP requests to web servers and parsing the returned HTML or XML content to capture the required data.

What are the common applications of web scraping?

Web scraping has various applications, including data analysis for research, price comparison scraping for e-commerce, and aggregating real estate listings. Analysts use web scraping to gather extensive datasets from multiple sources, while businesses track competitors’ prices to ensure competitiveness and relevance in the market.

What scraping techniques are often used in web scraping?

Popular scraping techniques include HTML parsing, in which libraries like Beautiful Soup extract data from the HTML structure, and web crawling, often implemented with frameworks like Scrapy to traverse and scrape multiple pages efficiently. For dynamic content, browser automation tools like Selenium can drive a headless browser to render pages before the data is extracted.

What are some popular web scraping tools?

Some of the most popular web scraping tools include Beautiful Soup for parsing HTML, Scrapy for comprehensive web crawling, and Selenium for scraping dynamic web content. These tools cater to different needs, from simple data extraction to complex scraping projects.

Is web scraping legal?

While web scraping can be a valuable method for data collection, its legality depends on various factors, including the website’s terms of service and the type of data being scraped. Always ensure that scraping practices comply with legal regulations and respect robots.txt files.

How can I perform price comparison scraping effectively?

To perform effective price comparison scraping, select reliable web scraping tools like Scrapy or Beautiful Soup, identify competitor product URLs, and ensure your scripts parse the correct HTML elements. Additionally, set up a schedule to run your scraping tasks regularly for up-to-date price information.

What is the difference between web scraping and data extraction?

Web scraping is a specific form of data extraction that focuses on gathering information from websites. Data extraction can refer to any method of pulling data from various sources, including databases, APIs, or file formats, while web scraping specifically targets web pages.

Can I use web scraping for academic research?

Yes, web scraping can be a powerful tool for academic research, allowing researchers to compile large datasets for analysis. However, it’s essential to adhere to ethical guidelines and the respective websites’ terms of service when collecting data.

| Key Points | Details |
|---|---|
| What is Web Scraping? | The process of automatically extracting information from web pages. |
| Applications of Web Scraping | 1. **Data Analysis**: Collecting data for market research. 2. **Price Comparison**: Monitoring competitors’ prices. 3. **Real Estate Listings**: Aggregating property listings. |
| Popular Tools for Web Scraping | – **Beautiful Soup**: For parsing HTML and XML. – **Scrapy**: Open-source framework for data extraction. – **Selenium**: Automates web applications and scrapes content. |

Summary

Web scraping is an essential technique for extracting information from various websites, providing valuable data for different industries. By understanding its fundamentals, applications, and tools, individuals and businesses can harness the power of web scraping effectively. However, it is crucial to perform web scraping responsibly, adhering to legal guidelines and website policies.